Neural Amp Modeler (NAM) in HISE

-

@aaronventure said in Neural Amp Modeler (NAM) in HISE:

If you use the multi node to force mono processing and before that collapse the stereo signal into mono, you get roughly the same performance.

Haha wasn't all that drama about it being 8x slower than the NAM plugin?

It would be cool to be able to embed the .nam files into the plugin instead of having to install them separately.

Can't you just embed the JSON content of the NAM file into a script and it will be embedded in the plugin?

-

@Christoph-Hart said in Neural Amp Modeler (NAM) in HISE:

Haha wasn't all that drama about it being 8x slower than the NAM plugin?

I have no idea, I'm only now touching it for the first time.

I ran direct comparisons in Reaper: a Waveform Synth with NAM on stereo channels consumes 1% of CPU (20% of RT CPU), while two NAM plugins consume 0.9% of CPU and 18% of RT CPU in total.

So HISE is about 10% less efficient, which is good enough and entirely shippable if you ask me.
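As a rough check of the "about 10%" figure, using only the two measurements quoted above (one test in one host, so treat it as a ballpark):

```latex
\[
\frac{1.0\%}{0.9\%} \approx 1.11,
\qquad
\frac{20\%}{18\%} \approx 1.11
\]
```

Both ratios put the HISE version at roughly 11% more CPU than the two NAM plugin instances.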

@Christoph-Hart said in Neural Amp Modeler (NAM) in HISE:

Can't you just embed the JSON content of the NAM file into a script and it will be embedded in the plugin?

I sure can. I feel silly now. Thank you.

-

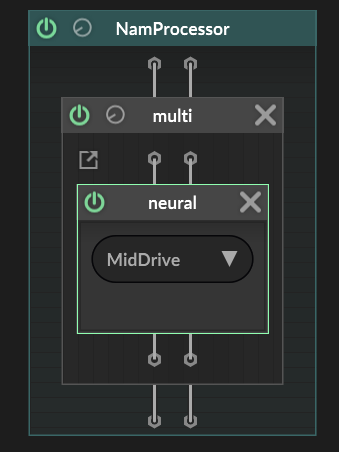

I'm seeing about 25% here with this. Not sure I understood about the multi container thing.

-

@Orvillain you can still see the two cables going into it. Your multi node should contain a neural node and an empty chain node.
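For anyone unsure what the mono trick is doing, here is a conceptual sketch in plain JavaScript. It is not how scriptnode implements it (there it is done with nodes, not script code). `processCollapsedMono` and `model` are hypothetical names, and copying the processed signal to both outputs is an assumption about how the rest of the network is wired.

```javascript
// Conceptual sketch only: scriptnode does this with nodes, not with script code.
// `model` is a hypothetical stand-in for the neural node: a function that
// maps one input sample to one output sample.
function processCollapsedMono(left, right, model) {
    var numSamples = Math.min(left.length, right.length);
    var out = [];

    for (var i = 0; i < numSamples; i++) {
        // 1. Collapse the stereo pair into a single mono sample.
        var mono = 0.5 * (left[i] + right[i]);

        // 2. Run the expensive model once per sample instead of once per channel.
        out.push(model(mono));
    }

    // 3. Assumption: the processed mono signal feeds both output channels.
    return { left: out, right: out.slice() };
}
```

The point is only that the model runs once per sample rather than once per channel, which is where the CPU saving would come from.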

-

How well does this NAM implementation handle different sample rates? I know some get tripped up if you try to run at a sample rate that's different from the one your model was trained on.

-

Strange....

I'm getting a "function not found" error when calling loadNAMModel.

-

@Christoph-Hart said in Neural Amp Modeler (NAM) in HISE:

Haha wasn't all that drama about it being 8x slower than the NAM plugin?

I think it was the AidaX, not NAM: https://forum.hise.audio/topic/11326/8-times-more-cpu-consumption-on-aida-x-neural-models

@aaronventure So, apart from AidaX, NAM performance is close to its own plugin, right? Great news then.

-

@aaronventure said in Neural Amp Modeler (NAM) in HISE:

@Christoph-Hart said in Neural Amp Modeler (NAM) in HISE:

Can't you just embed the JSON content of the NAM file into a script and it will be embedded in the plugin?

I sure can. I feel silly now. Thank you.

Have you ever tried embedding NAM content as JSON like @Christoph-Hart advised?

As you mentioned above, I can open them externally, but embedding doesn't work here.

-

@JulesV i haven't gotten around to actually implementing this, but it should work, instead of just reading the file as object, i would just paste the data in there
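A minimal sketch of the "paste the data in" idea as an offline helper, assuming the .nam file is plain JSON as discussed above. This is ordinary JavaScript meant to run outside HISE; `namToScript`, `Model1` and the tiny example data are hypothetical and not part of any HISE API.

```javascript
// Offline helper (plain JavaScript, run outside HISE), not part of the HISE API.
// `namToScript` and the variable name `Model1` are hypothetical names.
function namToScript(namText, variableName) {
    // A .nam file is JSON, so parsing it first catches a truncated or corrupt file.
    var model = JSON.parse(namText);

    // Re-serialise without whitespace to keep the generated script compact.
    return "const " + variableName + " = " + JSON.stringify(model) + ";\n";
}

// Tiny stand-in for real .nam contents, for illustration only (not a real model).
var exampleNam = '{"version": "0.5.0", "weights": [0.25, -0.5]}';
var script = namToScript(exampleNam, "Model1");
```

The returned text is what would be saved as a script file and handed to `loadNAMModel`. Whether HISE copes well with a very large literal is a separate question that this sketch does not answer.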

-

@aaronventure said in Neural Amp Modeler (NAM) in HISE:

@JulesV i haven't gotten around to actually implementing this, but it should work, instead of just reading the file as object, i would just paste the data in there

This is the NAM file (to upload it here I changed the extension to .txt, so you need to change it back to .nam after downloading): A73.txt

Using this externally works. But when embedded as JSON, HISE cannot load this complex data, which has a lot of weights; it hangs and then crashes.

-

@JulesV Don't know if this helps but I have this working fine in my current project:

    // ML model loader
    const neuralNetwork = Engine.createNeuralNetwork("Plasma");
    const namModel = FileSystem.getFolder(FileSystem.AudioFiles).getChildFile("Plasma.json").loadAsObject();
    neuralNetwork.loadNAMModel(namModel);

I renamed my NAM model to a JSON, in this case Plasma.json, and put it in AudioFiles, as I found Samples wasn't working reliably for me.

This then pops up in the Neural node in scriptnode no problem. This is a 1000 Epoch NAM model, so the JSON is pretty big and has a lot of weights.

Again, hope this helps!

And thanks so much @aaronventure for the help getting this going, it's been a bit of a game changer for me distortion wise!

-

@Lurch said in Neural Amp Modeler (NAM) in HISE:

EDIT: Ignore the Const Var at the end there, copied by accident, doing a few things at once.

this is funny because you could've used the edit to remove it

-

@aaronventure it's been a long week

-

@Lurch I also got the NAM files working and loading pretty easily, just by making a "Neural1.js" file in my project Scripts folder. Inside the .js file is just

    const Model1 = {paste the entire .nam file contents into here};

and the basic way I was loading it (this can be iterated with functions for multiple models/ComboBox control too) is roughly like this:

    include("Neural1.js");
    const namModel1 = Engine.createNeuralNetwork("NN_1");
    namModel1.loadNAMModel(Model1);

For loading multiple NAM files, I am using a branch node within ScriptFX to switch between multiple neural nodes, which I've found allows a maximum of 16 child nodes when turned into a hardcoded master FX. You also have to manually select the network in every neural node's dropdown within ScriptFX; it would be amazing to have it auto-select/load it somehow.

If anyone knows a more memory-efficient way of using multiple neural nodes, or how to get over the 16-child limit of a branch node (not in the documentation, to my knowledge), that would be amazing!
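A rough sketch of what "iterated with functions for multiple models" could look like, written as plain JavaScript so it can be tried outside HISE. `loadAllModels` and `createNetwork` are hypothetical names; inside HISE, `createNetwork` would be a small wrapper around `Engine.createNeuralNetwork(id)`. It does nothing about the 16-child limit or the manual dropdown selection.

```javascript
// Sketch of "iterate with a function" for several embedded models.
// `loadAllModels` and `createNetwork` are hypothetical; inside HISE,
// `createNetwork` would wrap Engine.createNeuralNetwork(id).
function loadAllModels(models, createNetwork) {
    var networks = [];

    for (var i = 0; i < models.length; i++) {
        // Follows the "NN_1", "NN_2", ... naming used above.
        var id = "NN_" + (i + 1);
        var network = createNetwork(id);

        // Each network gets its own embedded model object.
        network.loadNAMModel(models[i]);
        networks.push(network);
    }

    return networks;
}
```

Each entry in `models` would be one of the embedded objects such as `Model1`.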

-

@Sawatakashi sure i'll share a simple setup later on when i get a free moment!

-

@sinewavekid can you give me an example to make this work?

-

@sinewavekid Yes, i would love an example snippet too

-

do you need to add anything to the extra preprocessor definitions when building in projucer to get this stuff to work properly? had to rebuild and figured I'd consult you guys before I move on.