Don't know if this is the done thing really, but I wanted to show off:

https://youtu.be/1kMHloRQLcM

Best posts made by Orvillain

-

I wrote a reverb (posted in C++ Development)

-

I wrote a bbd delay (posted in C++ Development)

Again, wasn't sure where to put this. But I created my own node.

Modelled analog bucket brigade delay. I'm starting to build up quite a nice collection of delay and reverb utilities now!

-

RE: I wrote a reverb (posted in C++ Development)

@Chazrox I might do a video or two on everything I've learned!

-

RE: Third party HISE developers (posted in General Questions)

I'm actually moving into more JUCE C++ based projects now. But I've spent 18 years in music tech. Used to work for FXpansion, then ROLI, then inMusic until mid last year when I made the transition to developer. I've got a couple of HISE projects on the go right now - more synths and effects than sample libraries at the moment, but I've done sample libraries throughout my career, with a specific focus on drum libraries. I have a few Kontakt ports in the pipeline for this year too.

Eventually will launch my own plugin company as well. That is the goal.

-

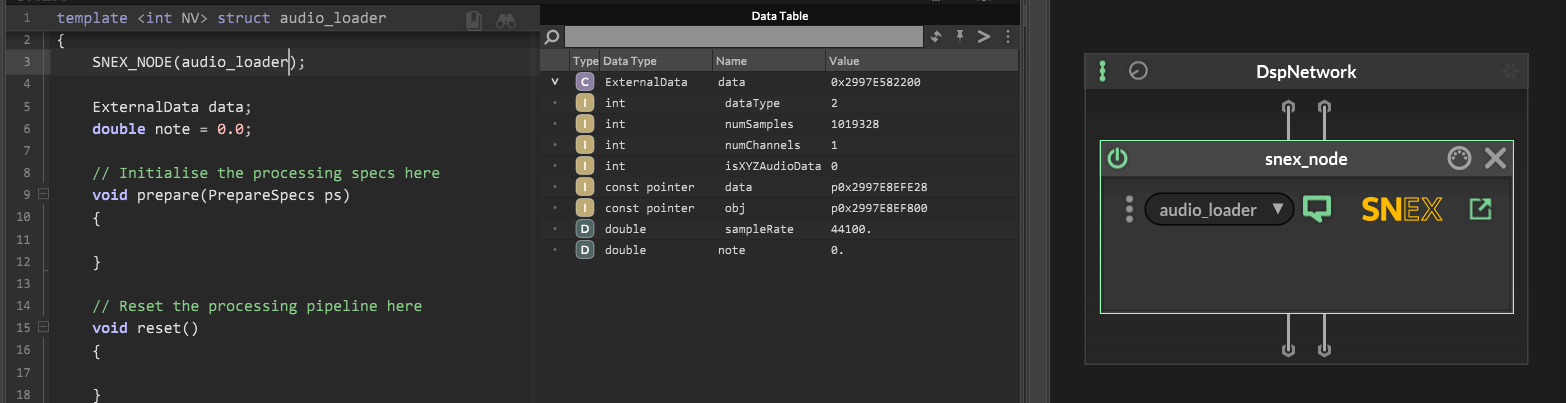

RE: Orv's ScriptNode+SNEX Journey (posted in ScriptNode)

Lesson 5 - SNEX code in a bit more detail.

So I'm by no means an expert in C or C++ - in fact I only just recently started learning it. But here's what I've sussed out in regards to the HISE template.... and template is exactly the right word, because the first line is:

    template <int NV> struct audio_loader

Somewhere under the hood, HISE must be set up to send an integer into any SNEX node, that integer corresponding to a voice. NV = new voice perhaps, or number of voices ????

The line above declares a template that takes this NV integer in, and creates a struct called audio_loader for each instance of NV. Indeed we can prove this by running the following code:

    template <int NV> struct audio_loader
    {
        SNEX_NODE(audio_loader);

        ExternalData data;
        double note = 0.0;

        // Initialise the processing specs here
        void prepare(PrepareSpecs ps)
        {
        }

        // Reset the processing pipeline here
        void reset()
        {
        }

        // Process the signal here
        template <typename ProcessDataType> void process(ProcessDataType& data)
        {
        }

        // Process the signal as frame here
        template <int C> void processFrame(span<float, C>& data)
        {
        }

        // Process the MIDI events here
        void handleHiseEvent(HiseEvent& e)
        {
            // Assign to the member declared above, rather than declaring a new local that shadows it
            note = e.getNoteNumber();
            Console.print(note);
        }

        // Use this function to setup the external data
        void setExternalData(const ExternalData& d, int index)
        {
            data = d;
        }

        // Set the parameters here
        template <int P> void setParameter(double v)
        {
        }
    };

There are only three things happening here:

- We set the ExternalData as in a previous post.

- We establish a variable with the datatype of double called 'note' and we initialise it as 0.0. But this value will never hold because....

- In the handleHiseEvent() method, we use e.getNoteNumber() and we assign this to the note variable. We then print the note variable out inside of the handleHiseEvent() method.

Now when we run this script, any time we play a MIDI note, the console will show us the note number that we pressed. This is even true if you play chords, or in a scenario where no note off events occur.

That's a long-winded way of saying that a SNEX node is run for each active voice; at least when it is within a ScriptNode Synthesiser DSP network.
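For anyone else new to C++ templates, here is a minimal plain C++ sketch (not SNEX, and not how HISE implements it) of what an integer template parameter does. It assumes NV means "number of voices", which is only one of the two guesses above. The names voice_state_demo, mono_demo and poly_demo are made up for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <type_traits>

// Hypothetical stand-in for a node struct: NV is a compile-time integer,
// assumed here to mean "number of voices".
template <int NV> struct voice_state_demo
{
    static_assert(NV > 0, "need at least one voice");

    // One slot per voice, sized at compile time from NV.
    std::array<double, NV> lastNote{};

    static constexpr int numVoices() { return NV; }

    // Store a note number for one voice.
    void setNote(int voiceIndex, double noteNumber)
    {
        assert(voiceIndex >= 0 && voiceIndex < NV);
        lastNote[static_cast<std::size_t>(voiceIndex)] = noteNumber;
    }
};

// Each distinct value of NV produces a distinct, unrelated type.
using mono_demo = voice_state_demo<1>;
using poly_demo = voice_state_demo<8>;

static_assert(!std::is_same<mono_demo, poly_demo>::value,
              "different NV, different type");
```

The point is that the integer is baked in when the code is compiled, so the struct can size its per-voice storage without any runtime allocation.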

The next line in the script after the template is established is:

    SNEX_NODE(audio_loader);

This is pretty straightforward. The text you pass here has to match the name of the script loaded inside your SNEX node - not the name of the SNEX node itself.

Here you can see my SNEX node is just called: snex_node.

But the script loaded into it is called audio_loader, and so the reference to SNEX_NODE inside the script has to also reference audio_loader.

-

RE: Need filmstrip animations (posted in General Questions)

@d-healey I really like that UI. Very simple, accessible, and smooth looking - for lack of a better word!

-

RE: Third party HISE developers (posted in General Questions)

@HISEnberg said in Third party HISE developers:

A bit abashed posting here with so much talent but here it goes!

Wouldn't worry about that, you've been very helpful in getting me up and running in various threads! So thanks! (and to everyone else also!)

-

RE: Transient detection within a loaded sampler - SNEX ???? (posted in General Questions)

@HISEnberg

Yes, I wrote a custom transient detector in a C++ node, and made sure it utilised an audio file, which I can load in my UI using the audio waveform floating tile. I implemented spectral flux extraction:

- Take your audio.

- Perform an FFT on it.

- Extract the spectral flux envelope from the FFT.

- Downsample the spectral flux envelope (optional but can help accuracy)

- Perform peak picking on the spectral flux envelope.

I used the stock JUCE FFT processor.
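Here is a rough sketch of those steps in plain C++, with assumptions called out. It is not the code from my node: it swaps the JUCE FFT for a naive DFT so that it has no dependencies, it skips the optional downsampling step, and it uses a fixed threshold for peak picking. The function names are made up for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Magnitude spectrum (bins 0..N/2) of one Hann-windowed frame.
// Uses a naive O(N^2) DFT as a stand-in for a real FFT.
std::vector<double> magnitudeSpectrum(const std::vector<double>& x,
                                      std::size_t start, std::size_t frameSize)
{
    const double pi = 3.14159265358979323846;
    std::vector<double> mags(frameSize / 2 + 1, 0.0);
    for (std::size_t k = 0; k < mags.size(); ++k)
    {
        double re = 0.0, im = 0.0;
        for (std::size_t n = 0; n < frameSize; ++n)
        {
            const double w = 0.5 - 0.5 * std::cos(2.0 * pi * n / frameSize);
            const double phase = 2.0 * pi * k * n / frameSize;
            re += w * x[start + n] * std::cos(phase);
            im -= w * x[start + n] * std::sin(phase);
        }
        mags[k] = std::sqrt(re * re + im * im);
    }
    return mags;
}

// Spectral flux envelope: for each frame, the sum of the positive
// magnitude increases relative to the previous frame.
std::vector<double> spectralFlux(const std::vector<double>& x,
                                 std::size_t frameSize, std::size_t hop)
{
    assert(frameSize > 0 && hop > 0);
    std::vector<double> flux;
    std::vector<double> prev(frameSize / 2 + 1, 0.0);
    for (std::size_t start = 0; start + frameSize <= x.size(); start += hop)
    {
        const std::vector<double> mags = magnitudeSpectrum(x, start, frameSize);
        double sum = 0.0;
        for (std::size_t k = 0; k < mags.size(); ++k)
            sum += std::max(0.0, mags[k] - prev[k]);
        flux.push_back(sum);
        prev = mags;
    }
    return flux;
}

// Peak picking: indices of local maxima that exceed a fixed threshold.
std::vector<std::size_t> pickPeaks(const std::vector<double>& flux, double threshold)
{
    std::vector<std::size_t> peaks;
    for (std::size_t i = 1; i + 1 < flux.size(); ++i)
        if (flux[i] > threshold && flux[i] > flux[i - 1] && flux[i] >= flux[i + 1])
            peaks.push_back(i);
    return peaks;
}
```

Each peak index is a frame number, so multiplying by the hop size gives an approximate sample position for the transient. In practice an adaptive threshold (for example a running median of the flux) tends to be more robust than a fixed one.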

-

RE: Can We PLEASE Just Get This Feature DONE (posted in Feature Requests)

Free mankini with every commercial license???

-

RE: I wrote a reverb (posted in C++ Development)

@Chazrox said in I wrote a reverb:

@Orvillain Please.

I've been waiting for some dsp videos! I've been watching ADC's every day on baby topics just to familiarize myself with the lingo and what nots. I think I'm ready to start diving in! There are some pretty wicked dsp guys in here for sure and I'd love to get some tutorials for writing c++ nodes.

There's two guys who got me started in this. One is a dude called Geraint Luff aka SignalSmith. This is probably his most accessible video:

https://youtu.be/6ZK2Goiyotk

Then the other guy of course is Sean Costello of ValhallaDSP fame:

https://valhalladsp.com/2021/09/22/getting-started-with-reverb-design-part-2-the-foundations/

https://valhalladsp.com/2021/09/23/getting-started-with-reverb-design-part-3-online-resources/

In essence, here's the journey; assuming you know at least a little bit of C++:

- Learn how to create a ring buffer (aka my Ring Delay thread)

- Learn how to create an all-pass filter using a ring buffer.

- Understand how fractional delays work, and the various types of interpolation.

- Learn how to manage feedback loops.

Loads of resources out there for sure!
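To make the first three steps of that journey concrete, here is a minimal sketch under stated assumptions: a ring buffer with a linearly interpolated fractional read, and a Schroeder all-pass built on top of it. It is not the code from my nodes. The class names are made up, and linear interpolation is just the simplest of the interpolation types.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Ring-buffer delay line with a fractional (linearly interpolated) read.
class RingDelay
{
public:
    explicit RingDelay(std::size_t maxDelaySamples)
        : buffer(maxDelaySamples + 2, 0.0f) {}

    // Write one sample and advance the write head, wrapping at the end.
    void push(float x)
    {
        buffer[writeIndex] = x;
        writeIndex = (writeIndex + 1) % buffer.size();
    }

    // Read delaySamples behind the most recent write (0 = last pushed sample).
    float read(float delaySamples) const
    {
        const std::size_t n = buffer.size();
        assert(delaySamples >= 0.0f && delaySamples <= static_cast<float>(n - 2));
        const std::size_t whole = static_cast<std::size_t>(delaySamples);
        const float frac = delaySamples - static_cast<float>(whole);
        const std::size_t newer = (writeIndex + n - 1 - whole) % n;
        const std::size_t older = (newer + n - 1) % n;
        // Blend between the two neighbouring samples.
        return buffer[newer] + frac * (buffer[older] - buffer[newer]);
    }

private:
    std::vector<float> buffer;
    std::size_t writeIndex = 0;
};

// Schroeder all-pass: v[n] = x[n] + g * v[n - D],  y[n] = v[n - D] - g * v[n]
class AllPass
{
public:
    AllPass(std::size_t delaySamples, float gain)
        : delay(delaySamples), d(static_cast<float>(delaySamples)), g(gain)
    {
        assert(delaySamples >= 1);
    }

    float process(float x)
    {
        // The newest stored value is v[n - 1], so v[n - D] sits D - 1 behind it.
        const float delayed = delay.read(d - 1.0f);
        const float v = x + g * delayed;
        delay.push(v);
        return delayed - g * v;
    }

private:
    RingDelay delay;
    float d;
    float g;
};
```

The feedback path inside AllPass is also the smallest example of managing a feedback loop: keeping the magnitude of g below 1 keeps the loop stable.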

Latest posts made by Orvillain

-

RE: HISE Transformation to the new age (posted in AI discussion)

@David-Healey said in HISE Transformation to the new age:

@dannytaurus said in HISE Transformation to the new age:

Nah, this is the start.

Tell me that again in 5 years

I'm not quite as cynical as I seem, I use AI all the time, I'm just cautious.

I also am concerned about AI inbreeding which is a real problem with limited solutions at the moment.

Honestly, this stuff isn't going away. It really isn't. This is the future of coding. Developers in future will be systems architects. They won't be solely opinionated language purists anymore.

-

RE: Agentic coding workflows (posted in AI discussion)

I've used Antigravity to get myself up to speed with JUCE. I've built quite a few things outside of HISE using it. To the point where for my own company, I don't think I will need HISE. For freelance work, HISE is still a solid option. But I saw a competitor today show off his AI plugin generator, and it was very impressive.

In terms of the HISE-specific code I've been doing with it: certainly not entire namespaces or anything to do with the UI. But methods and functions here and there, where I knew what I wanted to do and just wanted AG to write it faster for me.

I've had more success with AG than ChatGPT on this stuff. Although ChatGPT is actually quite good at writing prompts.

And on a slight tangent, one of the biggest hurdles to writing complex projects in HISE is just how many languages and approaches you need to take command of. In C++ I can focus on JUCE and C++. In HISE, I need to learn HISEscript, C++, SNEX, simple markdown, and even CSS. This is a barrier to speed.

-

RE: Using custom preset system - as in the actual presets themselves, not a browser (posted in General Questions)

@DanH said in Using custom preset system - as in the actual presets themselves, not a browser:

@ustk ok got it working. Is it possible to update the .preset file without using Engine.saveUserPreset?

Why do you want to do this?

I'm doing this when I save my custom fx chain format:

    inline function saveFXChainPreset()
    {
        FileSystem.browse(FileSystem.getFolder(FileSystem.UserPresets), true, "*.fxchain", function (f)
        {
            if (!isDefined(f) || f == 0)
                return;

            PluginSharedData.presetMode = "FXChain";

            // Get the data object directly from our custom save logic
            var data = PluginUserPresetHandling.onPresetSave();
            f.writeObject(data);
        });
    }

The key being: set up a file reference, and then call f.writeObject(blahblah) on it.

-

RE: Using custom preset system - as in the actual presets themselves, not a browser (posted in General Questions)

@ustk Yep, indeed you can! The XML data you get from the module state call isn't pretty, but it does work. I think you'd do that if you had some module that did not have any UI controls, but you still wanted the preset to dictate its internal state when loading or saving.

-

RE: Using custom preset system - as in the actual presets themselves, not a browser (posted in General Questions)

@Christoph-Hart along these lines....

Calling:

    updateSaveInPresetComponents(params)

does indeed have the effect of setting any non-specified parameters to their default value. This is not optimal for all use cases, for example my FX chain use case. What is happening is that my synthesis generators are being reset to their default state, and the only way I can see to avoid this is by iterating over all the controls I do want to edit, and calling .setValue and then .changed() on them... which is actually quite slow, it turns out.

Could anything be done about this???

-

RE: Using custom preset system - as in the actual presets themselves, not a browser (posted in General Questions)

We're covering a lot of this in this thread:

https://forum.hise.audio/topic/13701/custom-browser-custom-preset-file-format/29

Basically, you've got this:

    namespace PluginUserPresetHandling
    {
        const UserPresetHandler = Engine.createUserPresetHandler();

        // this is your main preset save method
        inline function onPresetSave()
        {
            Console.print("onPresetSave triggered");
        }

        // this is your main preset load method
        inline function onPresetLoad(obj)
        {
            Console.print("onPresetLoad triggered");
        }

        // things you want to happen before loading a preset happen here - looking for samples, looking for graphical assets, etc.
        inline function preLoadCallback()
        {
            Console.print("preLoadCallback triggered");
        }

        // things you want to happen after loading a preset happen here - updating preset name labels in your UI, triggering other UI updates, etc.
        inline function postLoadCallback()
        {
            Console.print("postLoadCallback triggered");
        }

        // things you want to happen after saving a preset happen here - copying samples to an external location, removing any dirty flags you might've setup in the UI layer, etc.
        inline function postSaveCallback()
        {
            Console.print("postSaveCallback triggered");
        }

        inline function init()
        {
            UserPresetHandler.setUseCustomUserPresetModel(onPresetLoad, onPresetSave, false); // this line is essential
            UserPresetHandler.setPreCallback(preLoadCallback);
            UserPresetHandler.setPostCallback(postLoadCallback);
            UserPresetHandler.setPostSaveCallback(postSaveCallback);
        }
    }

You can achieve a hell of a lot with this, without discarding the HISE preset system.

For example, here is my onPresetSave method in my current project:

    inline function onPresetSave()
    {
        Console.print("onPresetSave triggered");
        PluginSharedHelpers.forceAllOuterSlotsEnabled();

        if (PluginSharedData.presetMode == "Global")
        {
            return saveGlobalPreset();
        }

        if (PluginSharedData.presetMode == "FXChain")
        {
            return saveFXChain();
        }
    }

In this way, I'm able to gate different save functions based on a master type, which means I can either write the HISE .preset file, or I can write a custom file using my own data model.

My load one is this:

    inline function onPresetLoad(obj)
    {
        Console.print("onPresetLoad triggered");
        PluginSharedData.isRestoringPreset = true;

        if (PluginSharedData.presetMode == "Global")
        {
            loadGlobalPreset(obj);
        }

        if (PluginSharedData.presetMode == "FXChain")
        {
            loadFXChain(obj);
        }

        PluginSharedData.isRestoringPreset = false;
    }

The loadFXChain method is this:

    inline function loadFXChain(obj)
    {
        if (!isDefined(obj))
            return;

        Console.print("we are now attempting to load an fx chain");
        PluginSharedHelpers.forceAllOuterSlotsEnabled();

        local fxSelections = obj.fxSelections;
        local fxChainOrder = obj.fxChainOrder;
        local params = obj.parameters;

        // Set effect menus by stable id (this loads the networks)
        if (isDefined(fxSelections))
        {
            for (i = 0; i < fxSelections.length; i++)
            {
                local sel = fxSelections[i];

                if (!isDefined(sel) || !isDefined(sel.id))
                    continue;

                local idName = (isDefined(sel.idName) && sel.idName != "") ? sel.idName : "empty";
                UIEffectDropDownMenu.setMenuToId(sel.id, idName, true); // fire callback
            }
        }

        // Restore FX parameters for the current fxchainScope, skipping selectors (already set)
        if (isDefined(params))
        {
            for (i = 0; i < params.length; i++)
            {
                local p = params[i];

                if (!isDefined(p) || !isDefined(p.id))
                    continue;

                if (!_isFxParam(p.id))
                    continue;

                if (p.id.contains("EffectSelector"))
                    continue; // handled above

                local c = Content.getComponent(p.id);

                if (!isDefined(c))
                    continue;

                c.setValue(p.value);
                c.changed();
            }
        }
    }

And you can hopefully see that what this does is skip userPresetLoad altogether - instead it manually iterates over whatever data exists in the file, finds the right UI component, and sets the value. This allows me to circumvent some of the HISE assumptions; namely that if a UI component is not specified when loading a user preset, it gets reset to the default value. Which is super no bueno.

Bear in mind some of this stuff can be asynchronous. So I don't think you can always rely on the order of things.

-

RE: *sigh* iLok ...... (posted in General Questions)

Nope, and unless my arm is twisted up behind my back, snapped in 3 places, and someone smacks me over the head with a cueball in a sock, I won't be either.

-

RE: C++ External Node & XML Issues (posted in Bug Reports)

@Christoph-Hart said in C++ External Node & XML Issues:

@Orvillain I think the problem is that HISE converts Parameter IDs into actual attributes. This is only the case with hardcoded modules; script processors or DSP networks properly escape that in the value string.

    <Processor Type="Hardcoded Master FX" ID="HardcodedMasterFX1" Bypassed="0"
               Network="No network" YourParameterGoesHere="0.5" TryValidating(That)="nope">
      <EditorStates BodyShown="1" Visible="1" Solo="0"/>
      <ChildProcessors/>
      <RoutingMatrix NumSourceChannels="2" Channel0="0" Send0="-1" Channel1="1" Send1="-1"/>
    </Processor>

I do a bit of sanitizing at some place though (e.g. removing white space for the XML attribute), so all my ramblings might be moot because I sprinkled a character sanitation in there too.

ahhhhhhhhhhhhh, gotcha. Yes, then that does make sense that parentheses would possibly break things... and now I'm going to do a sweep through my code to see how many landmines I've invented.
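For that sweep, something like the following sketch could flag risky parameter IDs. It checks a name against the ASCII subset of the XML 1.0 "Name" production, which allows letters, digits, '_', '-', '.' and ':' (with restrictions on the first character), so parentheses and spaces fall outside it. This is not what HISE does internally, and both helper names are hypothetical.

```cpp
#include <cassert>
#include <string>

// ASCII subset of the XML 1.0 NameStartChar rule.
// (':' is legal but usually best avoided because of namespaces.)
bool isNameStartChar(char c)
{
    return (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z') || c == '_' || c == ':';
}

// ASCII subset of the XML 1.0 NameChar rule.
bool isNameChar(char c)
{
    return isNameStartChar(c) || (c >= '0' && c <= '9') || c == '-' || c == '.';
}

// True if the string could be used as an XML attribute name (ASCII only).
bool isValidXmlAttributeName(const std::string& name)
{
    if (name.empty() || !isNameStartChar(name[0]))
        return false;

    for (char c : name)
        if (!isNameChar(c))
            return false;

    return true;
}

// Hypothetical helper: replace disallowed characters with '_' and make
// sure the result starts with a legal first character.
std::string sanitiseParameterId(const std::string& id)
{
    std::string out;
    for (char c : id)
        out += isNameChar(c) ? c : '_';

    if (out.empty() || !isNameStartChar(out[0]))
        out.insert(out.begin(), '_');

    return out;
}
```

Note that sanitising can map two different IDs to the same name (for example "Gain (L)" and "Gain_(L)"), so it is probably safer to use the validity check to catch bad IDs at development time than to rewrite them silently.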

-

RE: C++ External Node & XML Issues (posted in Bug Reports)

@HISEnberg said in C++ External Node & XML Issues:

@Orvillain Strange, I am inclined to agree with you since I've done this with other nodes, but removing them in this particular context seems to have solved the problem. Still I can't find the root issue here so I'll keep digging...

That is very odd. There's nothing in the XML specification that says you shouldn't use parentheses in an attribute name, so far as I know. The only character to definitely be wary of in my experience is an ampersand.