I'm on the latest HISE build, with JUCE 6.

I am unable to export to VST3 on Windows.

Full console output:

Re-saving file: D:\Projects\Hise Projects\Griffin_Test\Binaries\AutogeneratedProject.jucer

Finished saving: Visual Studio 2026

Finished saving: Xcode (macOS)

Finished saving: Xcode (iOS)

Finished saving: Linux Makefile

Compiling 64bit FX plugin Griffin_Test ...

MSBuild version 18.3.0-release-26070-10+3972042b7 for .NET Framework

Plugin.cpp

PresetData.cpp

factory.cpp

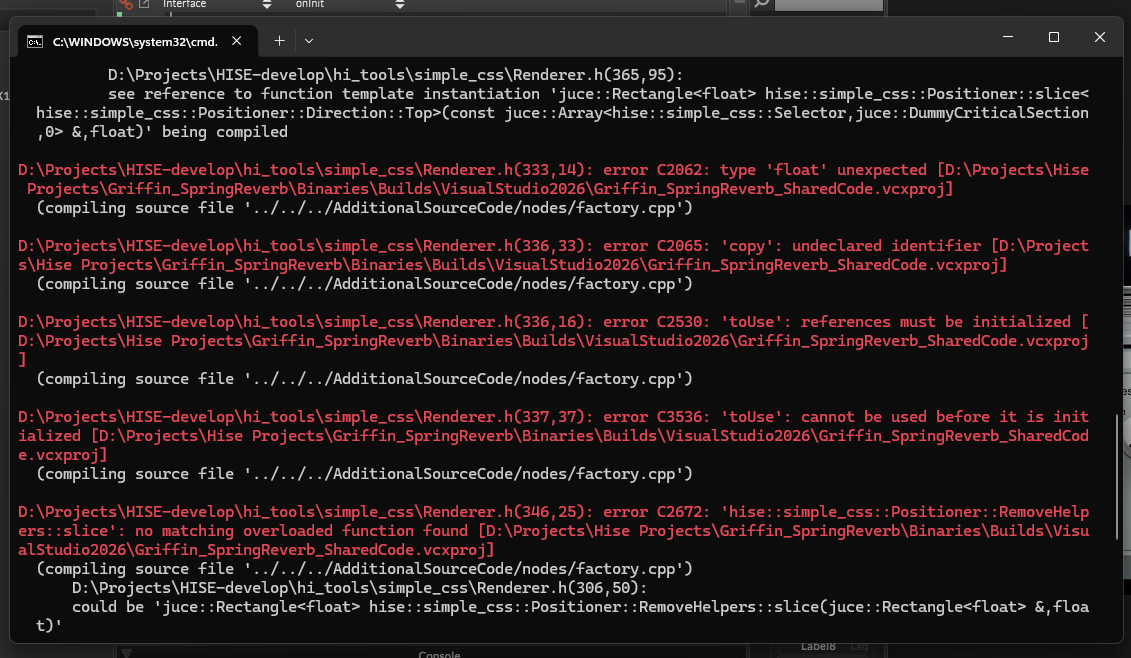

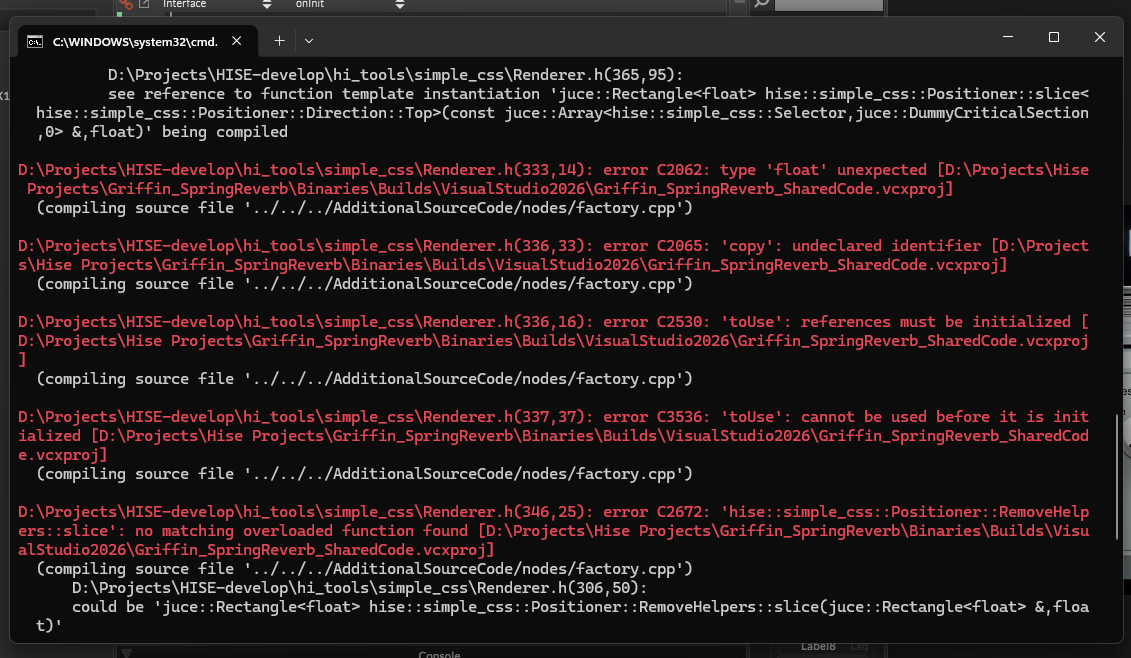

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(333,4): error C2872: 'Rectangle': ambiguous symbol [D:\Projects\Hise Projects\Griffin_Test\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

C:\Program Files (x86)\Windows Kits\10\Include\10.0.26100.0\um\wingdi.h(4639,24):

could be 'BOOL Rectangle(HDC,int,int,int,int)'

D:\Projects\HISE-develop\JUCE\modules\juce_graphics\geometry\juce_Rectangle.h(66,7):

or 'juce::Rectangle'

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(333,4):

the template instantiation context (the oldest one first) is

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(365,95):

see reference to function template instantiation 'juce::Rectangle<float> hise::simple_css::Positioner::slice<hise::simple_css::Positioner::Direction::Top>(const juce::Array<hise::simple_css::Selector,juce::DummyCriticalSection,0> &,float)' being compiled

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(333,14): error C2062: type 'float' unexpected [D:\Projects\Hise Projects\Griffin_SpringReverb\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(336,33): error C2065: 'copy': undeclared identifier [D:\Projects\Hise Projects\Griffin_Test\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(336,16): error C2530: 'toUse': references must be initialized [D:\Projects\Hise Projects\Griffin_Test\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(337,37): error C3536: 'toUse': cannot be used before it is initialized [D:\Projects\Hise Projects\Griffin_Test\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(346,25): error C2672: 'hise::simple_css::Positioner::RemoveHelpers::slice': no matching overloaded function found [D:\Projects\Hise Projects\Griffin_Test\Binaries\Builds\VisualStudio2026\Griffin_Test_SharedCode.vcxproj]

(compiling source file '../../../AdditionalSourceCode/nodes/factory.cpp')

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(306,50):

could be 'juce::Rectangle<float> hise::simple_css::Positioner::RemoveHelpers::slice(juce::Rectangle<float> &,float)'

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(346,25):

Failed to specialize function template 'juce::Rectangle<float> hise::simple_css::Positioner::RemoveHelpers::slice(juce::Rectangle<float> &,float)'

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(346,25):

With the following template arguments:

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(346,25):

'D=hise::simple_css::Positioner::Direction::Top'

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(306,33):

'Rectangle': ambiguous symbol

D:\Projects\HISE-develop\hi_tools\simple_css\Renderer.h(306,42):

syntax error: missing ';' before '<'

Press any key to continue . . .
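
As far as I can tell, every later error follows from the first one (C2872): inside `Renderer.h` the unqualified name `Rectangle` matches both the GDI function from `wingdi.h` and `juce::Rectangle`. Below is a minimal sketch of how I understand that kind of clash. It is not the HISE code: the `demo` namespace, the simplified `Rectangle` function signature, and `sliceTop` are stand-ins I made up so the snippet builds without the Windows SDK or JUCE.

```cpp
#include <cassert>

// Stand-in for the GDI function that wingdi.h declares at global scope:
//   BOOL Rectangle(HDC, int, int, int, int);
// Hypothetical simplified signature so the sketch builds on any platform.
int Rectangle(void* hdc, int left, int top, int right, int bottom)
{
    (void) hdc;
    return (right - left) * (bottom - top) > 0 ? 1 : 0;
}

// Stand-in for juce::Rectangle<T>; "demo" is a hypothetical namespace.
namespace demo
{
template <typename T>
struct Rectangle
{
    T x, y, w, h;

    // Cuts a strip off the top and returns it, shrinking this rectangle.
    Rectangle removeFromTop(T amount)
    {
        assert(amount >= T(0));
        if (amount > h)
            amount = h;

        Rectangle strip { x, y, w, amount };
        y += amount;
        h -= amount;
        return strip;
    }
};
} // namespace demo

// Mirrors the situation where both names are visible without qualification.
using namespace demo;

// Uncommenting the next line is expected to fail with an "ambiguous symbol"
// style error, because lookup finds ::Rectangle and demo::Rectangle:
// Rectangle<float> ambiguous;

// Qualifying the name sidesteps the ambiguity.
inline demo::Rectangle<float> sliceTop(demo::Rectangle<float>& area, float amount)
{
    return area.removeFromTop(amount);
}
```

If that reading is right, the clash only shows up in translation units where the Windows headers and the unqualified JUCE names are both visible, which would fit the log pointing at `AdditionalSourceCode/nodes/factory.cpp` each time.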

Is this a bug, or something I've not set up correctly on my end?