@Christoph-Hart said in 8 Times more CPU consumption on Aida-X Neural Models:

@Dan-Korneff alright then it stays, the people have spoken :)

Just out of curiosity: who is using this in a real project and does it work there? Like except for the NAM loader?

To be honest: I'm a bit "disincentivized" in fixing the NAM stuff because I don't see a use case except for loading in existing models from that one website with potential infringement of other people's work, but maybe my understanding of the possible use cases here is wrong.

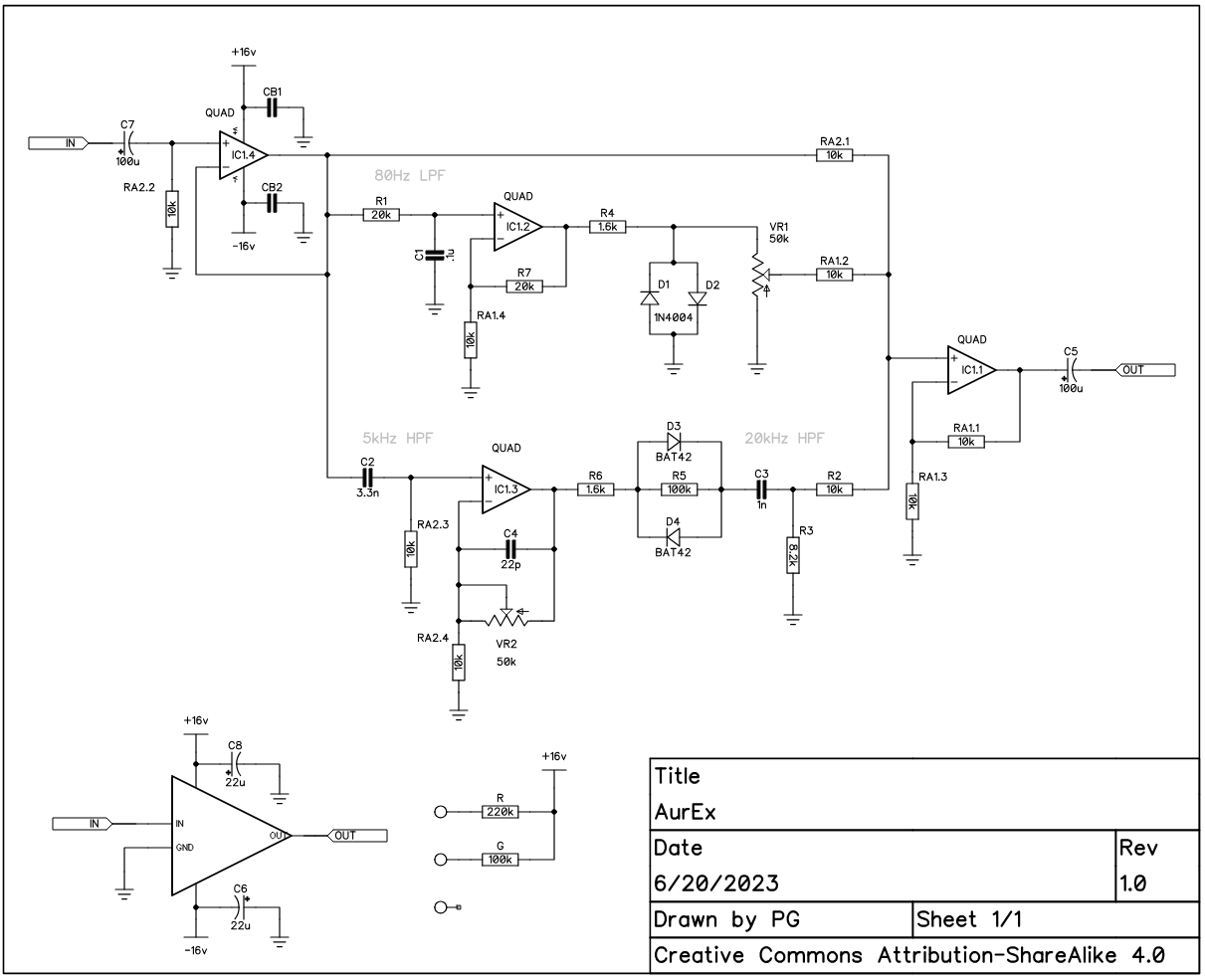

@Christoph-Hart I'm only interested in using models of things I built and trained myself. I plan on using this as part of a hybrid approach where I model certain physical circuits with NAM and then use DSP for other aspects. Not just grabbing stuff off ToneZone and putting a plugin wrapper around a single profile.

As @Dan-Korneff said, NAM has a much more solid and predictable training pipeline, not to mention an easy-to-use, free, fast, cloud-based platform for the training. That's what makes it desirable. There really are a lot of options to make really cool stuff.