Don't know if this is the done thing really, but I wanted to show off:

https://youtu.be/1kMHloRQLcM

Posts

-

I wrote a reverb (posted in C++ Development)

-

I wrote a bbd delay (posted in C++ Development)

Again, I wasn't sure where to put this, but I've created my own node.

It's a model of an analog bucket-brigade delay (BBD). I'm starting to build up quite a nice collection of delay and reverb utilities now!

-

RE: I wrote a reverb (posted in C++ Development)

@Chazrox I might do a video or two on everything I've learned!

-

RE: Third party HISE developers (posted in General Questions)

I'm actually moving into more JUCE C++-based projects now, but I've spent 18 years in music tech. I used to work for FXpansion, then ROLI, then inMusic until the middle of last year, when I made the transition to developer. I've got a couple of HISE projects on the go right now: more synths and effects than sample libraries at the moment, but I've done sample libraries throughout my career, with a specific focus on drum libraries. I have a few Kontakt ports in the pipeline for this year too.

Eventually I will launch my own plugin company as well. That is the goal.

-

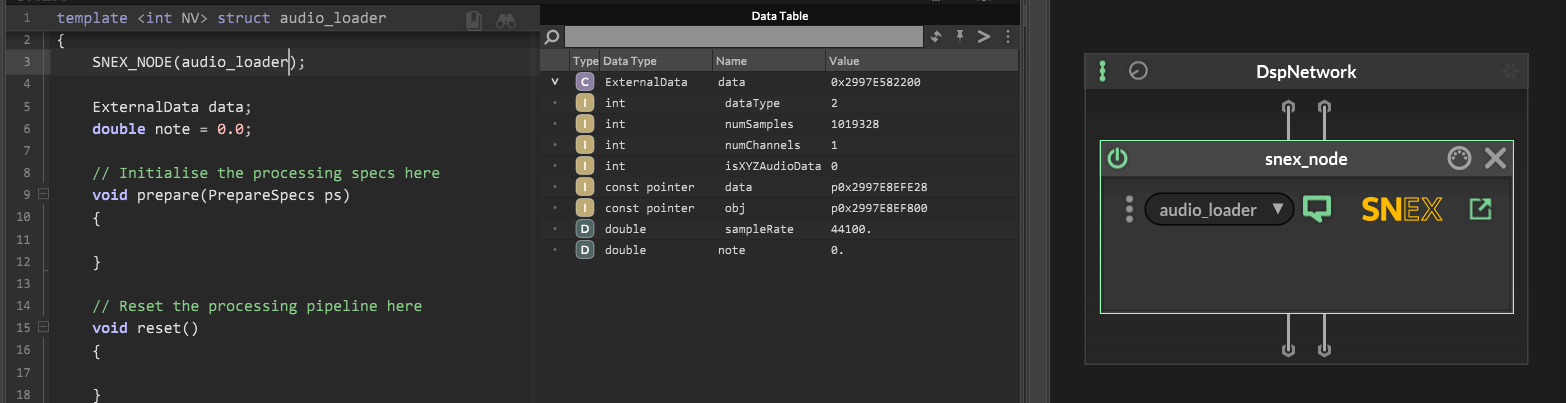

RE: Orv's ScriptNode+SNEX Journey (posted in ScriptNode)

Lesson 5 - SNEX code in a bit more detail.

So I'm by no means an expert in C or C++; in fact, I only recently started learning it. But here's what I've sussed out regarding the HISE template... and template is exactly the right word, because the first line is:

```cpp
template <int NV> struct audio_loader
```

Somewhere under the hood, HISE passes an integer into every SNEX node as this template parameter. NV is the number of voices: the C++ node template in the Ring Buffer design post on this page defines isPolyphonic() as NV > 1, so NV is 1 for a monophonic node and the voice count for a polyphonic one.

The line above declares a struct template called audio_loader that takes this NV integer as a template parameter; the compiler generates a separate audio_loader type for each NV value it is instantiated with. We can see how it behaves with voices by running the following code:

```cpp
template <int NV> struct audio_loader
{
    SNEX_NODE(audio_loader);

    ExternalData data;
    double note = 0.0;

    // Initialise the processing specs here
    void prepare(PrepareSpecs ps) {}

    // Reset the processing pipeline here
    void reset() {}

    // Process the signal here
    template <typename ProcessDataType> void process(ProcessDataType& data) {}

    // Process the signal as frame here
    template <int C> void processFrame(span<float, C>& data) {}

    // Process the MIDI events here
    void handleHiseEvent(HiseEvent& e)
    {
        // Assign to the member; writing "double note = ..." here would
        // declare a new local variable that shadows it.
        note = e.getNoteNumber();
        Console.print(note);
    }

    // Use this function to set up the external data
    void setExternalData(const ExternalData& d, int index)
    {
        data = d;
    }

    // Set the parameters here
    template <int P> void setParameter(double v) {}
};
```

There are only three things happening here:

- We store the ExternalData, as in a previous post.

- We declare a member variable of type double called 'note' and initialise it to 0.0. But this value won't hold for long, because...

- In the handleHiseEvent() method, we call e.getNoteNumber() and assign the result to the note variable. We then print note to the console from inside handleHiseEvent().

Now when we run this script, any time we play a MIDI note, the console shows the note number we pressed. This holds even if you play chords, or in a scenario where no note-off events occur.

That's a long-winded way of saying that a SNEX node runs for each active voice, at least when it is inside a ScriptNode Synthesiser DSP network.
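To see what the NV parameter does at the plain C++ level, here is a minimal sketch outside HISE. It assumes NV is the voice count, as the isPolyphonic() check in the RingBufferExp node suggests. audio_loader here is a hypothetical stand-in, and handleNoteOn() with a voiceIndex argument is invented for illustration; HISE routes voices its own way, so treat this as a picture of the template mechanics, not of HISE internals.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <type_traits>

// Hypothetical stand-in for a node template; NOT HISE's real API.
template <int NV>
struct audio_loader
{
    // Mirrors the node template in this thread: polyphonic when NV > 1.
    static constexpr bool isPolyphonic() { return NV > 1; }

    // One slot per voice, sized at compile time by NV.
    std::array<double, NV> notes{};

    // Hypothetical: the caller says which voice this event belongs to.
    void handleNoteOn(int voiceIndex, int noteNumber)
    {
        assert(voiceIndex >= 0 && voiceIndex < NV);
        notes[static_cast<std::size_t>(voiceIndex)] = noteNumber;
    }
};

// Each NV value produces a distinct type, checked at compile time.
static_assert(!std::is_same_v<audio_loader<1>, audio_loader<4>>);
static_assert(!audio_loader<1>::isPolyphonic());
static_assert(audio_loader<4>::isPolyphonic());
```

For example, audio_loader<4> poly; followed by poly.handleNoteOn(0, 60); and poly.handleNoteOn(1, 64); leaves 60 and 64 in the first two slots of poly.notes: one object holding several voices' worth of state.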

The next line in the script after the template is established is:

```cpp
SNEX_NODE(audio_loader);
```

This is pretty straightforward. The text you pass here has to match the name of the script loaded inside your SNEX node, not the name of the SNEX node itself.

Here you can see my SNEX node is just called snex_node.

But the script loaded into it is called audio_loader, and so the reference to SNEX_NODE inside the script has to also reference audio_loader.

-

RE: Need filmstrip animations (posted in General Questions)

@d-healey I really like that UI. Very simple, accessible, and smooth looking - for lack of a better word!

-

RE: Third party HISE developers (posted in General Questions)

@HISEnberg said in Third party HISE developers:

A bit abashed posting here with so much talent but here it goes!

Wouldn't worry about that, you've been very helpful in getting me up and running in various threads! So thanks! (and to everyone else also!)

-

RE: Transient detection within a loaded sampler - SNEX ???? (posted in General Questions)

@HISEnberg

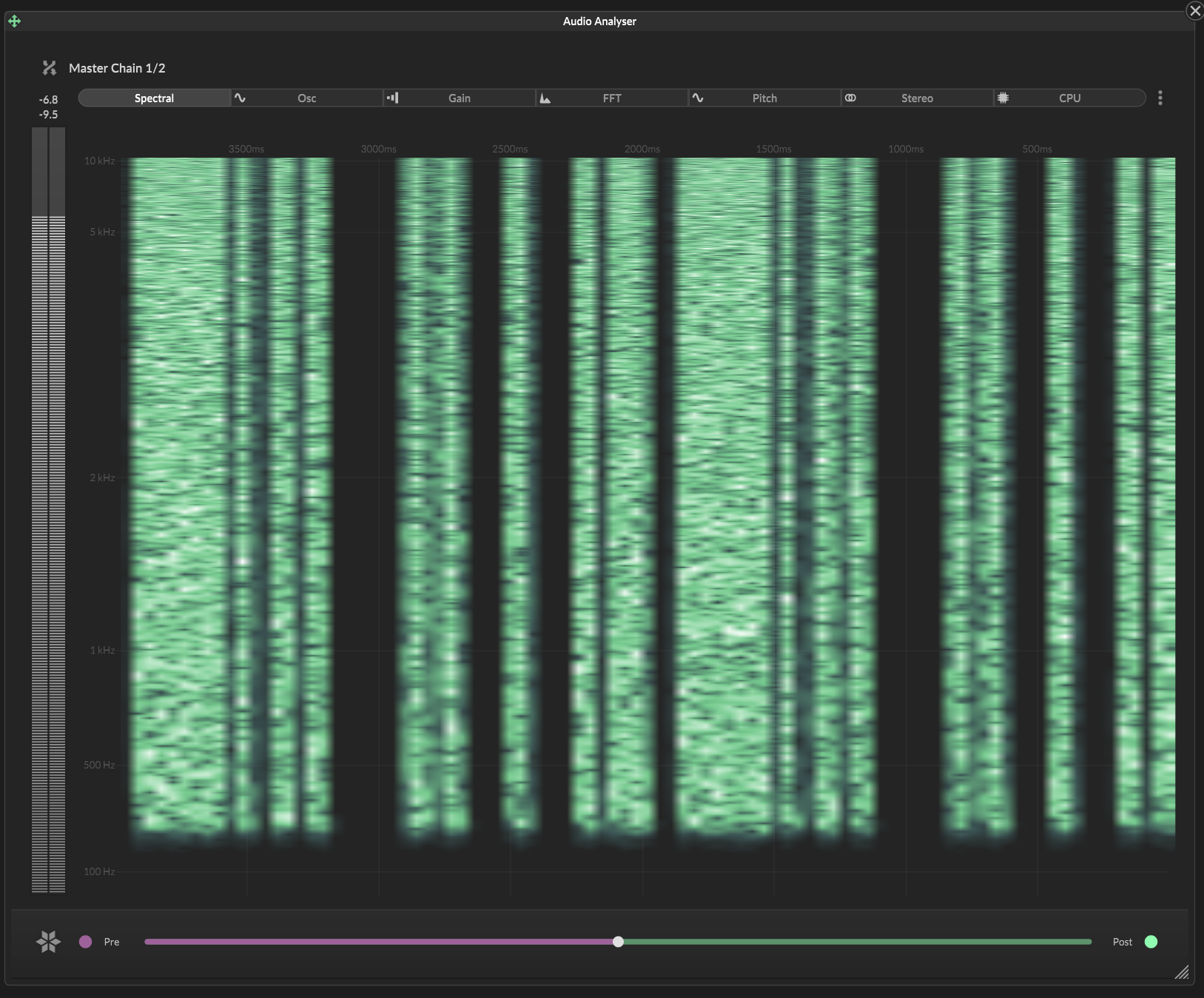

Yes, I wrote a custom transient detector in a C++ node, and made sure it uses an audio file, which I can load in my UI using the audio waveform floating tile. I implemented spectral flux extraction:

- Take your audio.

- Perform an FFT on it.

- Extract the spectral flux envelope from the FFT.

- Downsample the spectral flux envelope (optional but can help accuracy)

- Perform peak picking on the spectral flux envelope.

I used the stock JUCE FFT processor.
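The steps above, minus the optional downsampling, can be sketched in plain C++. This is a minimal sketch, not the detector from the post: it swaps a naive DFT in for the JUCE FFT so it has no dependencies, skips windowing (a real implementation would normally apply one, e.g. Hann), and picks peaks against a fixed threshold. The function names and the threshold are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <utility>
#include <vector>

// Magnitude spectrum of one frame via a naive O(N^2) DFT
// (stand-in for the JUCE FFT used in the post).
static std::vector<float> magnitudeSpectrum(const float* frame, std::size_t n)
{
    const double pi = 3.14159265358979323846;
    std::vector<float> mags(n / 2 + 1);
    for (std::size_t k = 0; k < mags.size(); ++k)
    {
        std::complex<double> sum{0.0, 0.0};
        for (std::size_t t = 0; t < n; ++t)
            sum += static_cast<double>(frame[t]) * std::polar(1.0, -2.0 * pi * double(k * t) / double(n));
        mags[k] = static_cast<float>(std::abs(sum));
    }
    return mags;
}

// Spectral flux envelope: per frame, the sum of positive magnitude
// increases relative to the previous frame.
std::vector<float> spectralFlux(const std::vector<float>& audio, std::size_t frameSize, std::size_t hop)
{
    assert(frameSize > 0 && hop > 0);
    std::vector<float> flux;
    std::vector<float> prev(frameSize / 2 + 1, 0.0f);
    for (std::size_t start = 0; start + frameSize <= audio.size(); start += hop)
    {
        std::vector<float> mags = magnitudeSpectrum(audio.data() + start, frameSize);
        float f = 0.0f;
        for (std::size_t k = 0; k < mags.size(); ++k)
            f += std::max(0.0f, mags[k] - prev[k]); // only count rising energy
        flux.push_back(f);
        prev = std::move(mags);
    }
    return flux;
}

// Peak picking: a frame is an onset if it is a local maximum above threshold.
std::vector<std::size_t> pickPeaks(const std::vector<float>& flux, float threshold)
{
    std::vector<std::size_t> peaks;
    for (std::size_t i = 0; i < flux.size(); ++i)
    {
        const float left = (i > 0) ? flux[i - 1] : 0.0f;
        const float right = (i + 1 < flux.size()) ? flux[i + 1] : 0.0f;
        if (flux[i] > threshold && flux[i] > left && flux[i] >= right)
            peaks.push_back(i);
    }
    return peaks;
}
```

Each returned frame index i corresponds to roughly sample i * hop in the source audio. A production detector would usually also want an adaptive threshold and a minimum gap between onsets.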

-

RE: Can We PLEASE Just Get This Feature DONE (posted in Feature Requests)

Free mankini with every commercial license???

-

RE: I wrote a reverb (posted in C++ Development)

@Chazrox said in I wrote a reverb:

@Orvillain Please.

I've been waiting for some dsp videos! I've been watching ADCs every day on baby topics just to familiarize myself with the lingo and whatnot. I think I'm ready to start diving in! There are some pretty wicked dsp guys in here for sure and I'd love to get some tutorials for writing c++ nodes.

There are two guys who got me started on this. One is a dude called Geraint Luff, aka SignalSmith. This is probably his most accessible video:

https://youtu.be/6ZK2Goiyotk

Then the other guy, of course, is Sean Costello of ValhallaDSP fame:

https://valhalladsp.com/2021/09/22/getting-started-with-reverb-design-part-2-the-foundations/

https://valhalladsp.com/2021/09/23/getting-started-with-reverb-design-part-3-online-resources/

In essence, here's the journey, assuming you know at least a little bit of C++:

- Learn how to create a ring buffer (see my Ring Delay thread).

- Learn how to create an all-pass filter using a ring buffer.

- Understand how fractional delays work, and the various types of interpolation.

- Learn how to manage feedback loops.

Loads of resources out there for sure!
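As a sketch of the first two steps, here is a minimal integer-delay ring buffer and a Schroeder all-pass built on it, assuming the textbook difference equations v[n] = x[n] + g*v[n-D] and y[n] = -g*v[n] + v[n-D]. The struct names are hypothetical, and the fractional-delay and interpolation step is left out; the RingDelay in the Ring Buffer design post on this page shows a cubic read.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal ring buffer: power-of-two size so the write index wraps with a mask.
struct Ring
{
    std::vector<float> buf;
    int w = 0;    // next write position
    int mask = 0; // size - 1

    void setSize(int minCapacity)
    {
        int n = 1;
        while (n < minCapacity) n <<= 1;
        buf.assign(static_cast<std::size_t>(n), 0.0f);
        mask = n - 1;
        w = 0;
    }

    // The value written `delay` samples ago; call before the next push().
    float read(int delay) const
    {
        assert(delay >= 1 && delay <= mask + 1);
        return buf[static_cast<std::size_t>((w - delay) & mask)];
    }

    void push(float x)
    {
        buf[static_cast<std::size_t>(w)] = x;
        w = (w + 1) & mask;
    }
};

// Schroeder all-pass: feedback g around a delay line, plus -g feed-forward.
struct AllPass
{
    Ring ring;
    int delay = 1;
    float g = 0.5f;

    void prepare(int delaySamples, float gain)
    {
        delay = delaySamples;
        g = gain;
        ring.setSize(delaySamples);
    }

    float process(float x)
    {
        const float delayed = ring.read(delay); // v[n-D]
        const float v = x + g * delayed;        // feedback path
        ring.push(v);
        return -g * v + delayed;                // feed-forward path
    }
};
```

Fed a single impulse with D = 3 and g = 0.5, it outputs -0.5 immediately, 0.75 (that is 1 - g*g) three samples later, then 0.375 three samples after that. The echoes decay while the magnitude response stays flat, which is what makes it an all-pass and a useful diffusion building block in reverbs.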

-

RE: scriptAudioWaveForm and updating contents (posted in General Questions)

@d-healey said in scriptAudioWaveForm and updating contents:

@Orvillain Did you try,

AudioWaveform.set("processorId", value);?

Yeah, I did, and it does update it according to a follow-up AudioWaveform.get("processorId") call, but the UI component doesn't seem to update and still shows data from the previous processorId. When I compile the script, the UI updates once... but not on subsequent calls to the set method.

I figured I needed to call some kind of update() function after setting the processorId, but no luck so far.

-

RE: Custom browser - custom preset file format??? (posted in General Questions)

Woohoo! I've now got a custom FX chain file format too, at least in prototype form.

I think I'll write a tutorial on all of this once I've properly sussed it all out, because it is quite powerful, and no one else really seems to have dived into it.

-

RE: How to make a basic distortion Effect (posted in General Questions)

Start off with a tanh function.

Then put a high-pass filter before it. Set the drive range going into the tanh quite high; 1-10 would do. Then tweak the high-pass cutoff and notice how it impacts the distortion.

Now add a low-pass filter after the tanh. Tweak the cutoff and notice how it cleans up the high frequencies.

Start from there.
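The chain above (high-pass, then tanh, then low-pass) might look something like this as a minimal C++ sketch. It assumes simple one-pole filters for both stages and reads "range on the tanh" as the drive gain applied before it; the struct names, cutoffs and default drive are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// One-pole filter: lowpass by exponential smoothing, highpass as input minus lowpass.
struct OnePole
{
    float a = 0.0f; // smoothing coefficient
    float z = 0.0f; // filter state

    void setCutoff(float hz, float sampleRate)
    {
        a = std::exp(-2.0f * 3.14159265f * hz / sampleRate);
    }
    float lowpass(float x)
    {
        z = (1.0f - a) * x + a * z;
        return z;
    }
    float highpass(float x) { return x - lowpass(x); }
};

struct SimpleDistortion
{
    OnePole preHP, postLP;
    float drive = 5.0f; // somewhere in the suggested 1-10 range

    void prepare(float sampleRate, float hpHz, float lpHz)
    {
        assert(sampleRate > 0.0f);
        preHP.setCutoff(hpHz, sampleRate);
        postLP.setCutoff(lpHz, sampleRate);
    }

    float process(float x)
    {
        const float thinned = preHP.highpass(x);         // less low end into the shaper
        const float shaped = std::tanh(drive * thinned); // soft clip, bounded to (-1, 1)
        return postLP.lowpass(shaped);                   // tame the new high harmonics
    }
};
```

Raising hpHz thins out what hits the tanh, so bass-heavy material distorts less muddily; lowering lpHz smooths off the harmonics the tanh generates.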

-

RE: CSS in production plugins? (posted in General Questions)

Hmmmm, I started using it for the modulation matrix controller, but there were things I wanted to do that I couldn't, so I wrote my own script panel matrix controller using LAF instead.

LAF is, I guess, a subset of the JUCE graphics API, so I'm more comfortable with LAF. CSS hasn't really fully clicked for me yet, if I'm honest. I've never needed it for anything else I've ever done, because none of it was web-based and it was all Python, C++, JUCE, Lua, and C#.

-

RE: Third party C++ log to console (posted in General Questions)

Don't forget to add this to HISE's preprocessor definitions too:

JUCE_LOG_ASSERTIONS=1

-

RE: Setting UI values from a combobox - doesn't fall through to linked processorId+parameterId ?? (posted in General Questions)

Oh for n00b sake... I just need to call .changed(), it would seem.

-

Extend range of the spectral analyser (posted in Feature Requests)

Could we get this extended to cover 20 Hz to 24 kHz? Unless I'm missing something, there doesn't seem to be a way to do it. I've been writing some custom Butterworth filters, and evaluating them and diagnosing issues with cascading has proven a bit tricky in a few instances.

-

I think I've figured out a better way to create parameters for a node (posted in C++ Development)

Boy, I hope I don't butcher this! But it is working for me, so I thought I would write it up.

Context: I am working on a BBD Delay model, as previously shown. There are around 90 parameters that I want, because I want my UI to set each parameter to a specific value, according to a chip or pedal voicing dictionary.

Now the classic method of doing this:

```cpp
void createParameters(ParameterDataList& data)
{
    {
        // Delay Time
        parameter::data p("Delay Time", { 10.0, 5000.0, 1.0 });
        registerCallback<0>(p);
        p.setDefaultValue(500.0);
        data.add(std::move(p));
    }
}
```

And then this:

```cpp
template <int P> void setParameter(double v)
{
    if (P == 0) { effect.setDelayMs(v); return; }
}
```

Honestly, I just found it unreadable when trying to scale this out to 90+ parameters.

Now, I knew about lambda functions from Python, and I have heard many C++ developers talk about them over the years, so with a bit of ChatGPT research, this is what I've come up with.

Everything to follow happens inside the project namespace, and inside the node's main struct.

First we need a parameter spec. When we create a parameter we need to specify several things: name, min value, max value, optionally the step size, and a default value. So we write a little struct to capture these things:

```cpp
struct ParamSpec
{
    int index; // the code doesn't use this - it is purely for readability later on
    const char* name;
    float min, max, step, def;
};
```

Then we need a set of parameters that never change: static and constant. Like this:

```cpp
inline static constexpr ParamSpec kSpecs[] = {
    // idx, name,        min,    max,      step,  def
    { 0, "Delay Time", 20.0f, 10000.0f, 1.0f,  500.0f },
    { 1, "Mix",        0.0f,  1.0f,     0.01f, 0.5f   },
    { 2, "Feedback",   0.0f,  2.0f,     0.01f, 0.5f   },
};
```

This essentially creates an array of ParamSpec objects. We've filled in the data required, and the whole thing is assigned to kSpecs. The compiler knows this will never change, because it is a static constexpr object.

Then immediately after we just want the number of parameters:

```cpp
inline static constexpr std::size_t kNumParams = std::size(kSpecs);
```

From what I can gather, some compilers need this total size number to be static as well.

From here, we write a function that uses std::index_sequence to essentially unroll the array and create the objects we need. Like this:

```cpp
template <std::size_t... Is>
void createParametersImpl(ParameterDataList& data, std::index_sequence<Is...>)
{
    (
        [&]
        {
            const auto& s = kSpecs[Is];
            parameter::data p(s.name, { s.min, s.max, s.step });
            this->template registerCallback<Is>(p);
            p.setDefaultValue(s.def);
            data.add(std::move(p));
        }(),
        ...
    );
}
```

The way std::index_sequence works in this context: std::make_index_sequence<N> hands the function the indices 0, 1, ..., N-1 as the pack Is, and the fold expression over the comma operator stamps out one call of the lambda per index, in order. You can think of it as a compile-time for loop over our parameter list.

Then Is is the index of whichever entry in the array we're looking at, and s is a reference to that specific entry. Because it is a struct with named members (min/max/step/def), we can access them very easily, for example s.def.

So we have the index, each individual parameter value, and the name. The this->template prefix on the registerCallback line makes it explicit that we're calling the node's own member function template registerCallback (through the lambda's captured this), so the compiler knows where that function lives.

So after putting that in place, our createParameters method becomes:

```cpp
void createParameters(ParameterDataList& data)
{
    createParametersImpl(data, std::make_index_sequence<std::size(kSpecs)>{});
}
```

You can see that it runs our actual parameter creation method, which iterates over our kSpecs array and fires off the expected parameter creation and callback registration lines. This means we can maintain one single source of truth for our parameters. The loop is unrolled at compile time; the parameters themselves are created and registered when HISE calls createParameters(), so they will appear on the node when you load it up in HISE, in the order specified in the kSpecs list.

So to round that up: do something like this inside your node's struct and you will have an easier time creating parameters:

```cpp
struct ParamSpec
{
    int index; // purely for readability; not used by code
    const char* name;
    float min, max, step, def;
};

inline static constexpr ParamSpec kSpecs[] = {
    // idx, name,        min,    max,      step,  def
    { 0, "Delay Time", 20.0f, 10000.0f, 1.0f,  500.0f },
    { 1, "Mix",        0.0f,  1.0f,     0.01f, 0.5f   },
    { 2, "Feedback",   0.0f,  2.0f,     0.01f, 0.5f   },
};

inline static constexpr std::size_t kNumParams = std::size(kSpecs);

template <std::size_t... Is>
void createParametersImpl(ParameterDataList& data, std::index_sequence<Is...>)
{
    (
        [&]
        {
            const auto& s = kSpecs[Is];
            parameter::data p(s.name, { s.min, s.max, s.step }); // use {min,max} if step unsupported
            this->template registerCallback<Is>(p);
            p.setDefaultValue(s.def);
            data.add(std::move(p));
        }(),
        ...
    );
}

void createParameters(ParameterDataList& data)
{
    createParametersImpl(data, std::make_index_sequence<kNumParams>{});
}
```

But what if we wanted to link each parameter to a particular callback inside the DSP class? That's where lambdas come in.
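To see the index_sequence trick in isolation, here is a HISE-free sketch. kDemoSpecs, registerDemo and buildLog are hypothetical stand-ins for kSpecs, registerCallback and createParameters; the comma fold expands the lambda once per index, left to right, so the log comes out in array order.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <string>
#include <utility>
#include <vector>

struct Spec { const char* name; float def; };

inline constexpr Spec kDemoSpecs[] = {
    { "Delay Time", 500.0f },
    { "Mix", 0.5f },
    { "Feedback", 0.5f },
};

// Stand-in for registerCallback<I>: records which index was registered.
template <std::size_t I>
void registerDemo(std::vector<std::string>& log)
{
    log.push_back(std::to_string(I) + ":" + kDemoSpecs[I].name);
}

template <std::size_t... Is>
void buildAll(std::vector<std::string>& log, std::index_sequence<Is...>)
{
    // Expands to: lambda for 0, then lambda for 1, then lambda for 2.
    ( [&] { registerDemo<Is>(log); }(), ... );
}

std::vector<std::string> buildLog()
{
    std::vector<std::string> log;
    buildAll(log, std::make_index_sequence<std::size(kDemoSpecs)>{});
    return log;
}
```

Calling buildLog() returns the three strings "0:Delay Time", "1:Mix" and "2:Feedback", in that order.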

The very first step is to declare a Self type alias at the top of our node's struct. In my case that is BBDProcessor, but in your case it would be something else, and the name needs to match your node's name. For instance:

```cpp
namespace project
{
using namespace juce;
using namespace hise;
using namespace scriptnode;

template <int NV> struct BBDProcessor: public data::base
{
    using Self = BBDProcessor<NV>;

    SNEX_NODE(BBDProcessor);

    struct MetadataClass
    {
        SN_NODE_ID("BBDProcessor");
    };

    // ... rest of the node ...
};
}
```

This line:

```cpp
using Self = BBDProcessor<NV>;
```

gives us an alias for our node class. To be clear, you should use whatever your node is called, not BBDProcessor. I spell out the <NV> to be explicit; strictly speaking, inside the class template a plain BBDProcessor also names the current instantiation.

After that, we can add an extra line to our ParamSpec:

```cpp
struct ParamSpec
{
    int index;
    const char* name;
    float min, max, step, def;
    void (*apply)(Self&, double);
};
```

The new member is:

```cpp
void (*apply)(Self&, double);
```

Breaking this down bit by bit:

- void = the function doesn't return anything

- (*apply) = apply is a pointer to a function

- (Self&, double) = the function takes two arguments: a reference to Self, which will ultimately be the node's class itself, and a double value.

This effectively gives our ParamSpec a function-pointer member that will later point at another function. But it needs to know WHICH function we want to run.

So we move on to updating our actual parameter specifications, by adding a lambda to each of the entries. In my case, I have a particular object (effect) that is an instance of a DSP class I wrote (my main BBD delay effect), so this is quite easy. It looks like this:

```cpp
inline static constexpr ParamSpec kSpecs[] = {
    // idx, name, min, max, step, def, apply
    { 0, "Delay Time", 20.0f, 10000.0f, 1.0f, 500.0f,
        +[](Self& s, double v){ s.effect.setDelayMs(asFloat(v)); } },
    { 1, "Feedback", 0.0f, 2.0f, 0.01f, 0.5f,
        +[](Self& s, double v){ s.effect.setFeedback(asFloat(v)); } },
    { 2, "Mix", 0.0f, 1.0f, 0.01f, 0.5f,
        +[](Self& s, double v){ s.effect.setMix(asFloat(v)); } },
};
```

Taking the first lambda on its own (written with a plain static_cast):

```cpp
+[](Self& s, double v){ s.effect.setDelayMs(static_cast<float>(v)); }
```

The [](Self& s, double v){ ... } part is a lambda: a small unnamed function written inline. Because it captures nothing, it can be converted to an ordinary function pointer, and the leading + forces that conversion, so each entry stores a plain function pointer that matches the apply member.

Now for this to work, we do need some helper functions:

```cpp
static float asFloat(double v) { return static_cast<float>(v); }
static int asInt(double v) { return (int)std::lround(v); }
static bool asBool(double v) { return v >= 0.5; }
```

These convert the double coming from our parameter into whatever kind of value our actual setter function expects. I'm not using any bools yet, but you can see I am casting to float for delayMs, feedback, and mix.

Finally, our setParameter method becomes:

```cpp
template <int P> void setParameter(double v)
{
    static_assert(P < (int)std::size(kSpecs), "Parameter index out of range");
    kSpecs[P].apply(*this, v);
}
```

This is what makes any given parameter index trigger its associated function; in my case, the delay's setter functions.

So that's it. After a bit of study, I think I finally get this.

- ParamSpec holds everything that the node needs to know about each parameter.

- createParametersImpl() uses a compile-time index_sequence to build and register them.

- setParameter() uses the apply function pointer to trigger the associated function that we set up in the array of parameters.

- Adding new parameters is now just a case of adding a single line to kSpecs.

No management of indexes. No messing around later on with multi-line editing and moving lines around when you want to change parameter order. No needing to delete parameters from the creation and value set stages separately. It is all contained within one place.
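Putting the whole pattern together outside HISE, here is a self-contained mock that should compile as plain C++17. FakeDelay, FakeParam and FakeParameterList are hypothetical stand-ins for the BBD effect and HISE's ParameterDataList, and registerCallback is left out because it lives in HISE; the kSpecs/apply/setParameter wiring follows the structure described above, so it can be a quick way to sanity-check that part in isolation.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins for HISE's parameter list types.
struct FakeParam { std::string name; float min, max, step, def; };
using FakeParameterList = std::vector<FakeParam>;

// Hypothetical DSP class standing in for the BBD effect.
struct FakeDelay
{
    float delayMs = 0.0f, feedback = 0.0f, mix = 0.0f;
    void setDelayMs(float v) { delayMs = v; }
    void setFeedback(float v) { feedback = v; }
    void setMix(float v) { mix = v; }
};

template <int NV>
struct DemoNode
{
    using Self = DemoNode<NV>;
    FakeDelay effect;

    static float asFloat(double v) { return static_cast<float>(v); }

    struct ParamSpec
    {
        int index; // readability only
        const char* name;
        float min, max, step, def;
        void (*apply)(Self&, double);
    };

    inline static constexpr ParamSpec kSpecs[] = {
        { 0, "Delay Time", 20.0f, 10000.0f, 1.0f, 500.0f,
            +[](Self& s, double v) { s.effect.setDelayMs(Self::asFloat(v)); } },
        { 1, "Feedback", 0.0f, 2.0f, 0.01f, 0.5f,
            +[](Self& s, double v) { s.effect.setFeedback(Self::asFloat(v)); } },
        { 2, "Mix", 0.0f, 1.0f, 0.01f, 0.5f,
            +[](Self& s, double v) { s.effect.setMix(Self::asFloat(v)); } },
    };
    inline static constexpr std::size_t kNumParams = std::size(kSpecs);

    template <int P>
    void setParameter(double v)
    {
        static_assert(P >= 0 && P < static_cast<int>(kNumParams), "Parameter index out of range");
        kSpecs[P].apply(*this, v); // jump straight to the setter stored for index P
    }

    // Same fold as createParametersImpl, minus registerCallback.
    template <std::size_t... Is>
    void createParametersImpl(FakeParameterList& data, std::index_sequence<Is...>)
    {
        ( [&] {
            const auto& s = kSpecs[Is];
            data.push_back({ s.name, s.min, s.max, s.step, s.def });
        }(), ... );
    }

    void createParameters(FakeParameterList& data)
    {
        createParametersImpl(data, std::make_index_sequence<kNumParams>{});
    }
};
```

For example, after DemoNode<1> node; node.setParameter<0>(750.0); the value node.effect.delayMs is 750, and node.createParameters(list) fills list in kSpecs order.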

I probably wouldn't have gotten here without ChatGPT tbh. Being able to say "hey my dude, I'm a complete idiot and have an IQ of 76, please explain it better before I unsubscribe" without getting developer derision (common!) is quite a powerful thing.

Anyway, jokes aside, hope this helps!

-

RE: Ring Buffer design (posted in C++ Development)

Here is a super contrived example. If you compile this as a node and add it to your ScriptNode layout, it will delay the right channel by 2 seconds.

```cpp
#pragma once

#include <JuceHeader.h>

namespace project
{
using namespace juce;
using namespace hise;
using namespace scriptnode;

static inline float cubic4(float s0, float s1, float s2, float s3, float f)
{
    float a0 = -0.5f * s0 + 1.5f * s1 - 1.5f * s2 + 0.5f * s3;
    float a1 = s0 - 2.5f * s1 + 2.0f * s2 - 0.5f * s3;
    float a2 = -0.5f * s0 + 0.5f * s2;
    float a3 = s1;
    return ((a0 * f + a1) * f + a2) * f + a3;
}

struct RingDelay
{
    // holds all of the sample data
    std::vector<float> buf;
    // write position
    int w = 0;
    // bitmask for fast wrap-around (which is why the buffer must always be power-of-2)
    int mask = 0;

    // sets the size of the buffer according to requested size in samples
    // will set the buffer to a power-of-two size above the requested capacity
    // for example - minCapacitySamples==3000, n==4096
    void setSize(int minCapacitySamples)
    {
        // start off with n=1
        int n = 1;
        // keep doubling n until it is greater or equal to minCapacitySamples
        while (n < minCapacitySamples) { n <<= 1; }
        // set the size of the buffer to n, and fill with zeros
        buf.assign(n, 0.0f);
        // mask is now n-1; 4095 in the example
        mask = n - 1;
        // reset the write pointer to zero
        w = 0;
    }

    // push a sample value into the buffer at the write position
    // this will always wrap around the capacity length because of the mask value being set prior
    void push(float x)
    {
        buf[w] = x;         // set the current write position to the sample value
        w = (w + 1) & mask; // increment w by 1. Use bitwise 'AND' operator to perform a wrap
    }

    // Performs a cubic interpolation read operation on the buffer at the specified sample position
    // This can be a fractional number
    float readCubic(float delaySamples) const
    {
        // w is the next write position. Read back from that according to delaySamples.
        float rp = static_cast<float>(w) - delaySamples;
        // wrap this read pointer into the range 0-size, where size=mask+1
        rp -= std::floor(rp / static_cast<float>(mask + 1)) * static_cast<float>(mask + 1);
        // the floor of rp - the integer part
        int i1 = static_cast<int>(rp);
        // the decimal part
        float f = rp - static_cast<float>(i1);
        // grab the neighbours around i1
        int i0 = (i1 - 1) & mask;
        int i2 = (i1 + 1) & mask;
        int i3 = (i1 + 2) & mask;
        // feed those numbers into the cubic interpolator
        return cubic4(buf[i0], buf[i1 & mask], buf[i2], buf[i3], f);
    }

    // returns the size of the buffer
    int size() const { return mask + 1; }

    // clear the buffer without changing the size and reset the write pointer
    void clear()
    {
        std::fill(buf.begin(), buf.end(), 0.0f);
        w = 0;
    }
};

// ==========================| The node class with all required callbacks |==========================

template <int NV> struct RingBufferExp: public data::base
{
    // Metadata Definitions ------------------------------------------------------------------------
    SNEX_NODE(RingBufferExp);

    struct MetadataClass
    {
        SN_NODE_ID("RingBufferExp");
    };

    // set to true if you want this node to have a modulation dragger
    static constexpr bool isModNode() { return false; };
    static constexpr bool isPolyphonic() { return NV > 1; };
    // set to true if your node produces a tail
    static constexpr bool hasTail() { return false; };
    // set to true if your node doesn't generate sound from silence and can be suspended when the input signal is silent
    static constexpr bool isSuspendedOnSilence() { return false; };
    // Undefine this method if you want a dynamic channel count
    static constexpr int getFixChannelAmount() { return 2; };

    // Define the amount and types of external data slots you want to use
    static constexpr int NumTables = 0;
    static constexpr int NumSliderPacks = 0;
    static constexpr int NumAudioFiles = 0;
    static constexpr int NumFilters = 0;
    static constexpr int NumDisplayBuffers = 0;

    // components
    double sampleRate = 48000.0;
    RingDelay rd;

    // Helpers
    // Converts milliseconds to samples and returns as an integer
    static inline int msToSamplesInt(float ms, double fs) { return (int)std::ceil(ms * fs / 1000.0); }
    // Converts milliseconds to samples and returns as a float
    static inline float msToSamplesFloat(float ms, double fs) { return (float)(ms * fs / 1000.0); }

    // Scriptnode Callbacks ------------------------------------------------------------------------
    void prepare(PrepareSpecs specs)
    {
        // update the sampleRate constant to be the current sample rate
        sampleRate = specs.sampleRate;
        // we arbitrarily invent a pad guard number to add to the length of the ring delay
        const int guard = 128;
        // set the size using msToSamplesInt because setSize expects an integer
        rd.setSize(msToSamplesInt(10000.0f, sampleRate) + guard);
    }

    void reset() {}

    void handleHiseEvent(HiseEvent& e) {}

    template <typename T> void process(T& data)
    {
        static constexpr int NumChannels = getFixChannelAmount();
        // Cast the dynamic channel data to a fixed channel amount
        auto& fixData = data.template as<ProcessData<NumChannels>>();
        // Create a FrameProcessor object
        auto fd = fixData.toFrameData();
        while (fd.next())
        {
            // Forward to frame processing
            processFrame(fd.toSpan());
        }
    }

    template <typename T> void processFrame(T& data)
    {
        // Separate out the stereo input
        float L = data[0];
        float R = data[1];
        // Calculate the delay time we want in samples - expects float
        float dTime = msToSamplesFloat(2000.0f, sampleRate);
        // read the value according to the delay time we just set up
        float dR = rd.readCubic(dTime);
        // Push just the right channel into our ring delay
        // remember, this will auto-increment the write pointer
        rd.push(R);
        // left channel - Write the original audio back to the datastream
        data[0] = L;
        // Right channel - Write the delayed audio back to the datastream
        data[1] = dR;
    }

    int handleModulation(double& value) { return 0; }

    void setExternalData(const ExternalData& data, int index) {}

    // Parameter Functions -------------------------------------------------------------------------
    template <int P> void setParameter(double v)
    {
        if (P == 0)
        {
            // This will be executed for MyParameter (see below)
            jassertfalse;
        }
    }

    void createParameters(ParameterDataList& data)
    {
        {
            // Create a parameter like this
            parameter::data p("MyParameter", { 0.0, 1.0 });
            // The template parameter (<0>) will be forwarded to setParameter<P>()
            registerCallback<0>(p);
            p.setDefaultValue(0.5);
            data.add(std::move(p));
        }
    }
};
}
```