@David-Healey I can't open it right now, but is this related?

https://forum.hise.audio/post/86690

@Christoph-Hart It looks like it comes with a built in host that auto-reloads the plugin as you iterate, and some templates.

But with that license I wouldn't really touch it.

Noncompete

Any purpose is a permitted purpose, except for providing any product that competes with the software or any product the licensor or any of its affiliates provides using the software.

How is that defined? Who defines what is considered a competing product?

lmao

@d-healey yes, their app for codesigning and contacting support to give you access.

But this at least means you can bundle it.

VST SDK 3.8.0 adds MIDI 2.0 and changes the license to MIT.

Interesting move, this will basically cement it as THE format for audio plugins. I mean, it kind of has been already, but until now CLAP was the only free multiplatform format, and it was an easy target given how you could just wrap it into VST3/AU. I wonder how much of an impact CLAP had on their decision?

AAX 2.8.0 is also available as GPL3, which is a development as of this year.

ASIO SDK is now also available as GPL3.

@coupe70 don't use the svf node, use the svf_eq node.

Neutral Q is 0.7071 (rounded), yes: the square root of 0.5.

I recommend you try Butterworth filters in Faust, since they let you set the order and their cutoff frequency is always the point where the response is at -3 dB.
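If you want to convince yourself of the -3 dB thing, here's a quick numeric check. It's plain Python rather than Faust, and it only evaluates the textbook magnitude formula for an ideal analog Butterworth lowpass, so treat it as a sanity check and not as what any particular node does internally. The function name is made up for illustration.

```python
import math

def butterworth_gain_db(freq_hz, cutoff_hz, order):
    """Gain in dB of an ideal analog Butterworth lowpass of the given order."""
    ratio = freq_hz / cutoff_hz
    magnitude = 1.0 / math.sqrt(1.0 + ratio ** (2 * order))
    return 20.0 * math.log10(magnitude)

# Q of a 2nd-order Butterworth section: sqrt(0.5), i.e. about 0.7071
NEUTRAL_Q = math.sqrt(0.5)

# At the cutoff, the gain is the same no matter which order you pick
for order in (1, 2, 4, 8):
    print(order, round(butterworth_gain_db(1000.0, 1000.0, order), 2))
```

The order only changes how steeply the response falls off above the cutoff, not where the -3 dB point sits.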

@iamlamprey Faust library search is hot trash. Your best bet is to go through all the libraries a couple of times and sorta learn what's there. Also, use the GitHub version for proper search (you can also see how things were implemented). That's kind of where all their dev resources seem to be going.

https://faustlibraries.grame.fr/libs/analyzers/#anpitchtracker

import("stdfaust.lib");

pt = an.pitchTracker(1, 0.01) : si.smoo : si.smoo;

process = pt, _;

@iamlamprey you'll want to try different orders and time windows in the Faust call.

Also, at certain settings it might be accurate but off by a fixed amount, like a few cents flat or sharp at lower frequencies. You can easily test this and write a correction function.
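A rough sketch of what such a correction could look like, in Python just to show the math (you'd port it to wherever you read the tracker value). It assumes the error really is a constant offset in cents over the range you care about. The function names and the 5-cent figure are made up for illustration.

```python
import math

def measure_offset_cents(measured_hz, reference_hz):
    """How far the tracker reading is from a known test tone, in cents."""
    return 1200.0 * math.log2(measured_hz / reference_hz)

def correct_pitch(measured_hz, offset_cents):
    """Remove a known, fixed offset (in cents) from a tracker reading."""
    return measured_hz / (2.0 ** (offset_cents / 1200.0))

# Feed in a 110 Hz test tone; pretend the tracker reads 5 cents sharp
reading = 110.0 * 2.0 ** (5.0 / 1200.0)
offset = measure_offset_cents(reading, 110.0)
print(round(offset, 3), round(correct_pitch(reading, offset), 3))
```

If the offset turns out to vary with frequency, you'd measure it at a few test tones and interpolate instead of using a single constant.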

Hiyya go bud. I even piped it to the UI for ya.

HiseSnippet 2302.3oc0Y8zaabbEeVIsRVLx1IEFE43.cIzsxzjzxRtwPPx5eNDMRlPTQH.8fvvcGRNPK2Y6NCkDaQ.JPujS8dtkC8Pu1dqeH5o9EweCZeuY1k6tjTRVJVAsxFRbm27dyu88meuYFxuTyC849mpBEQQbMg3LayXoGWojwDmRGOLhSbVxs0vPcuc5wDgjF6Rbdj6ALklGSsCs8vHlRw8INNy9Vb.mEmiX948atMKfE5wyFhPNQJ73esnuPmMZys9shff8Y97iE8yM6U2pgmLbGYfb.fmYcqRhXdmw5xOjgSaFWxWwT8HN+JWO+10eg+pd0Wk+adAuypuZ8Z0WuSs5ub8UqyVa0UAY70WiUm3L+d9BsLtklo4JhybaK8G1pm7hP6BbhPIZGvwGpQZAqrc38kA93qHNJYmdh.+QNJE31lKmaaVqa6ItGH7EiFOy88oFAzLMx6.cloH7ls.7pkGdUyAuo.ImbPZNKj9L2VdwhHclDDOehaiPHZ1gAwo7PwNWxLudN2cjvLB0U5yNiueL7vHMJuV0pqPge8zWWpDDqTZ54rXpU28+1ZzMnlrmJc4585zg6oKurUHEjtLp0yeN8sbMU2iSC45KjwmQi4c3wbHwAEdbOghB+2LAHtSkcn8k9CB3zdvrnxPin.dGM8WWXVCgzFZGQ.OGxRWhMn6E1UDxQfsqJ5P6vGktvEP4JzkUlmNsykShYoOuHf2WD5aD0XWZ6gzdxy4whvtilMNGPjGKDvdvPZaN0qGKrK2GmNGh+oy9x9A36QNWShtf6nMSI7XAf9V6p4egYvjW+DOwf9sgB0K5I75AfLJlqf3nZDT.UDfmAqQgO.CyziVob9L7UbiTAnGq7xLe+w8DQrXXkQhgL2QlQxjtgwfnYdW7NwbHQuYprxKeBKX.GrLsTAcC4Afdo4gvh2DGp7xFIP.BRBwTPyiUTbcSnZSejb.3J4k6LHzSKjgk69zR+wRKZrnPCdjMxPEBGyZWFLyhkVrKZEKySY6eTU.2nlih6VwOlcww7K0kMFZE38WnPS70RHlrsbPnupb0mBy86PmzHXgzaw6.Qs1.OVFtLvxXBH73eDOBQ+APIJP0UdLinYwVyTtV0pUedc70N0PP9zgPhv6RrXouqDcbQc5LUYnmMVFD.lcZhsK30nXYal1JP3B7gilHP8TjOy8p4yxS25YCz4lnLrQnP+tHd3UQBSRxNfO8MM1koYHIXxXv7h3wZABAmc4mCsfrThK5tKWclVFYla+HYHZAm4zFoOLkvDc8DAPKtfqILPtDdUbvVTCS+.YR9VfoG4oX5hz+XSxDAfOr.mKxqFpD5g4ah9QqmvGJD+L2lXV8zw3LSAifm99.iIcRenqsyQF.myc+u8mu1lkbSamYV7kbyZMLsFlNeuaVABv3BTL7ikMCXCKqX8iB3GAXbEZ6.o2YsD+A9jUUQVHrMNixXqAHiScWJ9l+C1qT65CJVlztGvzwBL0+vA8aAThd7cRPGVELCVCZetZZUQKfOy7v+A9IQXM7YmDg0RElq7MoYrIbj7YHWy56yZDSdSPf7BrnUjjkBImlwZJCFF0SFJ7vvhcFoH8M8AxYcJbyRw2NMbjhbXmkGyDAnQaMPA7N9uKrEXGy1Ycl8DdrxrlK3VsB7OB5WgkpO1eEUxYlCgtbPLXelGDCF1jo6gkVH0FjGCcb7FkOW30JeJkcetEDiVMcGwVjlrpl8Ca1.eJUmMaFeBpUmwtPMTmfRgtTDS6NDpFahSOEx1oJUdvtxQpAxDuCw7J4DOMHmS7sAxnHnF4xTB7cEpHnzY6A.Efs.svH36EbDlKSyh1CJD7gcpaTOMyZz1KP8mezSDmGbfHLwKjQkc.6xwF6S2pklGYSNxnkacF+BqeI+nnqadjTkSv8GbrzXKH2F1DnYM+D2uA.bGHCvmL1579M2k2gMHPOAlHWOnWcqI.82+uGGz+3O7C+yIA8V+49+qMsGCX+X9ue.jeO71g7+lK
4G+caMUj+mNaqa.4ueyIc2Uukt6kLHmdDVG+wyo+9MI29LE.l2xLk2BTy+OHnAx4qDzK31rGbNiaOpIj6X98GCTOuYeW2FP+j4N4QO6e72uZWcN1xG41AeAt7TSa8h7kedNN+ByZRZywLy8IWuSNzufKPoJzjqrUkU7j3MUwaEC+z51rfqsa3MzrbZHvJ4ZPvm6hJAGQKVNnaOyQUEgQCz+zbfE5pjOU3AVDU61+tLRy6y397E75PlsZr39R116VISygakbMXrrq4dM7kTglZ1np8FNvCwqkTE6b34nAaN9axLi8lrn6NAvp2XTM4RtliaqigSOaNoP9Y+XbWo4BJI5jd5r7QqVWfl4XVLbh8whdOzsuLTVGueStrni4WXcL4kOo6on92PfblB0EWUBEVjw8TEAyicYgrfgJdEqvoWahRtAL3LQsYxx9X2HN6rSGDpfDIvvEV9mX8EEmwjXXbabCXYtBXIay4ihTlCzGx8ra22Pf.YGNttLe+L+WCyA0sw+668Sl2iYgQA+TI29vewKrZJdG67uFexeAx2i31KWC7i3UzYtxSkDuaSyMH1M4x2.KkdidlSAZuNOXplSNToToTEXAf1pzqrCsp458f5RPHzbjGC4UTUfTCC3SksQRKyMCdc4qPZTVG7R2W66vZfOh663MCzRH.kdwFFaYBPEq+7B3r3wYIMAVqjo1VxH4ZBtq4hgDeY3WnoW.jiLH1xhMgE3z1P+ozq90v2RMjdlDzOrfvC9+lfPw5oOvG1VJOqOybIA2oq66mkqznOyKVdpm8VZv.zCLi.88BMYIK5d.9LsF47wcJ8E9hS87JZpITr9cUwWbWUb06phu7tp3Z2UEW+tp3qtYEwuBuDxCrUDTY0bOaOBm8BYsCRXQ3IsOrWMHlqNZjP9k6KBr6fC+NpfBa7OVj7Ksax54Ya2ohuJJ8ZwIN+UW76YxvKXSZons9RZ17wuXF6WthOE1sSO5W0n0d3lwpWs9KeV0W8r5unjnejLVWdYk12rbUBDsMeoNQZ5F.6eEi4N1R7Tt1JzpUpV6ozujpDUT8kxrOg5XuuP76TQuB8zWWhP9uhjUkT.

It's pretty accurate and ridiculously fast.

@Adam_G just a simple webview.set("saveInPreset", true) call.

@Christoph-Hart I think he's missing the CPU percentages on individual nodes (which were quite nice, frankly).

@ustk said in g.dropShadowFromPath got funky on macOS between May 30 and July 02:

@aaronventure Yeah noticed the same, I needed to replace the area that was taking the object bounds with the path bounds in the whole project:

When was the Rectangle API introduced? Might come from this...

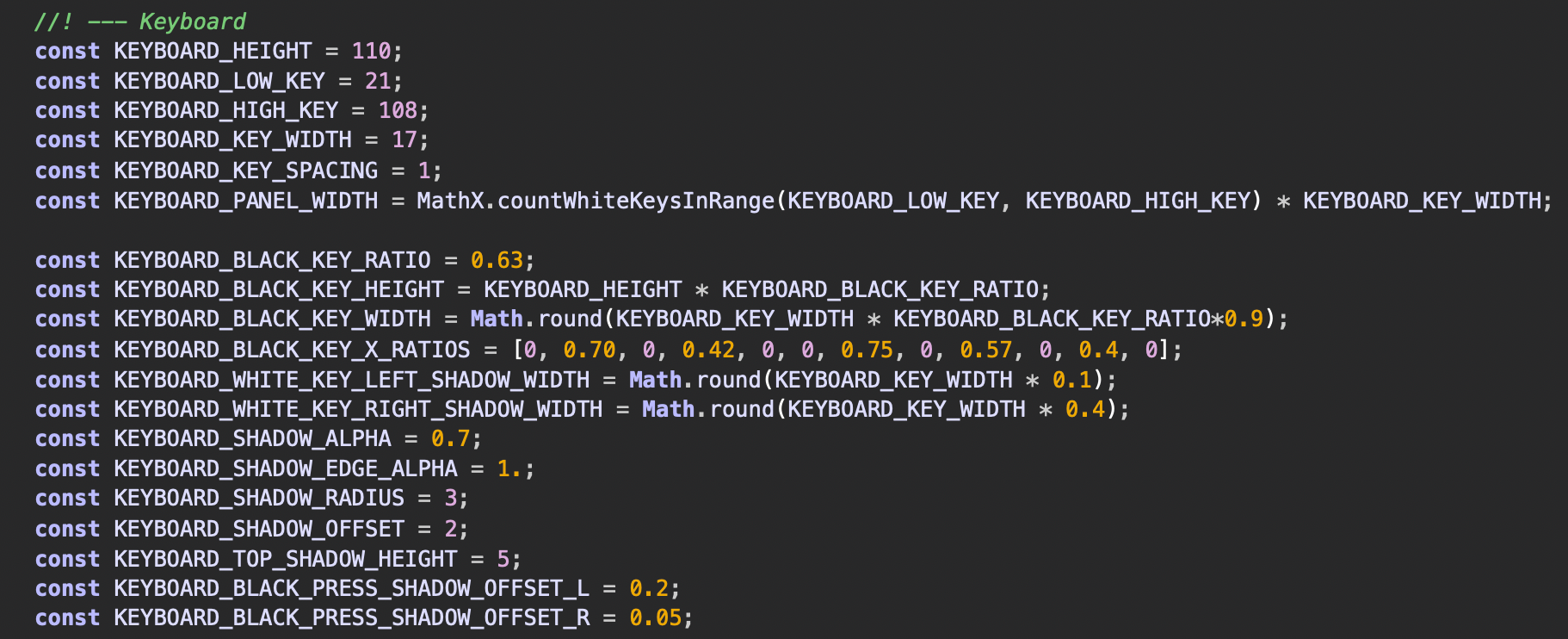

I can't make any sense of this, I didn't use getLocalBounds, I use calculated dimensions based on keyboard parameters... How are you getting the correct positions for the shadow?

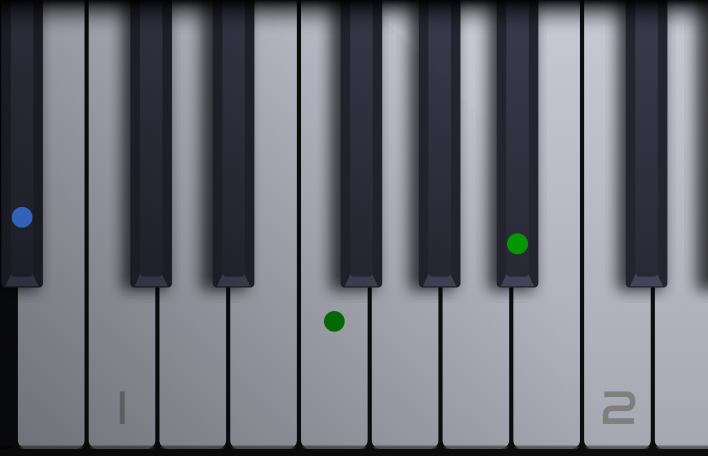

@d-healey Thanks! It's even got conditional shadows!

It's also fully configurable!

This was my "alright, let's get a full hang of this Paint Routine stuff" moment when I was learning HISE. I think it took me the better part of a week.

With a bit softer shadows

There was a whole month of broken macOS commits, and the first one that compiled is cac7a45f4cd70a2f0ad96771ea8df1c10bfd2de7 from July 02.

However this broke dropShadowFromPath for my PaintRoutined custom keyboard and I can't figure out why. It also looks like this on the latest commit.

Like the path is a tiny dot or something.

The last one that has it working correctly is the last working macOS commit before that, on May 30.

a20873ea7a8c00b251ddae7df8d6e3007f8c5f71

This is what it should look like

@Christoph-Hart Any idea what could've broken it?

@Christoph-Hart okay that's a build command, but I still have to use a single global build for the interface.

There's a bunch of stuff HISE stores in its app data folder, like its source code path, Faust path, etc. It would be nice to be able to just go to the HISE submodule directory in the project directory and launch the version of HISE that I know will work for that specific project.

For example, I opened an older project now and dropShadowFromPath is broken in it. Now I gotta find the last commit where it worked.

@dannytaurus As long as you don't need to compile scriptnode networks first or do anything funny with hardcoded networks, you can do it.

You need the HISE binary to initiate the calls and the build order, create the JUCE project and generate all the code.

You need the HISE source because it contains the JUCE source, which is what's actually used to build your plugin.

So pull the repo, build HISE, pull your project, build your project.

If you need to install IPP first, check this https://github.com/sudara/pamplejuce/blob/main/.github/workflows/build_and_test.yml, though this uses CMake.

HISE is a bit funny here. The annoyance is how it uses the appdata folder, which makes having multiple HISE builds annoying or sometimes impossible. It should store its preferences and stuff right next to the binary, I don't think anyone building from source ever moves the binary. This would make it possible to add the HISE repo as a git submodule, effectively letting you lock projects to a certain commit, which would be great as HISE starts making more and more breaking changes (a welcome development).

That way every project could have its own HISE build, which would definitely be better than rebuilding HISE with different flags for every project: you have to keep track of which flags you're using for which project, and hopping between projects becomes silly. In fact, I'll tag the boss and hopefully get his take on this: @Christoph-Hart

If that were to happen, you'd just pull your repo, initialize submodules, build the HISE submodule, call the binary to build the project, without having to worry about breaking changes or manually updating your build script every time you validate a new HISE commit as safe.
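Roughly, the flow would be something like the sketch below. It only prints a plan and runs nothing. The repo URL and the `HISE` submodule folder name are hypothetical, and the two build steps are left as placeholders on purpose, since the exact commands depend on platform and on how HISE ends up handling its settings.

```python
def build_plan(project_repo_url, project_dir, hise_submodule="HISE"):
    """Return the steps of the proposed workflow in order (nothing is executed)."""
    hise_dir = f"{project_dir}/{hise_submodule}"
    return [
        ("pull your repo", ["git", "clone", project_repo_url, project_dir]),
        ("initialize submodules",
         ["git", "-C", project_dir, "submodule", "update", "--init", "--recursive"]),
        # None = platform-specific step, deliberately not spelled out here
        (f"build the HISE submodule in {hise_dir}", None),
        ("call that HISE binary to build the project", None),
    ]

for label, argv in build_plan("https://example.com/me/my-plugin.git", "my-plugin"):
    print(label, "->", " ".join(argv) if argv else "(platform-specific)")
```

The point is that the HISE commit is pinned by the submodule, so the script never needs to know which commit is the "safe" one.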

@d-healey Hold Shift while typing to amplify my anger.