Simple ML neural network

-

@aaronventure No idea, but I would guess the performance is pretty much the same, since they do the same calculations.

-

I think the only issue with conversion will be that most NAM models use the WaveNet architecture, which RTNeural does not currently support.

NAM has been tuned specifically around this architecture, which is one of the reasons it's currently considered the most realistic.

I'm not sure whether this poses a barrier to conversion, or whether some of the realism NAM has achieved would be lost in conversion. As stated, I think real-time performance will likely be the same. NAM currently operates with zero latency, and from memory I think RTNeural does too, so it really comes down to CPU utilisation.
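As a rough illustration of why CPU utilisation, rather than latency, is the real differentiator: a sample-by-sample recurrent model produces one output sample per input sample, so its cost is the arithmetic done per sample. The Python sketch below estimates that for a single-layer LSTM followed by a dense output layer. The sizes (1 input, 40 hidden units, 1 output, 48 kHz) are example assumptions, and the count ignores activations, bias adds and the elementwise state update, so treat it as a lower bound rather than a benchmark of either library.

```python
def lstm_macs_per_sample(input_size: int, hidden_size: int, output_size: int) -> int:
    """Approximate multiply-accumulates for one time step of a single-layer LSTM
    followed by a dense output layer. Activations, bias adds and the elementwise
    cell-state update are ignored, so this is a lower bound."""
    # Four gates (input, forget, cell, output), each needing an input-to-hidden
    # and a hidden-to-hidden matrix-vector product.
    gate_macs = 4 * hidden_size * (input_size + hidden_size)
    # Dense layer mapping the hidden state to the output sample(s).
    dense_macs = hidden_size * output_size
    return gate_macs + dense_macs


# Example sizes (assumed): mono in/out, 40 hidden units, 48 kHz sample rate.
per_sample = lstm_macs_per_sample(input_size=1, hidden_size=40, output_size=1)
per_second = per_sample * 48_000
print(per_sample, per_second)  # 6600 316800000
```

Doubling the hidden size roughly quadruples the gate cost, which is why model size matters far more for CPU load than which runtime executes it.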

-

I think maybe we just need an effective NAM-to-RTNeural converter.

Seems like RTNeural already has everything needed to handle WaveNet (conv1d, ReLU, softmax). I remember Jatin talking on Discord about his plan to make examples for several different architectures, including WaveNet.
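WaveNet-style models, which is what most NAM captures use, are built from stacks of dilated causal 1-D convolutions, which is why conv1d support is the key ingredient. Here is a minimal single-channel Python sketch of that building block; it is not taken from RTNeural or NAM, and real WaveNet layers are multi-channel and add activations, 1x1 mixing convolutions and residual/skip connections, all omitted here. It also shows why such a model needs no lookahead, and how fast the receptive field grows with dilation.

```python
from typing import List


def dilated_causal_conv1d(x: List[float], kernel: List[float], dilation: int) -> List[float]:
    """Single-channel dilated causal convolution:
        y[n] = sum_k kernel[k] * x[n - k * dilation],  with x[m] = 0 for m < 0.
    kernel[0] weights the current sample (a convention chosen for this sketch).
    Each output depends only on current and past inputs, so no lookahead is needed."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            m = n - k * dilation
            if m >= 0:  # samples before the start of the signal count as zero
                acc += w * x[m]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: List[int]) -> int:
    """Input samples that can influence one output of a stack of dilated causal convs."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Two stacked layers with 2-tap all-ones kernels and dilations 1 and 2:
impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
layer1 = dilated_causal_conv1d(impulse, [1.0, 1.0], dilation=1)
layer2 = dilated_causal_conv1d(layer1, [1.0, 1.0], dilation=2)
print(layer2)                      # [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print(receptive_field(2, [1, 2]))  # 4
```

The impulse response of the two-layer stack spans exactly the 4 samples the receptive-field formula predicts; doubling the dilation per layer is what lets a WaveNet see a long stretch of past audio with few layers.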

Also check this thread:

https://forum.mod.audio/t/new-neural-lv2-plugin-from-aida-dsp-based-extensively-on-existing-neuralpi-reduced-to-the-bone/8047/142?page=8

(Sorry for posting a link to another forum...)

-

@Christoph-Hart said in Simple ML neural network:

@ccbl And can't you just convert the models to work in RTNeural? In the end it's just running maths and I'm not super interested in adding the same thing but in 5 variations.

I understand why you feel that way. There's no point chasing your tail every time a new NN comes on the scene. What I would say, though, is that since NAM was released I've seen at least four different companies bring out their own NN capture tech, and not a single one of them has taken off: combined, they have fewer captures publicly available than one comprehensive NAM pack on ToneHunt.

Multiple commercial companies have incorporated NAM, including Melda, Amplifire, Two Notes, Audio Assault and Solemn Tones, amongst others. AnalogueX now uses NAM to capture preamps and compressors, and Past to Future does this too.

Essentially, NAM has become the industry-standard format for capturing analogue gear with neural networks. So personally I think it would be worth implementing the NAM core tech, as there's a far higher chance people will want to use a NAM model than any of the other tech.

Beggars can't be choosers, though. I don't mean this to come across as a demand so much as an attempt to present a compelling case. I don't have the skills to implement this myself, and I understand a person only has so much time and willpower to dedicate to a project like this.

So I'm highly grateful for any way to incorporate NAM into signal processing in HISE, whether that involves conversion or native processing.

-

@ccbl said in Simple ML neural network:

I have to agree. I have spent a lot of time comparing and contrasting the various amp capture platforms over the years, and NAM is clearly the most accurate and most realistic sounding.

NAM uses the Eigen library for its core DSP playback module.

@Christoph-Hart I would imagine you'd need to integrate Eigen into HISE if you wanted to support NAM playback?

-

@Christoph-Hart Regarding the current RTNeural implementation.

GuitarML's Automated-GuitarAmpModelling LSTM trainer allows you to create parameterised models (https://github.com/GuitarML/Automated-GuitarAmpModelling).

With the NeuralNetwork module, are you able to address these input parameters? I'm interested in a single input parameter and want to connect a knob to it during model inference.
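As far as I understand the trainer (an assumption worth checking against its code), a parameterised model simply has a larger input_size: the knob value is fed to the network alongside every audio sample as an extra input channel. Connecting a knob then amounts to building per-sample input frames like the ones in this plain-Python sketch. The function name, the 0..1 normalisation and the per-block ramp are illustrative choices, not part of HISE's or GuitarML's API.

```python
from typing import List, Sequence


def build_conditioned_frames(audio: Sequence[float], knob_prev: float, knob_now: float) -> List[List[float]]:
    """Build per-sample input frames [sample, knob] for a model trained with
    input_size = 2 (one audio channel plus one conditioning parameter).

    The knob is ramped linearly from its previous value to its new value across
    the block, so a sudden knob jump does not produce a step in the conditioning
    input. Knob values are assumed to be normalised to 0..1, as during training."""
    for k in (knob_prev, knob_now):
        if not 0.0 <= k <= 1.0:
            raise ValueError("knob values should be normalised to 0..1")
    n = len(audio)
    frames = []
    for i, sample in enumerate(audio):
        # (i + 1) / n reaches exactly 1.0 on the last sample, so the block ends at knob_now.
        t = (i + 1) / n
        frames.append([float(sample), knob_prev + (knob_now - knob_prev) * t])
    return frames


frames = build_conditioned_frames([0.1, 0.2, 0.3, 0.4], knob_prev=0.0, knob_now=1.0)
print(frames)  # [[0.1, 0.25], [0.2, 0.5], [0.3, 0.75], [0.4, 1.0]]
```

The ramp is optional; holding the knob constant per block also works, but the conditioning input then jumps at block boundaries.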

-

@ccbl I believe so. When I first looked into RTNeural it appeared to be more robust than NAM, though I get the appeal of being compatible with NAM captures.

According to their GitHub:

RTNeural is currently being used by several audio plugins and other projects:

4000DB-NeuralAmp: Neural emulation of the pre-amp section from the Akai 4000DB tape machine.

AIDA-X: An AU/CLAP/LV2/VST2/VST3 audio plugin that loads RTNeural models and cabinet IRs.

BYOD: A guitar distortion plugin containing several machine learning-based effects.

Chow Centaur: A guitar pedal emulation plugin, using a real-time recurrent neural network.

Chow Tape Model: An analog tape emulation, using a real-time dense neural network.

cppTimbreID: An audio feature extraction library.

guitarix: A guitarix effects suite, including neural network amplifier models.

GuitarML: GuitarML plugins use machine learning to model guitar amplifiers and effects.

MLTerror15: Deeply learned simulator for the Orange Tiny Terror with Recurrent Neural Networks.

NeuralNote: An audio-to-MIDI transcription plugin using Spotify's basic-pitch model.

rt-neural-lv2: A headless lv2 plugin using RTNeural to model guitar pedals and amplifiers.

Tone Empire plugins:

LVL - 01: An A.I./M.L.-based compressor effect.

TM700: A machine learning tape emulation effect.

Neural Q: An analog emulation 2-band EQ, using recurrent neural networks.

ToobAmp: Guitar effect plugins for the Raspberry Pi.

-

I'm trying to build a simple LSTM processor project using the scriptnode math function. Where should the JSON files be stored? Or do you paste in the weights directly?

-

-

@Christoph-Hart OK, got it. Let's say I wanted to cascade two different NNs.

I'm guessing these parts will have to be modified somehow to differentiate between the networks. Would it just be a case of `obj1` and `obj2`?

```
// This contains the JSON data from `Scripts/Python/sine_model.json`
const var obj = { /* ... */ };

// load the sine wave approximator network
nn.loadPytorchModel(obj);
```

I'm pretty sure this is how you would set up the first part?

```
// We need to create & initialise the network via script, the scriptnode node will then reference
// the existing network
const var nn1 = Engine.createNeuralNetwork("NN1");

// The second network needs its own variable name: declaring `const var nn` twice would clash
const var nn2 = Engine.createNeuralNetwork("NN2");
```

-

@Christoph-Hart For now I decided to try making a very simple audio processor using scriptnode. I followed the tutorial project, adding the weights for the math.neural module to pick up. It does see the object, but when I select it HISE crashes: a complete crash to desktop.

It's an LSTM network generated by an RTNeural-based project (the GuitarML Automated-GuitarAmpModelling pipeline).

Not sure what the issue is.

-

Attached the model in case someone wants to look at it.

-

@ccbl Just did the same thing here. Used this script and it crashes HISE when I select the model:

https://github.com/GuitarML/Automated-GuitarAmpModelling

It's my first attempt, so I'm obviously doing something wrong. @Christoph-Hart is there a guide to train a model with audio files?

Also, is there a way to implement parameterized models?

-

The Neural node is a promising feature.

It would be great to see an example guitar amp model (the most common use case right now) with parameters.

-

@orange Yeah, being able to model amps would actually be magnificent. I have a project in the pipeline where it would save literal gigabytes (no need to deliver processed signals).

There was also talk about this in another thread; I think it was the NAM (Neural Amp Modeler) one.

-

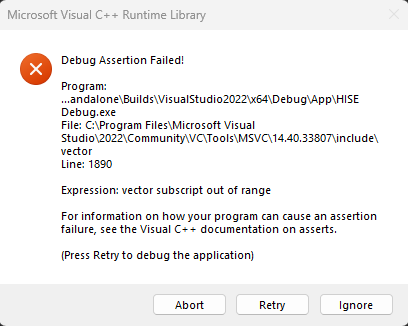

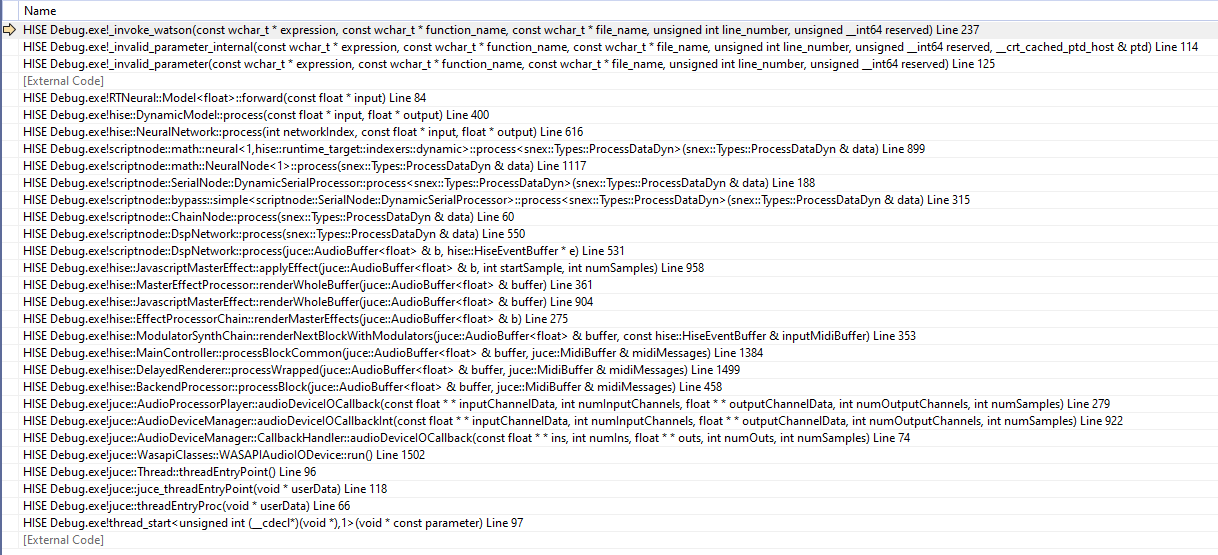

When I debug, I get a failure here:

Is this caused by a key mismatch in the JSON?

@Christoph-Hart Would you be able to take a quick look at this and see where it's muffed?

-

@Dan-Korneff Sure I'll check if I find some time tomorrow, but I suspect there is a channel mismatch between how many channels you feed it and how many it expects.
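To test the channel-mismatch theory outside HISE, one option is to compare the model's declared input size with the number of channels the node will receive. The GuitarML trainer's JSON export carries an input_size field inside its model_data block; this sketch assumes that layout and is only a pre-flight check, not how HISE itself validates models.

```python
import json
from typing import Any, Dict


def check_channel_count(model: Dict[str, Any], channels_fed: int) -> None:
    """Raise ValueError if the channel count fed to the network does not match
    the model's declared input_size (read from its model_data block)."""
    expected = model["model_data"]["input_size"]
    if channels_fed != expected:
        raise ValueError(
            f"model expects {expected} input channel(s) but is fed {channels_fed}"
        )


# A mono model (input_size 1), e.g. as loaded with json.load() from the exported file.
model = json.loads('{"model_data": {"input_size": 1, "output_size": 1}}')
check_channel_count(model, channels_fed=1)  # OK

try:
    check_channel_count(model, channels_fed=2)  # e.g. a stereo signal path
except ValueError as err:
    print(err)  # model expects 1 input channel(s) but is fed 2
```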

-

@Christoph-Hart That would be awesome.

The JSON keys look like this:

```json
{
  "model_data": {
    "model": "SimpleRNN",
    "input_size": 1,
    "skip": 1,
    "output_size": 1,
    "unit_type": "LSTM",
    "num_layers": 1,
    "hidden_size": 40,
    "bias_fl": true
  },
  "state_dict": {
    "rec.weight_ih_l0": ...
```

-

@Christoph-Hart Don't wanna load you up with too many requests, but it would be super rad if we could get this model working in scriptnode.

-

@Dan-Korneff The model just doesn't load (and the crash happens because there are no layers to process, so it's a trivial out-of-bounds error).
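Since the crash comes from a network that ends up with no layers, one way to catch this before it reaches HISE is to check that the JSON actually contains the LSTM weights its model_data promises. This sketch uses PyTorch's nn.LSTM shape conventions (weight_ih_l{k}: [4*hidden, input], weight_hh_l{k}: [4*hidden, hidden], biases: [4*hidden]) and the `rec.` key prefix seen in the GuitarML export. It does not reproduce HISE's loader, and a model trained with bias=False would legitimately lack the bias keys.

```python
from typing import Any, Dict, List


def shape(value: Any) -> List[int]:
    """Shape of a nested list, e.g. a tensor serialised to JSON (assumes a regular array)."""
    dims = []
    while isinstance(value, list):
        dims.append(len(value))
        value = value[0] if value else None
    return dims


def validate_lstm(model: Dict[str, Any]) -> List[str]:
    """List problems with the LSTM weights; an empty list means every expected key exists
    with the shape PyTorch's nn.LSTM (default bias=True) produces for the sizes in model_data."""
    md = model["model_data"]
    state = model.get("state_dict", {})
    hidden = md["hidden_size"]
    problems = []
    for layer in range(md["num_layers"]):
        # Layer 0 sees the model input; deeper layers see the previous layer's hidden state.
        layer_in = md["input_size"] if layer == 0 else hidden
        expected = {
            f"rec.weight_ih_l{layer}": [4 * hidden, layer_in],
            f"rec.weight_hh_l{layer}": [4 * hidden, hidden],
            f"rec.bias_ih_l{layer}": [4 * hidden],
            f"rec.bias_hh_l{layer}": [4 * hidden],
        }
        for key, want in expected.items():
            if key not in state:
                problems.append(f"missing {key}")
            elif shape(state[key]) != want:
                problems.append(f"{key}: expected {want}, got {shape(state[key])}")
    return problems


# Tiny synthetic model: 1 input, 2 hidden units, 1 layer (all weights zero).
tiny = {
    "model_data": {"input_size": 1, "hidden_size": 2, "num_layers": 1},
    "state_dict": {
        "rec.weight_ih_l0": [[0.0]] * 8,
        "rec.weight_hh_l0": [[0.0, 0.0]] * 8,
        "rec.bias_ih_l0": [0.0] * 8,
    },
}
print(validate_lstm(tiny))  # ['missing rec.bias_hh_l0']
```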

Is it a PyTorch or TensorFlow model?
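This can usually be answered from the file itself. A minimal heuristic sketch, assuming PyTorch exports such as the GuitarML trainer's keep their weights under a top-level `state_dict` key, and that RTNeural's own TensorFlow/Keras export script writes `in_shape` and `layers` keys (from memory; verify against that script before relying on it).

```python
import json
from typing import Any, Dict


def guess_export_format(model: Dict[str, Any]) -> str:
    """Heuristic guess at which toolchain exported a model JSON file."""
    if "state_dict" in model:
        # PyTorch-style export: weights stored under parameter names like "rec.weight_ih_l0".
        return "pytorch"
    if "layers" in model and "in_shape" in model:
        # Assumed layout of RTNeural's TensorFlow/Keras export script.
        return "tensorflow"
    return "unknown"


posted = json.loads('{"model_data": {"unit_type": "LSTM"}, "state_dict": {"rec.weight_ih_l0": []}}')
print(guess_export_format(posted))  # pytorch
```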