Simple ML neural network

-

I realise we've already hashed this discussion out, and people might be sick of it. But IMO the NAM trainer has a really intuitive GUI that supports different network sizes at various sample rates. It also has a well-defined output model format, which seems to be a sticking point with RTNeural.

Given that the core C++ library already exists (https://github.com/sdatkinson/NeuralAmpModelerCore), might it be easier to implement that instead, since, for the most part, people want to use ML networks for non-linear processing?

-

I'll just leave these here:

https://intro2ddsp.github.io/intro.html

https://github.com/aisynth/diffmoog

https://archives.ismir.net/ismir2021/paper/000053.pdf

https://csteinmetz1.github.io/tcn-audio-effects/

I'm convinced parameter inference and TCNs will be the future of audio plug-ins. CNNs will take over circuit modelling as the next fad. Training NNs so we can map weights to synth parameters and recreate any sound source will take over. Just have a look at Synplant 2.
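To make the TCN point a bit more concrete: a TCN is built from stacked dilated causal convolutions, and its receptive field (how many past samples one output can "see") grows with the dilation of each layer. The pure-Python sketch below is illustrative only and not taken from the linked papers; the function names, kernel weights and the doubling dilation schedule are assumptions.

```python
from typing import List, Sequence


def dilated_causal_conv(x: Sequence[float], kernel: Sequence[float], dilation: int) -> List[float]:
    """y[n] = sum_j kernel[j] * x[n - j*dilation], treating samples before n=0 as zero."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = n - j * dilation
            if idx >= 0:  # causal: only the current and past samples contribute
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input samples that influence one output of a stack of dilated causal convs."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


if __name__ == "__main__":
    # An impulse shows where each kernel tap lands in time.
    print(dilated_causal_conv([1.0, 0.0, 0.0, 0.0, 0.0], [1.0, 0.5], dilation=2))  # → [1.0, 0.0, 0.5, 0.0, 0.0]
    # Kernel size 3 with dilations 1, 2, 4, ..., 512 (10 layers, a common doubling schedule).
    print(receptive_field(3, [2 ** i for i in range(10)]))  # → 2047
```

Doubling the dilation per layer is what lets a fairly shallow stack cover thousands of samples of history, which is why TCNs are attractive for audio.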

Having access to trained PyTorch models in HISE would be awesome. A few VST devs are using ONNX Runtime in the cloud to host the weights, and the plug-in calls back to the server to run inference.

P

-

@ccbl For instance, I plan to use HISE to create plugins where a NN models various non-linear components such as transformers, tubes, FET preamps, etc., with regular DSP in between. I'm just a hobbyist who plans to release everything as FOSS, though, so I'll have to wait and see what you much cleverer folks come up with.
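A rough sketch of that kind of chain: linear DSP before and after a per-sample non-linear stage. Here `math.tanh` is only a stand-in for a trained network, and the one-pole filters and coefficient values are illustrative assumptions, not taken from any project mentioned in this thread. A real tube or transformer model may also need memory (e.g. a recurrent network), which this sketch omits.

```python
import math
from typing import Callable, List, Sequence


def one_pole_lowpass(x: Sequence[float], coeff: float) -> List[float]:
    """Simple one-pole lowpass: y[n] = y[n-1] + coeff * (x[n] - y[n-1]), with 0 < coeff <= 1."""
    y, out = 0.0, []
    for s in x:
        y += coeff * (s - y)
        out.append(y)
    return out


def process_chain(
    x: Sequence[float],
    nonlinearity: Callable[[float], float],
    pre_coeff: float = 0.5,
    post_coeff: float = 0.5,
) -> List[float]:
    """Linear filter -> per-sample non-linear stage (e.g. a trained model) -> linear filter."""
    pre = one_pole_lowpass(x, pre_coeff)
    shaped = [nonlinearity(s) for s in pre]
    return one_pole_lowpass(shaped, post_coeff)


if __name__ == "__main__":
    signal = [3.0 * math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
    out = process_chain(signal, math.tanh)  # tanh stands in for the NN stage
    print(max(out))
```

Passing the non-linear stage in as a callable keeps the "NN in the middle, regular DSP around it" structure independent of whichever inference engine ends up running the model.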

-

I realise we already hashed this discussion out, and people might be sick of it. But IMO the NAM trainer has a really intuitive GUI trainer which allows for different sized networks, at various sample rates.

The current state is that I won't add another neural network engine to HISE because of the bloat, but will instead try to add NAM file compatibility to RTNeural, as suggested in this issue:

https://github.com/jatinchowdhury18/RTNeural/issues/143

There seems to be some motivation among other developers to make this happen, but it's not my strongest area of expertise and I have a few other priorities at the moment.

-

@Dan-Korneff said in Simple ML neural network:

@Christoph-Hart it should be a PyTorch model.

This is the training script I'm testing with. It uses RTNeural as a backend as well:

https://github.com/GuitarML/Automated-GuitarAmpModelling

Have you tried running it through this script?

https://github.com/AidaDSP/Automated-GuitarAmpModelling/blob/next/simple_modelToKeras.py

-

@Christoph-Hart I just read the thread and found the link. Gonna give this a go first thing this morning.

-

@Christoph-Hart

https://github.com/AidaDSP/Automated-GuitarAmpModelling

There is already a script in Automated-GuitarAmpModelling named modelToKeras:

"a way to export models generated here in a format compatible with RTNeural"

I'll give both a try and report back.

I see there is also a script to convert a NAM dataset:

"NAM Dataset

Since I've received a bunch of request from the NAM community, I leave some infos here. Since the NAM models at the moment are not compatible with the inference engine used by rt-neural-generic (RTNeural), you can't use them with our plugin directly. But you can still use our training script and the NAM Dataset, so that you will be able to use the amplifiers that you are using on NAM with our plugin. In the end, training is 10mins on a Laptop with CUDA."

-

The learning curve is steep on this one.

I've written a config script, prepared the audio files into a dataset, and trained the model with dist_model_recnet.py.

The model_utils.py script complained about how output_shape was being accessed, so I made a little tweak there.

In the end, it was able to convert the model to Keras, but the layer dimensions are being exported as null.
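While debugging a pipeline like this, it can help to measure how close the trained model's output is to the target recording, independently of the export step. A common metric in amp-modelling work is the error-to-signal ratio (ESR); the plain version is sketched below in pure Python. Whether dist_model_recnet.py uses exactly this formulation (it may add pre-emphasis filtering or a DC term) is not confirmed here, so treat it as an illustrative assumption.

```python
from typing import Sequence


def error_to_signal_ratio(target: Sequence[float], prediction: Sequence[float], eps: float = 1e-10) -> float:
    """ESR = sum((target - prediction)^2) / sum(target^2); 0.0 means a perfect match."""
    if len(target) != len(prediction):
        raise ValueError("target and prediction must have the same length")
    error_energy = sum((t - p) ** 2 for t, p in zip(target, prediction))
    signal_energy = sum(t * t for t in target)
    return error_energy / (signal_energy + eps)  # eps avoids division by zero on silent targets


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.5, 0.0]
    print(error_to_signal_ratio(target, target))               # → 0.0 (perfect prediction)
    print(error_to_signal_ratio(target, [0.0] * len(target)))  # close to 1.0 (predicting silence)
```

Because the error is normalised by the target's energy, an ESR near 1.0 means the model is doing little better than outputting silence, regardless of the recording's level.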

Time for more beer and research.

-

@Dan-Korneff Yeah, I tried to write the WaveNet layer for RTNeural today by porting it over from the NAM codebase, but I don't know either framework (or anything about writing inference engines, lol), so it wasn't very fruitful.

Let me know if you get somewhere then we'll try to load it into the HISE neural engine.

-

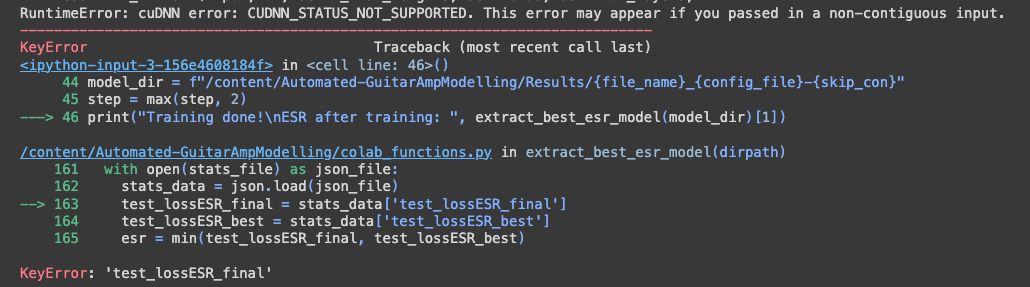

@Dan-Korneff Did you have any luck running the Colab notebook for training? I upload input.wav and target.wav and get an error.

-

I don't know if it helps, but Karanyi Sounds (who use HISE) also do machine learning with the HISE neural network and Colab; they shared this photo today.

As I see it, if this neural network implementation is done well, it will be really popular among developers. Lots of people would love to use this technology in their software.

-

@Christoph-Hart It seems like so many of these projects are abandoned, even relatively new ones. Still researching.

-

@aaronventure The tech is evolving so fast that the scripts on Google Colab break just about every time there is an update. I got the Google Colab script to work for Proteus, but moved to local processing because my GPU is better than the ones Google provides.

-

@resonant Invite them to the conversation

-

@Dan-Korneff In this instance, "dataset" refers to the input/output audio pairs that NAM uses for its training, not the resulting model. Basically, they're saying they added support in their training script for detecting the NAM audio pairs and training an Aida-X model based on them.
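To illustrate what "dataset" means here: the dry input and the amp's output are aligned sample-for-sample and cut into equal-length training segments. The sketch below is generic and hypothetical; the function names, segment length and validation fraction are illustrative, not what the Aida-X or NAM scripts actually use, and reading the WAV files is left out (plain lists of samples are assumed).

```python
from typing import List, Sequence, Tuple

Segment = Tuple[List[float], List[float]]


def make_segments(inp: Sequence[float], target: Sequence[float], seg_len: int) -> List[Segment]:
    """Cut aligned input/target recordings into equal-length (input, target) pairs."""
    if len(inp) != len(target):
        raise ValueError("input and target recordings must be the same length (check alignment)")
    if seg_len <= 0:
        raise ValueError("seg_len must be positive")
    n_full = len(inp) // seg_len  # the trailing partial segment is dropped
    return [
        (list(inp[i * seg_len:(i + 1) * seg_len]), list(target[i * seg_len:(i + 1) * seg_len]))
        for i in range(n_full)
    ]


def split_train_val(segments: List[Segment], val_fraction: float = 0.2) -> Tuple[List[Segment], List[Segment]]:
    """Hold out the last part of the recording for validation (no shuffling, so it's reproducible)."""
    n_val = int(len(segments) * val_fraction)
    cut = len(segments) - n_val
    return segments[:cut], segments[cut:]


if __name__ == "__main__":
    dry = [float(n) for n in range(10)]
    wet = [0.5 * float(n) for n in range(10)]
    segs = make_segments(dry, wet, seg_len=4)
    print(len(segs))  # → 2 (the last 2 samples don't fill a segment)
```

The point is that the dataset is just the paired audio, so the same input/target recordings can feed either trainer.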

-

@resonant that's awesome. Would love to pick their brains and see if we can get it up and running for the rest of us.

-

Here's where I'm at with the process:

https://gitlab.korneff.co/publicgroup/hise-neuralnetworktrainingscripts

I used the scripts from https://github.com/AidaDSP/Automated-GuitarAmpModelling/tree/aidadsp_devel as a starting point.

This will allow you to create a dataset from your input/output audio files, train the model from the dataset, and then convert the model to Keras so you can use it in RTNeural.

@Christoph-Hart The final model is making HISE crash. I thought I was doing something wrong because some of the values for "shape" are null, but I've downloaded other files created with the source script and they are null in the same places.

Here's one for example:

JMP Low Input.json

It's possible that the script isn't formatting the JSON properly, but I don't know what a correct model looks like to compare it against.
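One way to check whether those nulls matter. As far as I know, in the Keras-style JSON that RTNeural's export scripts produce, each entry in "layers" has "type", "activation", "shape" and "weights", and "shape" comes from the Keras output shape, where the batch (and, for recurrent layers, time) dimensions are undefined and serialize as null; only the last entry is the actual layer size. The sketch below assumes that layout (check the field names against the RTNeural version in use), and the example JSON is made up with weights omitted.

```python
import json
from typing import List, Tuple


def inspect_layers(model_json: str) -> List[Tuple[str, object, bool]]:
    """Return (type, size, ok) per layer, where size is the last entry of "shape".

    Assumes an RTNeural/Keras-style layout: {"in_shape": [...], "layers": [{"type", "activation",
    "shape", "weights"}, ...]}. Leading nulls in "shape" are expected; a null or missing last
    entry would mean the layer size itself was not exported.
    """
    model = json.loads(model_json)
    report = []
    for layer in model.get("layers", []):
        shape = layer.get("shape") or []
        size = shape[-1] if shape else None
        ok = isinstance(size, int) and size > 0
        report.append((layer.get("type", "?"), size, ok))
    return report


if __name__ == "__main__":
    example = """{
      "in_shape": [null, null, 1],
      "layers": [
        {"type": "lstm", "activation": "", "shape": [null, null, 16], "weights": []},
        {"type": "dense", "activation": "", "shape": [null, null, 1], "weights": []}
      ]
    }"""
    for layer_type, size, ok in inspect_layers(example):
        print(layer_type, size, "OK" if ok else "PROBLEM: layer size missing")
```

If every layer reports a numeric last entry, the nulls are probably just the undefined batch/time dimensions and the crash is more likely caused by something else, such as an unsupported layer type.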

-

@Dan-Korneff That's the JSON from the sine generator example:

```json
{
  "layers": "SineModel(\n (network): Sequential(\n (0): Linear(in_features=1, out_features=8, bias=True)\n (1): Tanh()\n (2): Linear(in_features=8, out_features=4, bias=True)\n (3): Tanh()\n (4): Linear(in_features=4, out_features=1, bias=True)\n )\n)",
  "weights": {
    "network.0.weight": [ [ 1.046385407447815 ], [ 1.417808413505554 ], [ 0.9530450105667114 ], [ 1.118412375450134 ], [ -2.003693819046021 ], [ 1.485351920127869 ], [ -1.323277235031128 ], [ -1.482439756393433 ] ],
    "network.0.bias": [ -0.4485535621643066, -1.284180760383606, 1.995141625404358, -1.036547422409058, 0.2926304638385773, 0.4770179986953735, 0.3244697153568268, 0.4108103811740875 ],
    "network.2.weight": [
      [ -1.791297316551208, -0.3762974143028259, -0.3934035897254944, 0.1596113294363022, 0.5510663390159607, -1.115586280822754, 0.678738534450531, 1.327430963516235 ],
      [ 0.3413433432579041, 1.86607301235199, -0.217528447508812, 2.568317174911499, 0.3797312676906586, -0.1846907883882523, 0.04422684758901596, -0.0883311927318573 ],
      [ 0.3113365173339844, 0.8516308069229126, -0.6042391061782837, 0.9669480919837952, -1.354665994644165, 0.1234097927808762, -1.171357274055481, -0.9616029858589172 ],
      [ -0.5073869824409485, -0.7385743856430054, 0.3118444979190826, -0.9642266035079956, 1.899434208869934, -0.1497718989849091, 1.684132099151611, 0.895214855670929 ]
    ],
    "network.2.bias": [ -0.6971003413200378, 0.3228396475315094, -0.6209602355957031, 0.1816271394491196 ],
    "network.4.weight": [ [ -0.9233435988426208, 1.108147859573364, -0.8966623544692993, 0.394584596157074 ] ],
    "network.4.bias": [ 0.06727132201194763 ]
  }
}
```

So apparently it doesn't resolve the Python code that defines the layer composition, but instead uses a single string that is parsed. That's the output of a custom Python script I wrote and ran on a model built with TorchStudio, but if your model uses "the standard" format, I'll make sure that it loads correctly too, as these differences look like syntactic sugar to me.
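As a rough illustration of how this string-plus-weights format can be consumed, here is a pure-Python sketch that pulls the layer list out of the "layers" string with a regular expression and runs a forward pass using the "weights" dict. It assumes PyTorch's `nn.Linear` convention (weight stored as [out_features][in_features], which matches the 8x1, 4x8 and 1x4 matrices in the sine example), the `network.<index>.weight`/`.bias` key naming from that example, and only Linear/Tanh/ReLU/Sigmoid modules. The function names are hypothetical, and this is not how HISE's own loader works.

```python
import json
import math
import re
from typing import Dict, List, Tuple

# Matches lines such as "(0): Linear(in_features=1, out_features=8, bias=True)" or "(1): Tanh()".
LAYER_RE = re.compile(r"\((\d+)\):\s*(Linear|Tanh|ReLU|Sigmoid)\((.*?)\)")

ACTIVATIONS = {
    "Tanh": math.tanh,
    "ReLU": lambda v: max(0.0, v),
    "Sigmoid": lambda v: 1.0 / (1.0 + math.exp(-v)),
}


def parse_layers(layer_string: str) -> List[Tuple[int, str]]:
    """Extract (index, module name) pairs from the printed PyTorch module string."""
    return [(int(idx), kind) for idx, kind, _ in LAYER_RE.findall(layer_string)]


def forward(model: Dict, x: List[float], prefix: str = "network") -> List[float]:
    """Run one input vector through the parsed layers (assumes the nn.Linear weight layout)."""
    weights = model["weights"]
    v = list(x)
    for idx, kind in parse_layers(model["layers"]):
        if kind == "Linear":
            w = weights[f"{prefix}.{idx}.weight"]  # shape [out_features][in_features]
            b = weights.get(f"{prefix}.{idx}.bias", [0.0] * len(w))  # bias=False -> no key
            v = [sum(wi * xi for wi, xi in zip(row, v)) + bi for row, bi in zip(w, b)]
        else:
            v = [ACTIVATIONS[kind](s) for s in v]
    return v


if __name__ == "__main__":
    # Tiny made-up model in the same format: Linear(1->1) -> Tanh -> Linear(1->1).
    tiny = json.dumps({
        "layers": "Tiny(\n (network): Sequential(\n (0): Linear(in_features=1, out_features=1, bias=True)\n"
                  " (1): Tanh()\n (2): Linear(in_features=1, out_features=1, bias=True)\n )\n)",
        "weights": {
            "network.0.weight": [[2.0]], "network.0.bias": [0.5],
            "network.2.weight": [[1.0]], "network.2.bias": [0.0],
        },
    })
    print(forward(json.loads(tiny), [1.0]))  # tanh(2.0 * 1.0 + 0.5), roughly 0.9866
```

Loading the sine example the same way would feed a single sample through 1 -> 8 -> 4 -> 1 neurons; the string only has to be parsed once, after which inference is plain matrix-vector work.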

-

Here's the link to the tutorial again:

https://github.com/christophhart/hise_tutorial/tree/master/NeuralNetworkExample/Scripts/python

But I realized your example looks more or less like the TensorFlow model in this directory. Which method are you using to load the model?

-

@Dan-Korneff said in Simple ML neural network:

@resonant Invite them to the conversation

@resonant

Yeah, maybe. They asked me to get re-involved with them on some ML stuff as they were a bit stuck... it didn't go anywhere, so they may well still be stuck, or they found someone else to do the coding for them... your call.