Things I've Learned about ML Audio Modelling

-

While learning how to use HISE to create analogue-modelled FX plugins (starting with amp sims, and later preamps, compressors, etc.), I've done some preliminary work.

TL;DR: LSTM training has a large amount of variance, so you should repeat the training until you get a good model. With recent code developments by Jatin Chowdhury and Mike Oliphant, I think it is worth integrating solutions that allow NAM models, which have clear advantages in certain scenarios, while LSTM might be preferred in others.

Given that HISE currently implements RT-Neural, which can load Keras models, I started there. The easiest-to-use platform for generating models was the AIDA-X pipeline [https://github.com/AidaDSP/Automated-GuitarAmpModelling/tree/aidadsp_devel]. It uses Docker; through talking to them on Discord I was advised that, unlike what is currently documented on their GitHub, you should use the aidadsp/pytorch:next branch, not aidadsp/pytorch:latest.

I found the process of training LSTM models a little frustrating compared to my previous experience with NAM. The training process is very erratic, with big jumps in error rate and an extremely variable end-point ESR, even when using the exact same input/output pair and model settings. I should mention, though, that I was attempting to train a high gain 5150-type amp here, one of the harder things to model.
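For anyone unfamiliar with the metric, ESR (error-to-signal ratio) is the energy of the difference between the target and the model output, divided by the energy of the target. Below is a minimal sketch of that basic definition in plain Python. It is only an illustration: training pipelines often add pre-emphasis filtering or extra loss terms on top, which this sketch leaves out, and the function name is my own.

```python
def esr(target, prediction):
    """Error-to-signal ratio: sum((y - y_hat)^2) / sum(y^2).

    `target` and `prediction` are equal-length sequences of samples.
    Lower is better; 0.0 means a perfect match.
    """
    if len(target) != len(prediction):
        raise ValueError("signals must have the same length")
    signal_energy = sum(y * y for y in target)
    if signal_energy == 0.0:
        raise ValueError("target signal is silent; ESR is undefined")
    error_energy = sum((y - p) ** 2 for y, p in zip(target, prediction))
    return error_energy / signal_energy
```

A model that outputs silence scores an ESR of 1.0 under this definition, which is a handy reference point when reading training logs.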

To try and figure out the best model parameters, I used ChatGPT to create a script that would repeat the training process while randomising a number of key training parameters. I ended up doing ~600 runs. I then performed a Random Forest Regression analysis on the results of that training, with best ESR as the outcome metric. I used the suggested parameters for a further 100 runs of the same input/output audio pair, keeping the parameters constant.
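The randomised runs described above can be sketched roughly as follows. This is not the actual script: the parameter names, ranges, and the `fake_train` stand-in are all hypothetical, and a real version would launch a training run and read back its best ESR.

```python
import random

# Hypothetical search space: names and ranges are illustrative only,
# not the parameters or values used in the runs described in this post.
SEARCH_SPACE = {
    "hidden_size": [8, 12, 16, 24, 32],
    "learning_rate": (1e-4, 1e-2),  # sampled log-uniformly
    "batch_size": [16, 32, 64],
}


def sample_params(rng):
    """Draw one random configuration from SEARCH_SPACE."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    return {
        "hidden_size": rng.choice(SEARCH_SPACE["hidden_size"]),
        "learning_rate": lo * (hi / lo) ** rng.random(),
        "batch_size": rng.choice(SEARCH_SPACE["batch_size"]),
    }


def random_search(train_fn, n_runs, seed=0):
    """Run train_fn on n_runs random configurations and log each result."""
    rng = random.Random(seed)
    results = []
    for run in range(n_runs):
        params = sample_params(rng)
        best_esr = train_fn(params)  # train_fn returns the best ESR of the run
        results.append({"run": run, **params, "best_esr": best_esr})
    return results


def fake_train(params):
    """Placeholder for a real training run; returns a made-up ESR."""
    return 1.0 / params["hidden_size"]


if __name__ == "__main__":
    for row in random_search(fake_train, n_runs=5):
        print(row)
```

The resulting rows (one per run) are the kind of table you can hand to a regressor such as scikit-learn's `RandomForestRegressor`, with `best_esr` as the target, to see which parameters matter most.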

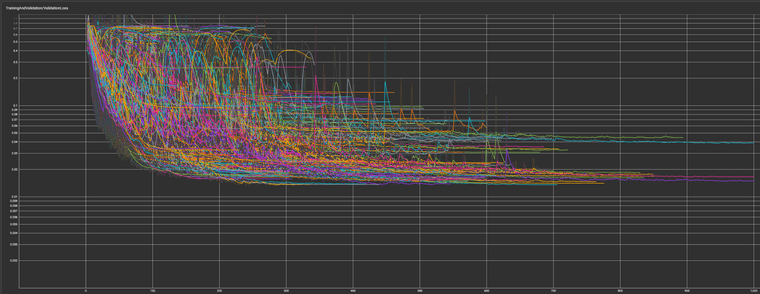

LSTM training results of randomised parameters

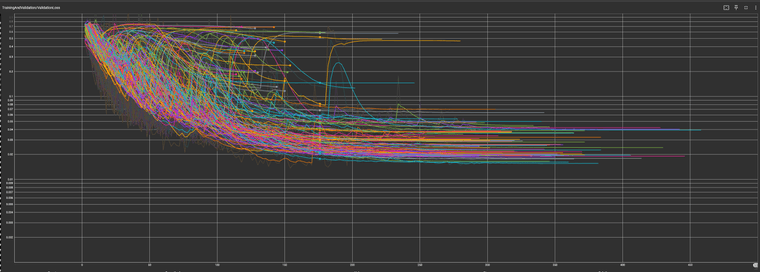

LSTM training results with Random Forest recommended parameters

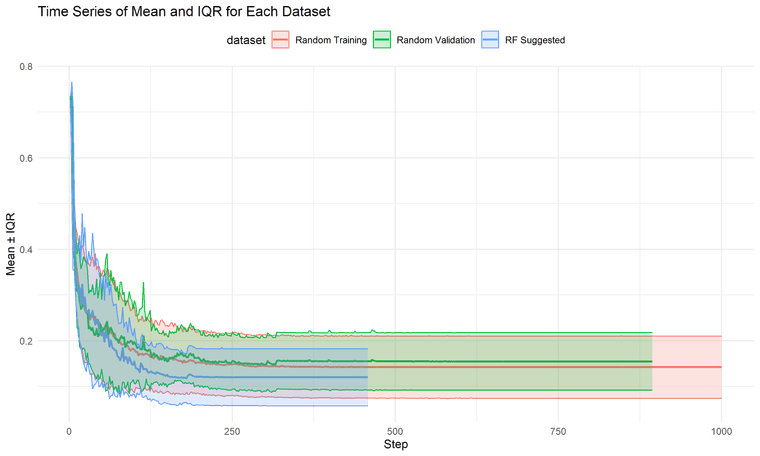

Comparison of average and IQR ESR of Random Parameters vs RF Suggested Parameters

The random forest suggested parameters did result in a lower average ESR, however it did not much reduce the amount of variation with roughly an equivalent IQR.
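For reference, the mean and IQR comparison can be computed with nothing more than Python's standard library. This is a minimal sketch, assuming you have the best ESR of each run in a list; the function name and the choice of the "inclusive" quartile method are my own assumptions, and other tools may use a slightly different quartile convention.

```python
import statistics


def summarise(esr_values):
    """Return mean, median and interquartile range of a list of ESR values.

    Needs at least two values, since statistics.quantiles requires that.
    """
    q1, median, q3 = statistics.quantiles(esr_values, n=4, method="inclusive")
    return {
        "mean": statistics.mean(esr_values),
        "median": median,
        "iqr": q3 - q1,  # spread of the middle 50% of runs
    }
```

Calling `summarise` once on the random-parameter runs and once on the fixed-parameter runs gives the two sets of numbers to compare.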

This is the exact same input/output audio pair trained with Neural Amp Modeler (NAM). I only did 10 runs here and did not bother computing the IQR, because the extreme reduction in variability was pretty evident. NAM also obtained a better final ESR with a comparable model size.

Something else I discovered during my initial tests with the AIDA pipeline was that some of the clean signal was blended into my model. This is because a flag in the config files, skip_con, was set to "1" by default. Setting this to "0" removes the clean signal from the model. From talking to the folks at AIDA, that flag is there to help more accurately model things like Tube Screamers and Klon pedals, which do have some clean blend in their designs, but obviously this isn't useful for high gain amp designs, or things like a Neve preamp, which would not have such a clean blend. skip_con will be set to 0 by default in updates to the AIDA pipeline.

There have been two recent developments. Jatin Chowdhury, the original author of RT-Neural, has created a fork of RT-Neural that can read NAM weights (https://github.com/jatinchowdhury18/RTNeural-NAM). However, this fork only has the NAM functionality and cannot read the Keras models.

What might be of more interest is Mike Oliphant's "NeuralAudio" (https://github.com/mikeoliphant/NeuralAudio). Mike's code is capable of reading NAM files using NAM core, as well as NAM and Keras models using the RT-Neural implementation. In my opinion this would be the optimal solution to incorporate into HISE.

The reason for this is flexibility. There are situations where NAM is clearly the best choice when it comes to Neural Models, especially in high saturation situations like guitar amps. However, I think LSTM has advantages in situations where time domain information is more important, or indeed on less complex signals like say component/circuit modelling where LSTM is a little lighter on CPU. Being able to use both of these approaches would open up a lot of opportunities for processing in HISE. Not just in terms of audio fx but in terms of processing instruments too.

I will publish all this info and my scripts on GitHub soon for anyone that might want to use them. The script for AIDA will be useful for people who wish to do multiple runs of training until they get a satisfactory ESR (which in my opinion is anything less than 0.02 for high gain guitar amps).
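Until the scripts are up, the "keep training until the ESR is good enough" idea can be sketched like this. It is only an outline under assumptions: `train_fn` is a hypothetical callable that performs one full training run and returns a `(model, esr)` pair, and the 0.02 default simply mirrors the threshold mentioned above.

```python
def train_until_good(train_fn, target_esr=0.02, max_runs=20):
    """Repeat training until a run beats target_esr or max_runs is reached.

    train_fn(run_number) is a hypothetical callable that performs one
    training run and returns (model, esr). Returns the best model seen,
    its ESR, and the number of runs actually performed.
    """
    if max_runs < 1:
        raise ValueError("max_runs must be at least 1")
    best_model, best_esr = None, float("inf")
    runs_done = 0
    for run in range(1, max_runs + 1):
        model, esr = train_fn(run)
        runs_done = run
        if esr < best_esr:
            best_model, best_esr = model, esr
        if best_esr < target_esr:
            break  # good enough, stop burning GPU time
    return best_model, best_esr, runs_done
```

Keeping the best model seen so far means you still get something usable if no run reaches the threshold within `max_runs`.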

-

@ccbl Amazing! Thanks a ton for sharing

-

I’ve come across the same results as you. Using the AIDA-X pipeline as my starting point as well. It’s a challenge to get consistent results, especially with sources that contain a lot of harmonics.

I’ve been tweaking the training scripts to get a more usable result but don't have much to report yet.

-

@ccbl Thanks for all this valuable info! I also managed to load a few pre-trained AIDA-X weights for guitar amps.

Quick question: Are you able to compile .vst, standalone applications, or any binary that includes neural?

-

@hisefilo I actually compiled one just yesterday that can switch between 1 of 3 models. Works in Reaper.

-

@ccbl Is it possible to share a simplified project that works, please? The models cannot be compiled here. It would be so useful for starters.

-

@ccbl Wow, good to know. That means it's my bad :) As @Fortune says, can you share a minimal example?

Not even Christoph's NeuralNetworkExample creates a binary on my end. I'm missing something.

This is an AIDA-X model I found by googling that loads and works within HISE.

https://tonehunt.org/kordas/0d42ea78-a6a7-493d-80d3-d5ad3044e36b

-

@hisefilo I used this as a basis. The important part for now seems to be that your model is in the "keras" format. The AIDA-X ones are like that by default.

-

@ccbl

After pounding my head against the wall for weeks, I finally feel like I'm making progress here. I've got the AIDA-X models running! For the life of me, I can't seem to switch models once the NN is created and the first model is loaded. I am trying to load different AIDA-X models based on a user selection. If I understand correctly, you got this working. Would you be willing to share how you are switching models?

-

@scottmire Hi there. I think I cheated, basically. I used a soft bypass module matrix that switches the network based on which buttons are pressed at the time. I'm not sure if this has performance implications; it certainly seems like the more networks are in the code base, the worse the plugin runs. When I get more time I think a new thread is in order where we can all discuss optimisations and solutions.

-

@ccbl

So close!! I have 5 NN models, each wrapped in a soft bypass. All 5 are then loaded into a Split container. I have a slider mapped to an XFader module that controls the soft bypass states. As you turn the knob, the XFader controls the bypass. Think of it like the "GAIN" knob on an amp: as you turn up the slider, NN models of progressively more distortion are loaded. It's working... BUT when a NN is bypassed or unbypassed, there is an audible pop. Like I said, so close. LOL.

-

@scottmire the soft_bypass node has that issue. Try the branch node instead.

-

@HISEnberg

I switched to a branch node and it does "pop" the very first time it cycles through each NN node. After that initial time, it doesn't "pop" as you cycle through.

-

@scottmire you can right-click the soft bypass node to adjust the fade time

-

I believe the issue I'm seeing is that whenever a NN node is instantiated and loaded with a model for the first time, there is a loud "pop" coming from the NN node. If you are trying to switch between multiple NN nodes, whether you are using a Branch node or a soft_bypass, the NN nodes are not instantiated UNTIL signal is passing through them. So, no matter what you use to switch between NN nodes, the first time they are selected, they pop. After that (at least with a Branch node), they no longer "pop".

I could work around this, IF all the NN nodes instantiated at the same time (maybe on load). I could just mute the output of the node for a moment. But, it doesn't seem to work that way.
