Sampler normalisation, is it per channel?

-

@d-healey I'd do this in the raw sample content, personally. You could easily script something in Lua inside Reaper to do this.

-

@Orvillain Usually I do too, but I missed it and wanted to avoid going through my sample exporting chain again - I have a setup that is super efficient for batch processing but cumbersome for a single sample.

-

@d-healey yeah, that sounds like a you-problem :)

Just kidding. The use case for normalising samples is to quickly remove all gain differences between samples and replace them with velocity mods (or whatever), but you definitely want to retain the stereo balance, hence the normalisation to the louder of the two channels.

And what is the target gain, is it -6db?

Actually 0 dB, but I think there is a maximum gain so that it doesn't completely blow up the sample if you feed it -90 dB noise.
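As a rough illustration of "normalise to the loudest channel, but cap the gain", here is a minimal C++ sketch. It is not HISE's actual code: `channelPeak`, `linkedNormalisationGain` and the `maxGain` default of 100 (about +40 dB) are assumptions made up for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: absolute peak of one channel (0.0 for an empty channel).
inline float channelPeak(const std::vector<float>& channel)
{
    float peak = 0.0f;
    for (float x : channel)
        peak = std::max(peak, std::fabs(x));
    return peak;
}

// One gain factor for the whole stereo sample, derived from the louder channel,
// so the left/right balance is preserved. Target is full scale (0 dB = 1.0).
// maxGain is an assumed safety cap, not a value taken from HISE.
inline float linkedNormalisationGain(const std::vector<float>& left,
                                     const std::vector<float>& right,
                                     float maxGain = 100.0f)
{
    assert(maxGain >= 1.0f);

    const float peak = std::max(channelPeak(left), channelPeak(right));

    if (peak <= 0.0f)
        return 1.0f; // pure silence: leave the sample untouched

    return std::min(1.0f / peak, maxGain);
}
```

With a left peak of 0.5 and a right peak of 0.125, the shared gain is 2.0: the left channel reaches full scale, the right channel ends up at 0.25, and the 4:1 balance between them is unchanged.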

-

@Christoph-Hart That's fatal. Does this mean that it is more original to create dynamics only in samplers without using a velocity modulator? And if I use a velocity modulator, do the samples have to be normalized for the velocity modulator to work correctly?

-

@Robert-Puza said in Sampler normalisation, is it per channel?:

Does this mean that it is more original to create dynamics only in samplers without using a velocity modulator?

I'm not 100% sure what you mean. Velocity is a MIDI control that can be linked to all kinds of parameters. You can use it to control volume, pitch, filters, group xf, etc.

And if I use a velocity modulator, do the samples have to be normalized for the velocity modulator to work correctly?

I always normalise my samples as I find this gives me the most consistent results, but you lose the natural volume relationship between different notes so you might need to recreate that using a note modulator, or through some other method.

-

@d-healey I only want to use velocity as information from the MIDI keyboard to switch between the sampled dynamic levels. But how do you correctly decide at what key-strike force a pianissimo sample plays, and at what force a forte sample (127) plays? I think only a musician can make the right decision.

-

@Robert-Puza If you set the samples in the first dynamic level, you have the option of 0 to 127. How do you decide that LoVel will be 0 to HiVel 50 or 0 to 57?

-

@Robert-Puza Assuming you only have 1 level of recorded dynamic you can add a velocity mod, set the intensity to something like 80% and that will be pretty good for volume control. You should probably also use velocity to control the cutoff (or gain) of a filter so you get a change in timbre as well.
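To make the "intensity at 80%" idea concrete, here is a minimal C++ sketch of one common way to scale a gain by velocity. The formula is an assumption for illustration (at intensity 0 the velocity is ignored, at intensity 1 the gain follows velocity linearly) and `velocityGain` is a hypothetical name, not a HISE API.

```cpp
#include <cassert>

// Assumed gain-style intensity curve:
//   intensity 0.0 -> gain is always 1.0 (velocity has no effect)
//   intensity 1.0 -> gain equals velocity / 127
inline float velocityGain(int velocity, float intensity)
{
    assert(velocity >= 0 && velocity <= 127);
    assert(intensity >= 0.0f && intensity <= 1.0f);

    const float normalised = static_cast<float>(velocity) / 127.0f;
    return (1.0f - intensity) + intensity * normalised;
}
```

Under this assumption, an intensity of 0.8 means the softest possible note still plays at a gain of 0.2 rather than dropping to silence, while velocity 127 plays at full gain.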

If you have multiple dynamics recorded then you can map them to different velocity ranges in the mapping editor - in addition to using the velocity modulators as I described.

@Robert-Puza said in Sampler normalisation, is it per channel?:

If you set the samples in the first dynamic level, you have the option of 0 to 127. How do you decide that LoVel will be 0 to HiVel 50 or 0 to 57?

You can decide. There is no right way to do it. Just go with what sounds good to you.
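For anyone unsure what the LoVel/HiVel split actually does, here is a minimal C++ sketch of picking a recorded dynamic layer from the incoming velocity. The struct, the function name and the example split points are hypothetical. The boundaries are exactly the part you tune by ear.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical description of one recorded dynamic layer and its velocity range.
struct DynamicLayer
{
    std::string name;
    int loVel; // lowest velocity that triggers this layer (inclusive)
    int hiVel; // highest velocity that triggers this layer (inclusive)
};

// Returns the index of the first layer whose range contains the velocity,
// or -1 if the velocity falls into a gap between the mapped ranges.
inline int layerForVelocity(const std::vector<DynamicLayer>& layers, int velocity)
{
    assert(velocity >= 0 && velocity <= 127);

    for (int i = 0; i < static_cast<int>(layers.size()); ++i)
        if (velocity >= layers[i].loVel && velocity <= layers[i].hiVel)
            return i;

    return -1;
}
```

With layers mapped as `{"pp", 0, 50}`, `{"mf", 51, 100}` and `{"ff", 101, 127}`, velocity 50 selects the first layer and velocity 51 the second. Moving the first boundary from 50 to 57 only changes which keystrokes still trigger the softest recording.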

-

@d-healey Multiple dynamics. And yes, you are right, it must be decided by listening.

-

@d-healey My 2 cents on this: it's better that HISE doesn't match the two sides if they aren't equal. If HISE normalises both channels to the same level, the intended stereo effect of that sound is lost, especially in sound design, where we put a couple of layers together on different sides. An option to match them when we need to would be nice, though.

-

@WepaAudio said in Sampler normalisation, is it per channel?:

@d-healey My 2 cents on this: it's better that HISE doesn't match the two sides if they aren't equal. If HISE normalises both channels to the same level, the intended stereo effect of that sound is lost, especially in sound design, where we put a couple of layers together on different sides. An option to match them when we need to would be nice, though.

100% this. Stereo samples should never be normalized per channel by default. Have an option, but not as a default.

-

@dannytaurus that's why it's the default and I have no intention of changing it.

-

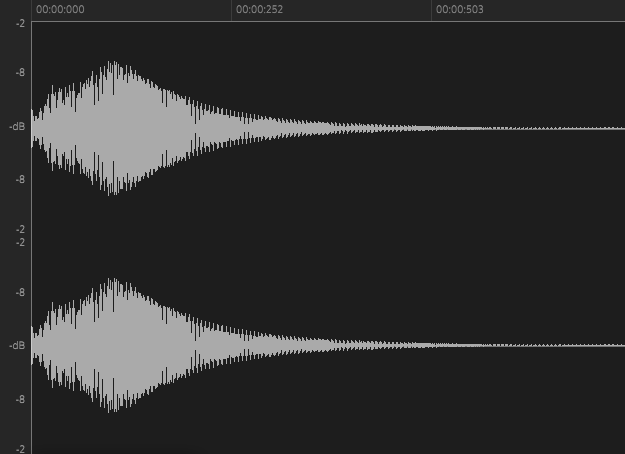

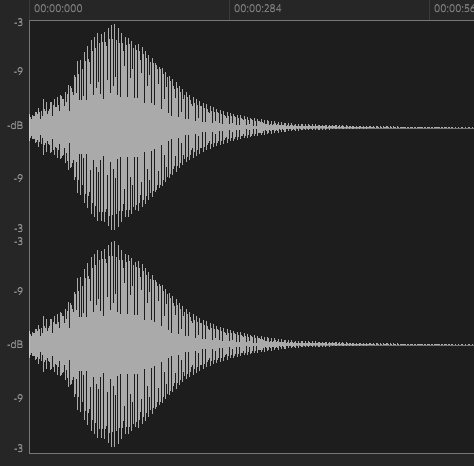

These have both been normalised but look like they are hitting totally different peaks. Why is that?

-

@Christoph-Hart said in Sampler normalisation, is it per channel?:

@dannytaurus that's why it's the default and I have no intention of changing it.

Awesome

Sample normalization has been a bugbear for me for the last 30 years.

More precisely, other people's idea of sample normalization

-

These have both been normalised but look like they are hitting totally different peaks. Why is that?

Have you edited the samples after creating the samplemap? The normalisation level is cached (so that it doesn't have to scan the samples every time you load the samplemap), but if you have tampered with the audio afterwards you might get a wrong normalisation gain factor.

Other than that, no idea, maybe the waveform renderer is playing tricks on you and doesn't show you the real peak in this zoom level, but that's a wild guess.

-

@Christoph-Hart I haven't done anything with them since mapping. But it just occurred to me, these are multi-mic samples, so could it be that another mic of the same sample is the one that is hitting the peak?

-

@d-healey Ah yes, that's the culprit:

    for (auto s : soundArray) // <= the list of multimic samples
        highestPeak = jmax<float>(highestPeak, s->calculatePeakValue());

but that is definitely a feature not a bug - the same rule that applies to stereo channels applies to multiple mic positions too - you certainly don't want to mess with their inner balance.
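A small standalone C++ sketch shows why two normalised samples can display different peaks for the same mic position. It mirrors the loop above only in spirit: `MicPosition`, `sharedGain` and the peak values are made up for illustration, and `std::max` stands in for JUCE's `jmax`.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for one mic position of a multimic sample,
// reduced to its precomputed absolute peak.
struct MicPosition
{
    float peak;
};

// One gain factor for all mic positions of a sample, taken from the loudest one,
// so the balance between the mic positions stays intact.
inline float sharedGain(const std::vector<MicPosition>& mics)
{
    float highestPeak = 0.0f;

    for (const auto& m : mics)
    {
        assert(m.peak >= 0.0f);
        highestPeak = std::max(highestPeak, m.peak);
    }

    return highestPeak > 0.0f ? 1.0f / highestPeak : 1.0f;
}
```

Take sample A with peaks {close 0.5, room 0.25} and sample B with peaks {close 0.25, room 0.5}. Both get a shared gain of 2.0, but the close mic of A then peaks at 1.0 while the close mic of B peaks at only 0.5, because in B the room mic is the one that hits full scale.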

-

@Christoph-Hart said in Sampler normalisation, is it per channel?:

but that is definitely a feature not a bug

Yeah that's good, I've just been puzzled by the UI not hitting the same peak across different samples, and now the mystery is solved :)