Simple ML neural network

-

@iamlamprey In that case I'd go with the IR which takes a few seconds to make - I have a script that can process 1000s of files to create loads of IRs in one go.

@iamlamprey said in Simple ML neural network:

rather use multiple lightweight networks and fade between them with a Gain node or something

Can do the same with multiple IRs

-

@iamlamprey said in Simple ML neural network:

@Lindon id say they can be about as accurate as a decent whitebox model, 8.5-9.0/10

so to get this clear in my head - the ML version is no better at the dynamic processing of guitar input that a set of IRs is?

..and by "dynamic" I mean different volume levels of input as the instrument is played...quietly (for those AOR ballads) or loudly...(thrashing it)

-

@Lindon it really depends on the implementation of the model and the data, having a robust model trained on a lot of varying data (different amp settings, different players etc) will sound great, and probably beat IRs and blackbox models

that being said, a dedicated whitebox model recreating each component of the signal path is still probably the best method for physical accuracy, i don't think a test comparing a proper neural net vs a proper physical model has ever been done, for obvious reasons ($$$)

there's still a lot of progress to be made with neural audio, so who knows how things will look in a year or two

-

@iamlamprey said in Simple ML neural network:

@Lindon it really depends on the implementation of the model and the data, having a robust model trained on a lot of varying data (different amp settings, different players etc) will sound great, and probably beat IRs and blackbox models

that being said, a dedicated whitebox model recreating each component of the signal path is still probably the best method for physical accuracy, i don't think a test comparing a proper neural net vs a proper physical model has ever been done, for obvious reasons ($$$)

there's still a lot of progress to be made with neural audio, so who knows how things will look in a year or two

Yes you are probably right, I was however thinking the amount of effort to build a neural net based amp sim would possibly greater than that to build a "nebula like" , "dynamic" IR model...

-

@iamlamprey - so does a "well formed/well trained" neural net model perform with the non-linearities of a real amp or not? I guess I'm back at "is ML even worth it right now?" as a question.

-

@Lindon here's an example of an effective implementation of NN:

https://mcomunita.github.io/gcn-tfilm_page/

Christian's repos are also public (or were last time i checked), they're more complex models compared to a regular LSTM, often utilizing multi-resolution STFT loss & other advanced things like dilated convolutions

I believe RTNeural has convolution dilation already implemented, so something like this is possible, albeit probably difficult

-

@iamlamprey said in Simple ML neural network:

@Lindon here's an example of an effective implementation of NN:

https://mcomunita.github.io/gcn-tfilm_page/

Christian's repos are also public (or were last time i checked), they're more complex models compared to a regular LSTM, often utilizing multi-resolution STFT loss & other advanced things like dilated convolutions

I believe RTNeural has convolution dilation already implemented, so something like this is possible, albeit probably difficult

Thanks -listening to the examples was interesting. Its clear the LSTM approach has some weaknesses at low gain inputs...but the approach outlined in the paper seems to deal with these much better...but as you say its probably considerably more difficult to achieve -especially as I have no idea what STFT is or dilated convolutions or how to build a model using them.

Hmm, this is getting more complex the more I look at it.

-

@Lindon said in Simple ML neural network:

-especially as I have no idea what STFT is

Short-Time Fourier Transform, basically a series of FFT's across the length of the signal to see how the frequency response changes over time, "multi-resolution" STFT means to create multiple STFT's of the same signal using different settings, which helps solve the frequency-time resolution issue

or dilated convolutions

if i'm remembering correctly, dilated convolutions basically refers to a layer in the network "skipping" a certain number of values and relying on other layers to process them, this means the resolution for that single layer might be lower, and therefore less computationally intensive which means you can have a larger receptive field (how far back in time the model can look)

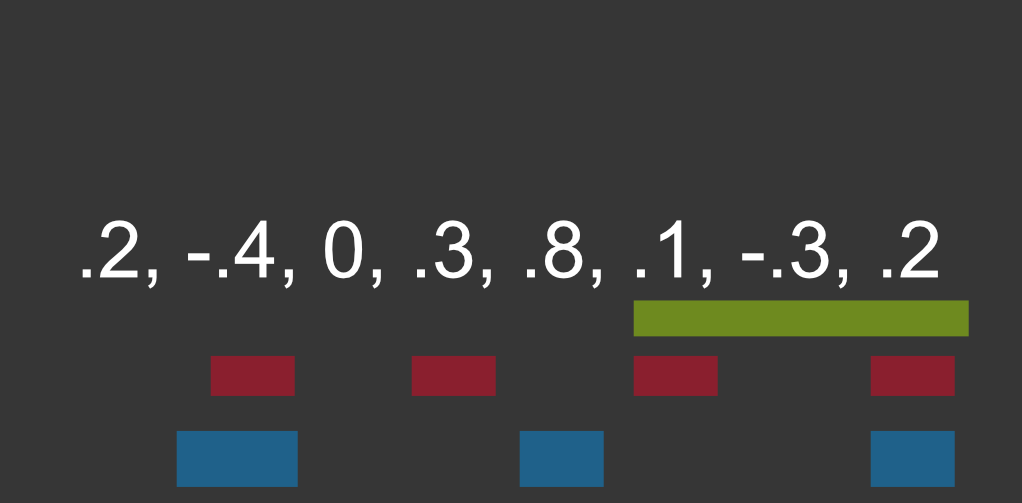

Green: No dilation

Red: Skips 1

Blue: Skips 2or how to build a model using them

both Torch & Tensorflow have built-in dilation parameters for layers:

tf.keras.layers.Conv1D(dilation_rate=1)

torch.nn.Conv1d(dilation=1))Hmm, this is getting more complex the more I look at it.

Yep, the cool part of ML is how automated it is, the uncool part is the massive amount of prerequisite work needed to be able to create robust models that do all the work for you

if you're wondering if I think you should do ML or old school blackbox modelling for your specific project, I'd lean towards the latter, especially if you already have experience in the field, even hiring someone to consult on an ML project would be tricky because the field is very new and there's still a lot of problems to be solved

-

@iamlamprey yep I am beginning to see the magnitude of the problem space,

This is an interesting interview;

https://www.youtube.com/watch?v=WLTzbEKTxhk (starts at min 12)

For those who dont know Neural DSP are the new kids on the block and I guess are the reason for all the fuss about neural net based guitar amp sims.

I looked up how they were funded, they got E5.9 Million in 3 Round of funding... and a lot of them arrived at work day 1 with PhDs in this field, and it still took them from 2018 to 2022 to get anything out the door...so to be in their league -= a lot of money + a lot of research+ a lot of time.

-

@Lindon Let me know if you want my IR script :D

-

@d-healey said in Simple ML neural network:

@Lindon Let me know if you want my IR script :D

My that made me laugh out loud.....

-

Sorry to hijack this thread potentially. I wonder if it would be worth implementing https://github.com/sdatkinson/NeuralAmpModelerCore

NAM has been gathering a lot of steam recently, the training process for new models is really easy to do and IMO it produces the best sounding models of gear to date, at least gear without time constants. It would be a great way to produce amp sims, or even just add a really accurate post sound processing option in synths and virtual instruments. Imagine an E-piano or organ etc with a great tube amp drive processor.

-

@ccbl So when I was looking at using ML to do Amp Sims I looked at the implementation you point at.

The important thing to remember when looking at this stuff is to realise that nearly every implementation(including the one you point at) are "snapshots" of an amp in a given state (controls set to a given position), yes they tend to do the non-linearities better than say an IR would, but what they dont do is allow you to meaningfully change settings on the amp sim (pre-amp, treble boost, mid, bass, etc. etc.) and get back the non-linearities associated with that combination of control settings.

What they do is post-process(or pre-process) the signal with eq etc. Now this is fine, but.... what you get are the non-linearities of a given snapshot with some post-processing on it. This can sound acceptable, but its not actually correct. Those Neural DSP guys have some deep, gnarly and secret approaches/algos for doing the sim correctly... But if you uncover what those approaches are then feel free to post them here

-

@Lindon The audio signal does not have to be the only inputs into the network - you can train it with additional parameters as input and if the training is successful, it will mimic the parameters in the 1-dimensional space that you gave it.

-

@Lindon I'm well aware of how NAM works. It is possible to make a parameterized model. But the thing is with the right DSP surrounding it you don't really need to and you drastically cut down the number of input output pairs you need to make. For instance, you can split the pre-amp and poweramp and just do digital EQ in between given that the EQ tends to be after the non-linear gain, and the EQ itself behaves linearly. But those eq inputs feed into the power amp model.

A lot of the time for what you want to do, a single snapshot is actually fine, just varying the input gain alters the amount of saturation, say in a tube mic pre or something like that. So given NAM is the best out there right now it would make it a really useful module I think in HISE.

-

@ccbl said in Simple ML neural network:

A lot of the time for what you want to do, a single snapshot is actually fine, just varying the input gain alters the amount of saturation, say in a tube mic pre or something like that. So given NAM is the best out there right now it would make it a really useful module I think in HISE.

What does that offer that cannot be achieved with the current neural network inference framework in HISE (RT Neural)?

-

@Christoph-Hart A few things I guess. The number of NAM captures currently dwarfs all the other that are supported by RTNeural (I have used guitar ML in the past for instance) and it's only growing (here for example https://tonehunt.org/). Not just 1000s of amp snap shots but people are really getting into studio gear captures too. There's a huge group of people for support in generating good captures and technical training support. And of course it is probably the best sounding in terms of accuracy right now.

I'm interested enough in using a neural net that I'm willing to use RTNeural, it's still a great system. NAM is becoming a defacto standard in a lot of NN capture spaces currently though. So for the future it seems like a good addition to the code base. And on a personal level I've already created over 1000 NAM models.

Maybe once I've learned more of the basics, if someone is willing to help me with it I would appreciate it.

-

@ccbl And can't you just convert the models to work in RTNeural? In the end it's just running maths and I'm not super interested in adding the same thing but in 5 variations.

-

@Christoph-Hart but....

This would require the developer(s) to convert each from the NAM model, and if HISE loaded/played NAM models natively - then the end user could load any model they wanted...so 1,000s of models....instantly available.

-

@ccbl This looks great, the colab notebook for training is super simple as well (you reamp the test signal and upload it, then wait).

Someone already implemented it into a pedal and it's selling for €500.