Hello my old friends.

Here's how to get realtime Pitch-Shifting working with the Rubberband Library.

Before you start: your project either needs to be GPL (open-source), or you need to pay the developers of Rubberband for a license (and Christoph!). Also, this is Windows-only; I don't use or own a Mac, so YMMV.

First, there are some dependency conflicts between JUCE and Rubberband. To fix them, we have to open the file:

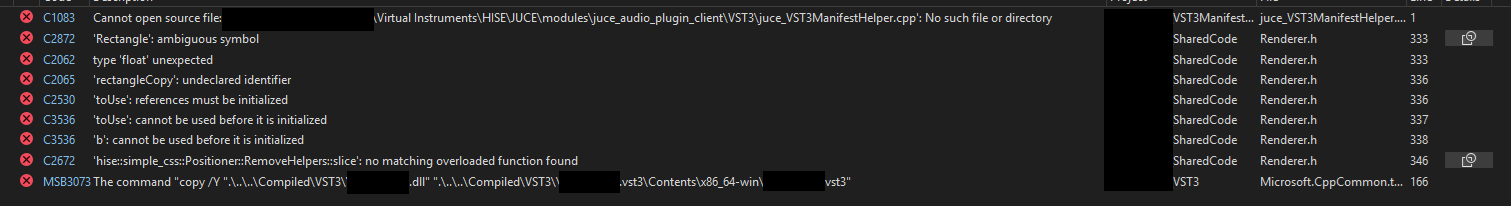

HISE/hi_tools/simple_css/Renderer.h

And replace EVERY instance of the Rectangle class with juce::Rectangle instead. I tried the preprocessor flags and they didn't fix the problem, so I just ended up replacing everything manually. Don't use Find & Replace: there are some other "Rectangle" identifiers that you don't want to overwrite, so go through manually and replace every Rectangle with the explicitly qualified juce:: variant.

I also renamed the copy variable in the Positioner class to rectangleCopy (lines 333 and 336), because I think it will also cause problems on Windows.
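For anyone wondering why the qualification matters: my assumption (not verified against the HISE source) is that once Rubberband pulls in the Windows headers, the Win32 GDI function Rectangle lands in the global namespace, and any unqualified Rectangle<...> in a file with `using namespace juce` becomes ambiguous. Here's a minimal self-contained C++ sketch of that situation. `juce_like` and `qualifiedWidth` are made-up stand-ins, and the global function only mimics the GDI one with a simplified signature:

```cpp
// Stand-in for the Win32 GDI function Rectangle() that <windows.h> declares
// in the global namespace (signature simplified for this sketch).
inline bool Rectangle(int, int, int, int) { return true; }

// Stand-in for the JUCE class template juce::Rectangle<T>.
namespace juce_like
{
    template <typename T> struct Rectangle
    {
        T x, y, w, h;
        T getWidth() const { return w; }
    };
}

using namespace juce_like;

// Rectangle<int> r { 0, 0, 10, 20 };   // unqualified: 'Rectangle' is ambiguous here

// Hypothetical helper: the fully qualified name sidesteps the clash.
inline int qualifiedWidth()
{
    juce_like::Rectangle<int> r { 0, 0, 10, 20 };
    return r.getWidth();
}
```

Uncommenting the unqualified line should reproduce the kind of "ambiguous symbol" error the manual replacement avoids.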

Next, in your Projucer, add the preprocessor flag HI_ENABLE_CUSTOM_NODES=1.

Okay, now we can build HISE. When it's done, open a project and hit Tools -> Create C++ Third Party Node Template. Name it anything.

We'll also need to tell HISE where our Custom Nodes are stored. Open HISE Settings -> Development -> Custom Node Path and point it to {*YourProjectName*}\DspNetworks\ThirdParty. Click "Save".

We just created our Third Party node, but it won't do much good without the Rubberband Library.

Navigate to that same folder in a File Browser. There should be a src folder; if there isn't, create it. Clone the Rubberband Repository into that folder.

Open your custom node in any editor. Let's start with the includes, which go AFTER the JUCE one:

// Original Code:

#pragma once

#include <JuceHeader.h>

// What we're adding:

#include "src/rubberband/single/RubberBandSingle.cpp"

#include "src/rubberband/rubberband/RubberBandStretcher.h"

Inside the primary node struct, declare a unique pointer to hold our RubberBandStretcher:

// Original Code:

static constexpr int NumTables = 0;

static constexpr int NumSliderPacks = 0;

static constexpr int NumAudioFiles = 0;

static constexpr int NumFilters = 0;

static constexpr int NumDisplayBuffers = 0;

// What we're adding:

std::unique_ptr<RubberBand::RubberBandStretcher> rb;

In the prepare method, construct the RubberBandStretcher with the OptionProcessRealTime option and assign it to the pointer:

void prepare(PrepareSpecs specs)

{

rb = std::make_unique<RubberBand::RubberBandStretcher> (specs.sampleRate, specs.numChannels, RubberBand::RubberBandStretcher::Option::OptionProcessRealTime);

rb->reset(); // not sure if this is necessary, but I don't think it hurts

}

RubberBand uses Block-Based processing, not per-sample. So we'll use the process method, not the processFrame one:

template <typename T> void process(T& data)

{

if (!rb) return; // safety check in case the rb object isn't constructed yet

// "data" is given to us by HISE, it has multiple channels of audio values as Floats, as well as some utility functions:

int numSamples = data.getNumSamples(); // the number of audio samples in the block

auto ptrs = data.getRawDataPointers(); // RB expects Pointers, not just values

rb->process(ptrs, numSamples, false); // This is the Pitch-Shifting Part

// the "false" tells RB whether this is the final block of input, which it never is in realtime

int available = rb->available(); // Checks to see if there is repitched audio available to return

if (available >= numSamples)

{

// We have enough output, retrieve the repitched audio

rb->retrieve(ptrs, numSamples);

}

}
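One thing to be aware of with the snippet above: while the shifter is still warming up (available < numSamples), the block is left untouched, so the unshifted input passes straight through. If you'd rather hear silence during that window, here's a rough self-contained sketch of the idea. MockStretcher and processBlock are hypothetical stand-ins I made up so the example compiles on its own. The mock only borrows the method names process / available / retrieve and is NOT the RubberBand API:

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical stand-in for a realtime stretcher (NOT the RubberBand API):
// it holds back `delay` samples before any output becomes available.
struct MockStretcher
{
    explicit MockStretcher(std::size_t delaySamples) : delay(delaySamples) {}

    void process(const float* in, std::size_t n) { fifo.insert(fifo.end(), in, in + n); }

    std::size_t available() const { return fifo.size() > delay ? fifo.size() - delay : 0; }

    void retrieve(float* out, std::size_t n)
    {
        assert(n <= fifo.size()); // caller must check available() first
        for (std::size_t i = 0; i < n; ++i)
        {
            out[i] = fifo.front();
            fifo.pop_front();
        }
    }

    std::size_t delay;
    std::deque<float> fifo;
};

// Feeds one block in and overwrites it with processed output when enough is
// available; otherwise writes silence. Returns true if output was delivered.
inline bool processBlock(MockStretcher& s, float* block, std::size_t n)
{
    s.process(block, n);

    if (s.available() >= n)
    {
        s.retrieve(block, n);
        return true;
    }

    for (std::size_t i = 0; i < n; ++i)
        block[i] = 0.0f; // not enough output yet: silence instead of dry input

    return false;
}
```

Whether silence or dry pass-through is the better warm-up behaviour is a judgment call; the real library may also hand back output in uneven amounts, which this mock doesn't model.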

Okay, we're almost there. We just need to tell the Pitch Shifter how much we want to shift the signal by. It uses a Frequency Ratio, so:

1.0 = None

0.5 = - Octave

2.0 = + Octave

If you'd prefer to have a Semitones-based control, I recommend using the conversion function in the ControlCallback of your UI element instead. It looks like this:

// In the GUI Slider Control Callback

// This assumes the Slider goes from -12 to +12, with a stepSize of 1.0

inline function onknbPitchControl(component, value)

{

// bypass if semitones = 0 (save CPU & reduce latency)

if (value == 0)

pitchShifterFixed.setBypassed(true);

else

pitchShifterFixed.setBypassed(false);

// Calculate the FreqRatio from Semitone Values

local newPitch = Math.pow(2.0, value / 12.0);

pitchShifterFixed.setAttribute(pitchShifterFixed.FreqRatio, newPitch);

};
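If you'd rather do the conversion inside the node than in the UI callback, the same formula carries over to C++. This is just a sketch: semitonesToRatio is a hypothetical helper name, not something HISE or RubberBand provides.

```cpp
#include <cmath>

// Converts a semitone offset to a frequency ratio: ratio = 2^(semitones / 12).
// Hypothetical helper for illustration only.
inline double semitonesToRatio(double semitones)
{
    return std::pow(2.0, semitones / 12.0);
}
```

You'd call it in setParameter before handing the value to setPitchScale, and in that case the parameter range would presumably need to be -12 to 12 instead of 0.5 to 2.0.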

Either way, we need to connect our Custom Node's only Parameter to our RubberBand Object.

In the createParameters method:

void createParameters(ParameterDataList& data)

{

{

// Create a parameter like this

parameter::data p("FreqRatio", { 0.5, 2.0 }); // Between -1 Octave and +1 Octave

// The template parameter (<0>) will be forwarded to setParameter<P>()

registerCallback<0>(p);

p.setDefaultValue(1.0);

data.add(std::move(p));

}

}

And finally, we connect the Parameter in the setParameter method:

template <int P> void setParameter(double v)

{

if (P == 0) // This is our first parameter, if you add more, increment the index.

{

if (!rb) return; // safety check in case the parameter is set before prepare() has constructed rb

rb->setPitchScale(v); // passes the parameter value "v" to our pitch-shifter

}

}

Okay, that's all the code we need.

Now hit Export -> Compile DSP networks as dll.

Assuming everything worked, you can now add a HardcodedMasterFX and load your pitch-shifter.

Notes / Thoughts:

- I haven't tested this extensively; there might be other Includes that cause problems depending on your HISE installation.

- There's about 30-50 ms of latency, which is effectively unavoidable: the Pitch Shifter needs a window of a certain size in order to perform its calculations.

- There might be other RubberBand::RubberBandStretcher::Option values that make it sound better for transients etc.; I haven't tested them yet.

- Make sure your Parameter doesn't go to 0, as the Library divides by it somewhere.
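Two of the notes above are easy to put into code. This is only a sketch under my own assumptions: safePitchRatio and latencyMsToSamples are hypothetical helper names, and the 0.5 / 2.0 bounds are simply the parameter range used in createParameters.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Keeps the ratio inside the FreqRatio range (0.5 to 2.0), so a zero or
// negative value can never reach the pitch shifter.
inline double safePitchRatio(double v)
{
    return std::max(0.5, std::min(2.0, v));
}

// Converts a latency in milliseconds to a whole number of samples.
inline int latencyMsToSamples(double ms, double sampleRate)
{
    assert(sampleRate > 0.0);
    return static_cast<int>(std::lround(ms * 0.001 * sampleRate));
}
```

For a sense of scale, 30-50 ms at 44100 Hz works out to roughly 1323-2205 samples. The clamp would go in setParameter, right before the value is passed to setPitchScale.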

Here's the full node. If you copy-paste it, make sure you rename it to match whatever you named your node, or HISE won't find it:

// ==================================| Third Party Node Template |==================================

/*

Don't forget, you have to replace every instance of Rectangle in HISE/hi_tools/simple_css/Renderer.h with juce::Rectangle

And rename both "copy" variables in that same file to rectangleCopy

Then build HISE with the HI_ENABLE_CUSTOM_NODES=1 preprocessor flag

*/

#pragma once

#include <JuceHeader.h>

#include "src/rubberband/single/RubberBandSingle.cpp"

#include "src/rubberband/rubberband/RubberBandStretcher.h"

namespace project

{

using namespace juce;

using namespace hise;

using namespace scriptnode;

// ==========================| The node class with all required callbacks |==========================

template <int NV> struct rubberband: public data::base

{

// Metadata Definitions ------------------------------------------------------------------------

SNEX_NODE(rubberband);

struct MetadataClass

{

SN_NODE_ID("rubberband");

};

// set to true if you want this node to have a modulation dragger

static constexpr bool isModNode() { return false; };

static constexpr bool isPolyphonic() { return NV > 1; };

// set to true if your node produces a tail

static constexpr bool hasTail() { return false; };

// set to true if your node doesn't generate sound from silence and can be suspended when the input signal is silent

static constexpr bool isSuspendedOnSilence() { return false; };

// Undefine this method if you want a dynamic channel count

static constexpr int getFixChannelAmount() { return 2; };

// Define the amount and types of external data slots you want to use

static constexpr int NumTables = 0;

static constexpr int NumSliderPacks = 0;

static constexpr int NumAudioFiles = 0;

static constexpr int NumFilters = 0;

static constexpr int NumDisplayBuffers = 0;

// declare a unique_ptr to store our shifter

std::unique_ptr<RubberBand::RubberBandStretcher> rb;

// Scriptnode Callbacks ------------------------------------------------------------------------

void prepare(PrepareSpecs specs)

{

// assign the shifter to our pointer

rb = std::make_unique<RubberBand::RubberBandStretcher> (specs.sampleRate, specs.numChannels, RubberBand::RubberBandStretcher::Option::OptionProcessRealTime);

rb->reset(); // not sure if this is necessary, but I don't think it hurts

}

void reset(){}

void handleHiseEvent(HiseEvent& e){}

template <typename T> void process(T& data)

{

if (!rb) return; // safety check in case the rb object isn't constructed yet

int numSamples = data.getNumSamples();

auto ptrs = data.getRawDataPointers(); // RB needs pointers

rb->process(ptrs, numSamples, false); // feed the input block to the shifter (false = not the final block)

int available = rb->available();

if (available >= numSamples)

{

// We have enough output, retrieve it directly

rb->retrieve(ptrs, numSamples);

}

}

template <typename T> void processFrame(T& data){}

int handleModulation(double& value)

{

return 0;

}

void setExternalData(const ExternalData& data, int index)

{

}

// Parameter Functions -------------------------------------------------------------------------

template <int P> void setParameter(double v)

{

if (P == 0)

{

if (!rb) return; // Safety check in case the Parameter is triggered before the rb object is constructed

rb->setPitchScale(v);

}

}

void createParameters(ParameterDataList& data)

{

{

// Create a parameter like this

parameter::data p("FreqRatio", { 0.5, 2.0 });

// The template parameter (<0>) will be forwarded to setParameter<P>()

registerCallback<0>(p);

p.setDefaultValue(1.0);

data.add(std::move(p));

}

}

};

}