The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm

-

@Christoph-Hart Nice, bitmasking is always such fun :)

-

@Christoph-Hart can't it set the filters automatically based on how many tokens we got? or are the consts just to demonstrate what's happening?

This is going over my head a bit (heh), my bit manipulation is very rusty.

I'm happy to leave the backend specifics to you, I agree that there shouldn't be a performance regression.

Does your filter idea play multiple stuff (like all the layers of a note) with a single event?

-

can't it set the filters automatically based on how many tokens we got?

As I said, we can make it easier to use after we get the main principle right. All that bit shuffling can be shoveled under the rug. My current suggestion would be to supply the sampler with a list of articulations and their properties and it does all the parsing & bit mangling on its own:

```javascript
// create a group manager that will do all this stuff so that Lindon doesn't need
// to calculate how many bits are required for 13 RR groups
const var gm = Sampler.createGroupManager([
{
    ID: "RR",                 // can be used in the UI to filter / display the samples
    LogicType: "RoundRobin",  // the logic type is a hint for the default behaviour
    IndexInFileTokens: 1,     // used by the parser to calculate the bit mask
    NumMaxGroups: 7,          // required for calculating the amount of bits needed
},
{
    ID: "XF",
    LogicType: "Crossfade",
    IndexInFileTokens: 2,
    NumMaxGroups: 4,
},
{
    ID: "PlayingStyle",
    LogicType: "Keyswitch",   // this is just one of a few new "modes" that we can supply
    IndexInFileTokens: 3,
    NumMaxGroups: 3,
}
]);

// Goes through all samples, calculates the bit masks and writes the result into
// the RRGroup index (does the parsing part for you)
gm.updateBitMask(Sampler.createSelection(".*"));
```

With this approach you don't even need to script anything on the playback side anymore (you still can, of course, for custom group logic, but the bases are covered). There is also more room for optimization: it can create a sample list for each keyswitch group, so it doesn't have to look through the entire sample array but can pre-filter by the current playing type.
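To make the "bit mangling" part a bit more concrete, here is a minimal sketch of how a bit layout could be derived from `NumMaxGroups`. It is plain JavaScript rather than HiseScript so it can run outside HISE, and `bitsFor`, `buildLayout` and `encode` are hypothetical helper names, not part of the proposed API. It also assumes zero-based group indices and that layers are packed from the lowest bit upwards, which may not match the real backend.

```javascript
// Hypothetical helper: bits needed to store the indices 0..numMaxGroups-1.
function bitsFor(numMaxGroups) {
  let bits = 1;
  while ((1 << bits) < numMaxGroups) bits++;
  return bits;
}

// Assign each layer a shift and a mask, packing from the lowest bit upwards.
function buildLayout(layers) {
  let shift = 0;
  return layers.map((layer) => {
    const bits = bitsFor(layer.NumMaxGroups);
    const entry = { ID: layer.ID, shift, bits, mask: ((1 << bits) - 1) << shift };
    shift += bits;
    return entry;
  });
}

// Pack one zero-based group index per layer into a single integer.
function encode(layout, indices) {
  return layout.reduce(
    (value, l) => value | ((indices[l.ID] << l.shift) & l.mask),
    0
  );
}

const layout = buildLayout([
  { ID: "RR", NumMaxGroups: 7 },
  { ID: "XF", NumMaxGroups: 4 },
  { ID: "PlayingStyle", NumMaxGroups: 3 },
]);

// RR uses bits 0-2, XF bits 3-4, PlayingStyle bits 5-6 (7 bits in total).
const bitmask = encode(layout, { RR: 2, XF: 1, PlayingStyle: 0 });
```

With the three layers from the example above, this layout needs 3 + 2 + 2 = 7 bits per sample.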

Does your filter idea play multiple stuff (like all the layers of a note) with a single event?

Everything that isn't masked out will be played, yes - in my example above, all the XFade layers pass the bit mask test and are flagged for playing.
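As a rough illustration of that "not masked out" test, here is a plain JavaScript sketch. The layout (RR in bits 0-2, XF in bits 3-4) and the `passes` function are assumptions made for the example, not the actual implementation.

```javascript
// Assumed layout for this sketch: RR index in bits 0-2, XF index in bits 3-4.
const RR_MASK = 0b00111;
const XF_SHIFT = 3;

// A sample passes if the bits covered by the filter mask equal the filter value.
// Bits outside the mask are ignored, so unfiltered layers always pass.
function passes(sampleBits, filterMask, filterValue) {
  return (sampleBits & filterMask) === filterValue;
}

const samples = [
  { name: "RR1_XF1", bits: (0 << XF_SHIFT) | 0 },
  { name: "RR1_XF2", bits: (1 << XF_SHIFT) | 0 },
  { name: "RR2_XF1", bits: (0 << XF_SHIFT) | 1 },
  { name: "RR2_XF2", bits: (1 << XF_SHIFT) | 1 },
];

// Filter only the RR layer to index 1 ("RR2"). The XF bits are not part of
// the mask, so both XF layers of that round robin are flagged for playing.
const playing = samples
  .filter((s) => passes(s.bits, RR_MASK, 1))
  .map((s) => s.name);
```

Here `playing` ends up containing both `RR2_XF1` and `RR2_XF2`, which is the "all XFade layers from a single event" behaviour described above.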

-

Edit: I just noticed you said you'd keep the basic note/vel mapping. I'll boil my question down to "Does this mean XFade groups would be added as an axis separate from RRs, and will I still be able to use Enable MIDI Selection?"

Original novel:

This looks like a dream come true as someone traumatized by too many huge orchestral libraries done in Kontakt.

I'm curious how you see it interacting with the existing controls. Is the idea that in this mode, samples could only be played back with Sampler.addGroupFilter(), or would assigning a sample a note and rr value still put it in the mapping editor? The xfades would need a way to be edited, were you thinking to move the existing group xfade controls out of the RR groups? Or would it all be managed by script?

The library I'm working on would not have been possible without HISE's "Enable MIDI selection", which let me adjust volume, pitch, start, and end of my legato samples to match them to the sustains. In Kontakt, without combined mics, it's almost impossible to do, though Jasper Blunk masochistically manages it with table modulators and force of will. Spitfire and OT probably do it with their dedicated (proprietary) players. Point is this has always been out of reach for me.

The downside was I needed to use RRs for variants -- vowels in this case. Crossfaded dynamics got split out into separate samplers and controlled with a global modulator.

If "XFade" is added as a native concept, and if it were added as a separate axis, similar to how RRs are now, with a dropdown to filter for a given xfade layer in the mapping editor, that "Enable MIDI selection" workflow would still be possible.

I'm biased, as almost all libraries I've worked on have multiple arts, some with rrs, some with xfaded dynamic levels, some with both. I think supporting keyswitching natively is maybe overkill, as separate samplers for separate articulations is logical and works fine, but maaaaybe it's worth natively supporting crossfaded dynamic levels in the UI?

-

or would assigning a sample a note and rr value still put it in the mapping editor?

Yup, Samples are still mapped in a 3+ dimensional space. Currently it's X = note, Y = Velocity, Z = RRGroup. With the new system it's X = Note, Y = Velocity, Z = BITMASK!!! and this bitmask can handle multiple dimensions of organisation (keyswitches, articulation types, RR, whatever you need).

The library I'm working on would not have been possible without HISE's "Enable MIDI selection", which let me adjust volume, pitch, start, and end of my legato samples to match them to the sustains.

This should still be possible, it just selects what was played back most recently. If you now have multiple types in one sampler (e.g. sustains and legatos), it might be a little bit tricky, but we could then also apply the bitmask filter to what is displayed.

-

@Christoph-Hart In your example samples get assigned both an RR and a group XFade, were you planning to add separate controls to manage and filter group xfades?

-

@Christoph-Hart said in The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm:

or would assigning a sample a note and rr value still put it in the mapping editor?

Yup, Samples are still mapped in a 3+ dimensional space. Currently it's X = note, Y = Velocity, Z = RRGroup. With the new system it's X = Note, Y = Velocity, Z = BITMASK!!! and this bitmask can handle multiple dimensions of organisation (keyswitches, articulation types, RR, whatever you need).

The library I'm working on would not have been possible without HISE's "Enable MIDI selection", which let me adjust volume, pitch, start, and end of my legato samples to match them to the sustains.

This should still be possible, it just selects what was played back most recently. If you now have multiple types in one sampler (e.g. sustains and legatos), it might be a little bit tricky, but we could then also apply the bitmask filter to what is displayed.

OK, well, I'm pretty much in - where do I sign?

Just for my clarity tho - we now "map" samples into the 3d space by using the 2D (velocity x note) space in the editor - and we use the name of the wav file to assign it in the n-dimension Bitmask - so some set of tokens like:

InstrumentName_Velocity_NoteNum_MicPosition_RRGroup_XFadeGroup.wav

Where our bitmasking system ignores the velocity, note num, and mic position tokens, because we've defined a set (array) of strings that define what will appear for each position in our bitmask, e.g. round robins:

const var RR_GROUPS = ["RR1", "RR2", "RR3", "RR4", "RR5", "RR6", "RR7"];

xfades:

const var XF_GROUPS = ["XF1", "XF2", "XF3", "XF4" ];

Is this right?
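For illustration, the token scheme described here could be parsed along these lines. This is a plain JavaScript sketch, the `parseGroups` helper and the example file name are made up, and the token positions (RR at index 4, XF at index 5) simply follow the file name pattern above.

```javascript
const RR_GROUPS = ["RR1", "RR2", "RR3", "RR4", "RR5", "RR6", "RR7"];
const XF_GROUPS = ["XF1", "XF2", "XF3", "XF4"];

// Pattern: InstrumentName_Velocity_NoteNum_MicPosition_RRGroup_XFadeGroup.wav
// Only the RR and XF tokens feed the bitmask; the other tokens are ignored here.
function parseGroups(fileName) {
  const tokens = fileName.replace(/\.wav$/i, "").split("_");
  const rr = RR_GROUPS.indexOf(tokens[4]);
  const xf = XF_GROUPS.indexOf(tokens[5]);

  if (rr < 0 || xf < 0) {
    throw new Error("Unknown group token in " + fileName);
  }

  return { rr, xf }; // zero-based group indices
}

const groups = parseGroups("Violin_127_60_Close_RR3_XF2.wav");
```

In this sketch `groups` holds the zero-based indices `{ rr: 2, xf: 1 }`, which would then be packed into the sample's bitmask.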

In fact, wouldn't it be much nicer if we were not declaring these token arrays every time we loaded a new sample map - and we moved them into the samplemap XML itself?

Or is that what this thing is for?

ID: "XF", LogicType: "Crossfade", IndexInFileTokens: 2, NumMaxGroups: 4,

-

@Christoph-Hart said in The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm:

Everything that isn't masked out will be played, yes - in my example above, all the XFade layers pass the bit mask test and are flagged for playing.

Can we filter it further, play a note, then filter again within the same callback? The idea in the original post is to make use of the eventData concept to allow per-sample modulation.

Or is the thing that we now use the good old note x vel 2d map to choose the individual samples?

-

Is this right?

Yes.

Can we filter it further, play a note, then filter again within the same callback?

You can always apply your own filters to filter out a layer. I'm currently writing the data container, it will all clear up soon.

-

@Christoph-Hart said in The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm:

Is this right?

Yes.

In fact, wouldn't it be much nicer if we were not declaring these token arrays every time we loaded a new sample map - and we moved them into the samplemap XML itself?

-

@Lindon said in The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm:

In fact, wouldn't it be much nicer if we were not declaring these token arrays every time we loaded a new sample map - and we moved them into the samplemap XML itself?

sure, if the layout changes between samplemaps then this shouldn't be an issue.

-

@Christoph-Hart I'm trying to work out in my head (and let's be frank, there's not much room left in there)... how I might use this to manage True Legato - so that's start note fades to transitioning sound fades to target note.

If it doesn't obviously support this in a simple way, maybe we can think about how a 21st century sampler would manage that...

-

@Lindon I'm working on exactly that use case right now. I just need to work out how to make it efficient in the backend.

-

I don't see this particularly affecting legato. I would still put sustains in one sampler and legato samples in another, which makes it easier to adjust envelopes and manage the sample maps.

The biggest change for me here is being able to use dynamic xfades and round robins simultaneously in one sampler.

-

@Simon having legatos in the same sampler does bring some benefits (e.g. automatic gain matching, like with the release start, and zero-cross aligning the start for less phasing during the fade).

In the end it's just another filter with 128 options - the legato sample will be mapped to the target note and this filter is set to the source note.
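A minimal sketch of that idea in plain JavaScript: 128 options fit into 7 bits, so the source note can be treated as one more masked field. The sample records and the `findLegato` helper are hypothetical and only meant to show the lookup.

```javascript
// 128 possible source notes fit into 7 bits.
const SOURCE_NOTE_BITS = 7;
const SOURCE_NOTE_MASK = (1 << SOURCE_NOTE_BITS) - 1; // 0x7F

// Each legato sample is mapped to its target note and tagged with its source note.
const legatoSamples = [
  { file: "leg_60_to_62", targetNote: 62, sourceBits: 60 },
  { file: "leg_64_to_62", targetNote: 62, sourceBits: 64 },
  { file: "leg_60_to_64", targetNote: 64, sourceBits: 60 },
];

// On a legato transition: the incoming note picks the mapping position,
// the previously held note is set as the filter value.
function findLegato(samples, sourceNote, targetNote) {
  return samples.filter(
    (s) =>
      s.targetNote === targetNote &&
      (s.sourceBits & SOURCE_NOTE_MASK) === sourceNote
  );
}

const matches = findLegato(legatoSamples, 60, 62);
```

Playing note 62 while note 60 is held selects only `leg_60_to_62` in this sketch.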

-

@Christoph-Hart For automatic gain matching, I'll gladly put all my legatos in the same sampler :)

-

@Christoph-Hart You've probably thought of this already, but just in case:

Ideally the legatos would be matched to the sustains, not the other way around. The start of the legato sample should be matched to the end of the source sustain, and the end of the legato sample should be matched to the start of the target sustain.

An envelope seems simplest to me, or a compressor/expander. In any case:

- Apply a gain offset to the legato sample, to match it to the source sustain

- Apply an envelope to the legato sample, to match the end of the legato sample to the target sustain

The envelope attack time and curve might be worth exposing as options, or maybe a fixed curve is enough.
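To illustrate the two steps, here is a small plain JavaScript sketch. It assumes the relevant levels have already been measured in dB (how they are measured is left open), uses a fixed linear-in-dB curve, and `legatoGainDb` as well as the example levels are made up for the illustration.

```javascript
// levels: measured levels in dB (assumed to be available from some analysis step)
// t: normalised position within the legato sample, 0 = start, 1 = end
function legatoGainDb(levels, t) {
  // Step 1: static offset so the legato start matches the end of the source sustain.
  const startOffset = levels.sourceSustainDb - levels.legatoStartDb;

  // Step 2: envelope so the legato end matches the start of the target sustain.
  const endOffset = levels.targetSustainDb - levels.legatoEndDb;

  const x = Math.min(1, Math.max(0, t));
  return startOffset + (endOffset - startOffset) * x; // fixed linear curve
}

// Convert a dB value to a linear gain factor.
function dbToGain(db) {
  return Math.pow(10, db / 20);
}

const levels = {
  sourceSustainDb: -12,
  legatoStartDb: -18,
  targetSustainDb: -10,
  legatoEndDb: -15,
};

const gainAtStart = legatoGainDb(levels, 0); // +6 dB
const gainAtEnd = legatoGainDb(levels, 1);   // +5 dB
```

An exposed attack time or curve shape would replace the linear interpolation in the last line of `legatoGainDb`.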

I have spent a lot of time working on legatos and have equally many opinions on them, so I am very excited to see what you are working on.

-

@Simon said in The Sample Map of The Future: Escaping the 20th Century Sample Mapping Paradigm:

The biggest change for me here is being able to use dynamic xfades and round robins simultaneously in one sampler.

Already possible, see Lindon's recent thread

-

@d-healey I know you technically CAN, but I mean in a way that is easy to work with, and doesn't require using RR groups for two different purposes.

-

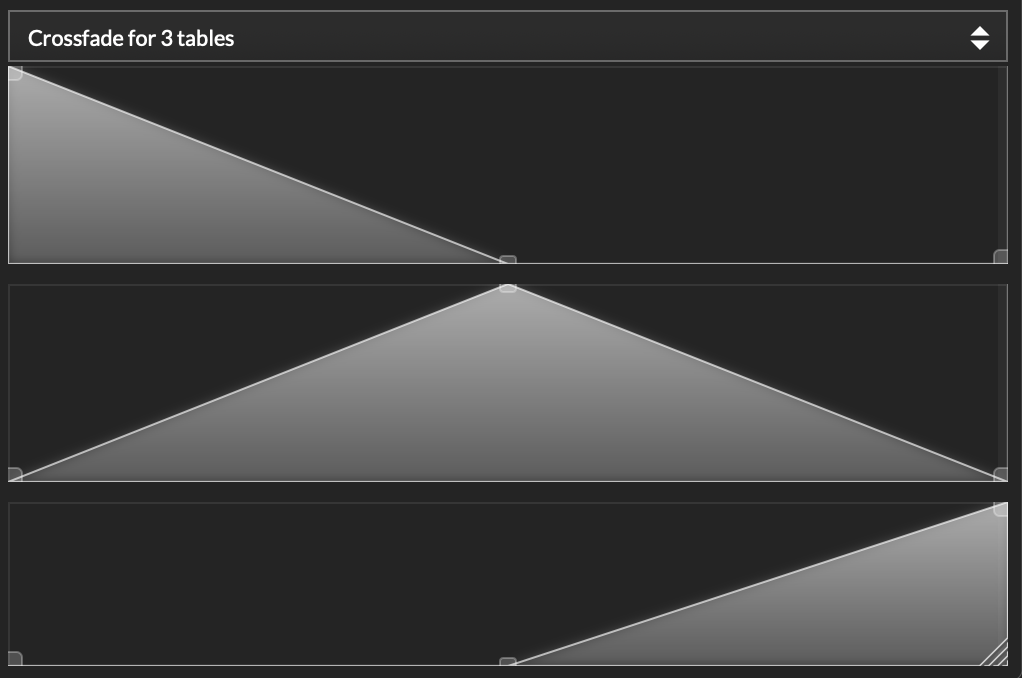

Quick survey: how many of you who are using the Crossfade feature actually use the tables for customizing the crossfade curves? I mean changing this

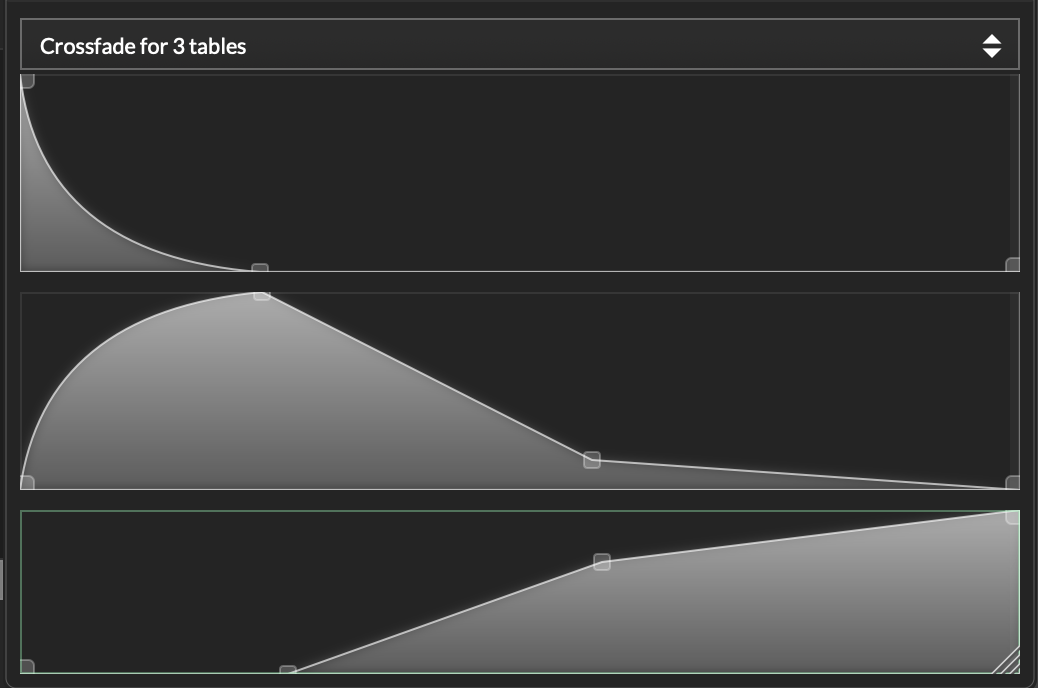

to something like this:

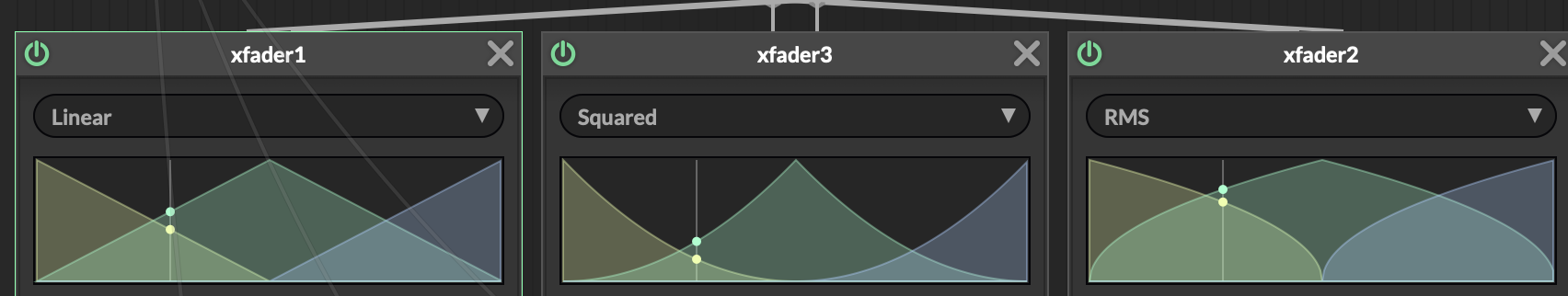

The reason I ask is that now that we have that nice xfader node available in scriptnode, we can just use its algorithms to replace the table curves, which makes it much faster to calculate and easier to implement / maintain (I'll include a few fade types which should cover most use cases).

I'll keep the tables around in the old system for backwards compatibility of course, but not having to drag this tech debt to the new system would be nice.
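For anyone wondering what formula-based fades look like compared to table lookups, here is a generic plain JavaScript sketch of two common curves. These are textbook linear and equal-power fades, not necessarily the algorithms used by the xfader node, and the function names are made up.

```javascript
// x: crossfade position between two adjacent layers, 0 = only A, 1 = only B.
// Each function returns [gainA, gainB].

// Linear fade: the gains always sum to 1.
function linearFade(x) {
  return [1 - x, x];
}

// Equal-power fade: the squared gains always sum to 1,
// which avoids the perceived level dip in the middle.
function equalPowerFade(x) {
  const angle = x * Math.PI * 0.5;
  return [Math.cos(angle), Math.sin(angle)];
}

const [linA, linB] = linearFade(0.5);
const [powA, powB] = equalPowerFade(0.5);
```

Both are a couple of arithmetic operations per value, so there is no table to store, interpolate or serialise.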