Help me understand Waveshaping

-

Clipping is waveshaping.

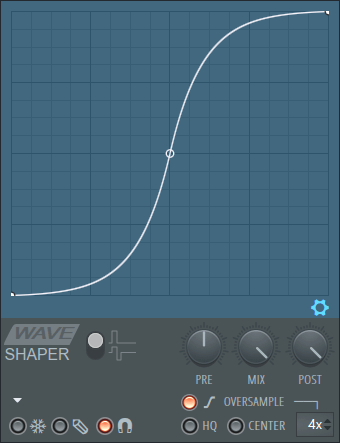

Waveshaping is taking an input sample and mapping it to an output sample.
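Since clipping is waveshaping, a hard clipper is the simplest example of a sample-in, sample-out mapping. Here's a minimal sketch in Python. The `hard_clip` name and the 0.5 threshold are illustrative assumptions, not anything taken from HISE:

```python
def hard_clip(x: float, threshold: float = 0.5) -> float:
    """Waveshaper: map one input sample to one output sample.

    Anything above +threshold or below -threshold is flattened to the
    threshold; everything in between passes through unchanged.
    """
    if x > threshold:
        return threshold
    if x < -threshold:
        return -threshold
    return x


# Each input sample is mapped independently to an output sample.
for sample in (-0.9, -0.25, 0.0, 0.25, 0.75):
    print(f"{sample:+.2f} -> {hard_clip(sample):+.2f}")
```

Here 0.75 comes out as 0.5, while 0.25 passes through untouched.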

Audio samples make up waveforms.

Samples range from -1 to 1, and an audio file is an array of these numbers. A sine wave, for example, starts at 0, rises to 1, falls back through 0 to -1, returns to 0, and repeats.

Waveshaping basically looks at the current sample (say it is 0.75), maps it to a different value, and outputs that value instead.
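To make "an array of numbers, each mapped to a new value" concrete, the sketch below builds one cycle of a sine as a plain list and runs every sample through a shaping function. The cubic curve `1.5x - 0.5x^3` is just one illustrative choice of smooth shaper. The helper names are hypothetical:

```python
import math
from typing import Callable, List


def make_sine(freq_hz: float, sample_rate: int, num_samples: int) -> List[float]:
    """One channel of audio as a plain list of floats in [-1, 1]."""
    return [math.sin(2.0 * math.pi * freq_hz * n / sample_rate) for n in range(num_samples)]


def cubic_soft_clip(x: float) -> float:
    """Illustrative shaper: y = 1.5x - 0.5x^3, with the input clamped to [-1, 1]."""
    x = max(-1.0, min(1.0, x))
    return 1.5 * x - 0.5 * x * x * x


def waveshape(buffer: List[float], shaper: Callable[[float], float]) -> List[float]:
    """Apply the shaper to every sample; each output depends only on its own input."""
    return [shaper(x) for x in buffer]


# 480 samples of a 100 Hz sine at 48 kHz is exactly one cycle.
sine = make_sine(freq_hz=100.0, sample_rate=48000, num_samples=480)
shaped = waveshape(sine, cubic_soft_clip)

print(cubic_soft_clip(0.75))  # prints 0.9140625
```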

This is raw digital distortion of the wave. You take a wave shape and transform each point so that you end up with a different wave. Continuous functions are often used so that you get a smooth transformation.
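To see why smooth continuous functions are preferred, you can compare the slope of a hard clipper with the slope of a tanh curve around the clip point. This is a sketch under assumptions: tanh, a drive of 3.0, and a 0.5 threshold are common illustrative choices, not something prescribed here:

```python
import math


def hard_clip(x: float, threshold: float = 0.5) -> float:
    """Corner at +/-threshold: the curve's slope jumps from 1 to 0 there."""
    return max(-threshold, min(threshold, x))


def tanh_shaper(x: float, drive: float = 3.0) -> float:
    """Smooth saturation; dividing by tanh(drive) keeps an input of 1.0 at 1.0."""
    return math.tanh(drive * x) / math.tanh(drive)


def slope(f, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of the transfer curve's slope at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Slope just below and just above 0.5 for each curve.
for name, f in (("hard_clip", hard_clip), ("tanh_shaper", tanh_shaper)):
    print(name, round(slope(f, 0.49), 3), round(slope(f, 0.51), 3))
```

The hard clipper's slope drops abruptly from 1 to 0, while the tanh curve's slope changes only slightly. Sharp corners like the first one tend to produce stronger high-order harmonics.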

Waveshaping is nonlinear but memoryless: it is static, and on its own it will generally not sound like a guitar amp.

That's because analog amps are not just nonlinear. They also have memory, so their effect depends on past audio rather than only on a transformation of the current sample.
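Here's a toy contrast between "memoryless" and "depends on past audio". The static shaper gives the same output for an input of 0.5 every time. The hypothetical `ToyStatefulDrive` class carries an envelope from sample to sample, so the same 0.5 input produces a different output depending on what came before. It only illustrates the idea of memory and is not a model of any real amp circuit:

```python
import math


def static_shaper(x: float) -> float:
    """Memoryless: output depends only on the current sample."""
    return math.tanh(2.0 * x)


class ToyStatefulDrive:
    """Hypothetical toy processor with memory (not an amp model).

    A one-pole envelope follower tracks recent signal level and shifts the
    shaper's operating point, so the input-to-output mapping changes over time.
    """

    def __init__(self, smoothing: float = 0.99, bias_amount: float = 0.3):
        self.smoothing = smoothing
        self.bias_amount = bias_amount
        self.env = 0.0  # state carried between samples

    def process(self, x: float) -> float:
        # One-pole smoothing of |x|: a running estimate of recent level.
        self.env = self.smoothing * self.env + (1.0 - self.smoothing) * abs(x)
        # The bias follows the recent level, so history changes the output.
        return math.tanh(2.0 * (x - self.bias_amount * self.env))


quiet_history = [0.05] * 1000 + [0.5]
loud_history = [0.9] * 1000 + [0.5]

quiet_proc, loud_proc = ToyStatefulDrive(), ToyStatefulDrive()
out_quiet = [quiet_proc.process(x) for x in quiet_history][-1]
out_loud = [loud_proc.process(x) for x in loud_history][-1]

print("static shaper, input 0.5:", static_shaper(0.5))
print("stateful, input 0.5 after quiet audio:", out_quiet)
print("stateful, input 0.5 after loud audio:", out_loud)
```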

-

@griffinboy That is one of the best and easiest to understand descriptions of waveshaping I have read. Well done!

-

@griffinboy thanks for the explanation! I will probably look to the RTNeural implementation once it seems like there's a known pipeline.

For now I just want to learn some stuff. Maybe I'll try out different ways of modulating the waveshape based on some kind of analysis. RMS maybe?
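One way to try the RMS idea is to measure RMS per block and use it to set the drive of a tanh shaper. Everything in this sketch is a hypothetical starting point rather than an established design: the block size, the drive range, and the choice that quieter blocks get more drive:

```python
import math
from typing import List


def block_rms(block: List[float]) -> float:
    """Root-mean-square level of a block of samples (0.0 for an empty block)."""
    if not block:
        return 0.0
    return math.sqrt(sum(x * x for x in block) / len(block))


def rms_modulated_shaper(buffer: List[float], block_size: int = 64,
                         min_drive: float = 1.0, max_drive: float = 8.0) -> List[float]:
    """Hypothetical scheme: the RMS of each block sets the tanh drive for that block.

    Quiet blocks get close to max_drive, full-scale blocks get min_drive.
    """
    out: List[float] = []
    for start in range(0, len(buffer), block_size):
        block = buffer[start:start + block_size]
        level = min(1.0, block_rms(block))
        drive = max_drive - (max_drive - min_drive) * level
        out.extend(math.tanh(drive * x) / math.tanh(drive) for x in block)
    return out


quiet = [0.1] * 128
print(rms_modulated_shaper(quiet)[0])  # a 0.1 input is pushed much higher
```

Once the drive follows a level measurement, the effect is no longer memoryless, because its output depends on recent audio. Changing the drive in steps at block boundaries can also cause audible zipper noise, so it is worth smoothing the drive value between blocks.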

-

@griffinboy Second question, I guess. Is this all affected by the sample rate? -1/1 at 24-bit has a much tighter range than -1/1 at 32-bit float, right?

-

@ccbl said in Help me understand Waveshaping:

@griffinboy Second question, I guess. Is this all affected by the sample rate? -1/1 at 24-bit has a much tighter range than -1/1 at 32-bit float, right?

The range is exactly the same (-1 to 1) no matter what the bit depth or sample rate. (A 32-bit float can also hold values beyond ±1 without clipping, but full scale is still ±1.)

Bit depth defines the size of the number used to represent each sample: 32-bit is a longer number than 24-bit, so it provides finer granularity. Sample rate just defines how often the signal is sampled (samples per second).
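Here's a small sketch of the bit-depth point. It assumes the common convention that signed integer PCM is scaled by 2**(bits - 1) to reach the -1..1 range. Both formats cover the same range, and only the step size differs. The `struct` round trip shows that a 32-bit float can also hold a value like 1.5, which integer PCM would clip. Sample rate doesn't appear anywhere because it only controls how many of these values you get per second. The function names are hypothetical:

```python
import struct


def quantize_pcm(x: float, bits: int) -> float:
    """Round x to the nearest value signed integer PCM of `bits` can store,
    expressed back on the -1..1 scale."""
    full_scale = 2 ** (bits - 1)
    code = round(x * full_scale)
    code = max(-full_scale, min(full_scale - 1, code))  # integer PCM clips here
    return code / full_scale


def to_float32(x: float) -> float:
    """Round-trip x through a 32-bit IEEE 754 float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


for bits in (16, 24):
    step = 1.0 / 2 ** (bits - 1)
    print(f"{bits}-bit step size: {step:.3e}, 0.3 stored as {quantize_pcm(0.3, bits)!r}")

print("24-bit PCM, input 1.5 ->", quantize_pcm(1.5, 24))  # clamped just below 1.0
print("32-bit float, input 1.5 ->", to_float32(1.5))      # kept as 1.5
```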

-

Yeah, so RTNeural will be the 'easiest' way to create an analog-style distortion, in terms of the amount of physics you will need to learn.

I've not yet messed with machine learning myself, but I can tell you that making a white-box model will mean reading endless papers on maths, electronics and physics.

-

@griffinboy Ha ha, yeah. To be honest, I wasn't expecting to make some UAD-level simulation, just to learn the basics of HISE a bit more.

I'm already very familiar with AI, though, through making a lot of NAM profiles and being one of the co-founders of ToneHunt.

Really looking forward to implementing quite a few ideas once that process is ironed out.

-

@orange sweet, will check this out.

-

@ccbl Chase the best sound, not the technology.

Currently, the best way to simulate amplifiers is with nonlinear impulse responses.

But a single very-high-quality linear impulse response (as used in HISE) sounds as good as the best amplifiers recorded by the best engineers.
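For context on what a linear IR does, applying one is convolution: each output sample is a weighted sum of the current and past input samples. This is a direct-form sketch. The three-tap `ir` is a made-up placeholder rather than a real cabinet capture, and practical IR loaders generally use FFT-based convolution for speed:

```python
from typing import List


def convolve(signal: List[float], ir: List[float]) -> List[float]:
    """Direct-form linear convolution: y[n] = sum over k of ir[k] * signal[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(ir):
            out[n + k] += x * h
    return out


ir = [1.0, 0.5, 0.25]  # hypothetical placeholder IR

print(convolve([1.0, 0.0, 0.0], ir))  # an impulse in gives the IR back out
print(convolve([0.5, -0.25], ir))
print(convolve([1.0, -0.5], ir))      # doubled input gives exactly doubled output
```

Because convolution is linear, doubling the input exactly doubles the output. A single linear IR therefore has the same character at every input level.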

How it sounds is far more important than the underlying technology.

I would suggest investing in hiring a world-class guitar audio engineer to create conventional IRs; put your resources there. (I can recommend someone if interested, but you gotta be serious.)

-

@clevername27 I appreciate your sentiment, but I'm just a guy with a normal day job with an interest in plugins. Not looking to make any money here, just releasing my work for free as and when inspiration strikes.