Is blending between impulse responses possible?

-

@Orvillain You can load different convolution units and do it that way, but that's of course not what you mean.

It would for sure be cool if you could pass a list of audio file references to the convolution reverb and have it recalculate an IR based on the gains that you set.
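Convolution is linear, so "recalculating an IR based on the gains" amounts to a gain-weighted sum of the source IRs. Convolving with that sum gives the same output as mixing several separate convolutions with the same gains. Here is a minimal Python sketch. It assumes the IRs are already loaded as lists of samples at the same sample rate. `blend_irs` and `convolve` are hypothetical helpers for illustration and are not part of HISE's convolution reverb:

```python
def blend_irs(irs, gains):
    """Gain-weighted sum of several IRs (hypothetical helper).

    Shorter IRs are treated as if zero-padded to the longest IR's length.
    """
    if len(irs) != len(gains):
        raise ValueError("need exactly one gain per IR")
    out = [0.0] * max(len(ir) for ir in irs)
    for ir, gain in zip(irs, gains):
        for i, sample in enumerate(ir):
            out[i] += gain * sample
    return out


def convolve(x, h):
    """Direct-form linear convolution: far too slow for real use, fine for a check."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y
```

The trade-off is this. A pre-blended IR costs only one convolution, but every gain change means recomputing the IR and reloading it. Separate convolutions plus a crossfade can move in real time, at the cost of running every convolution at once.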

It's also missing one important feature: IR size.

Compared to Kontakt, it's also missing the ability to split the IR into stages in the time domain with a draggable vertical line, and have different size/lowcut/highcut for different stages (e.g. Early/Late).
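The sketch below shows the general idea of splitting an IR at a chosen sample into early and late stages and processing each stage separately. It is not a description of how Kontakt or HISE do it. It assumes a plain list of samples, applies per-stage gain plus a one-pole low-pass as the "highcut" on the late stage, and does not attempt the size parameter. `split_and_process` and `one_pole_lowpass` are hypothetical names:

```python
import math


def one_pole_lowpass(signal, cutoff_hz, sample_rate):
    """One-pole low-pass ('highcut'): y[n] = (1 - a) * x[n] + a * y[n - 1]."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, state = [], 0.0
    for sample in signal:
        state = (1.0 - a) * sample + a * state
        out.append(state)
    return out


def split_and_process(ir, split_index, early_gain, late_gain,
                      late_highcut_hz, sample_rate):
    """Split an IR at split_index (the 'draggable line') into early/late
    stages, process each stage independently, then join them back together."""
    early = [s * early_gain for s in ir[:split_index]]
    late = [s * late_gain for s in ir[split_index:]]
    # The filter starts from silence at the split point, which softens the
    # first late-stage samples; a smoother version might crossfade at the split.
    late = one_pole_lowpass(late, late_highcut_hz, sample_rate)
    return early + late
```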

-

@aaronventure said in Is blending between impulse responses possible?:

> It's also missing one important feature: IR size.
>
> Compared to Kontakt, it's also missing the ability to split the IR into stages in the time domain with a draggable vertical line, and have different size/lowcut/highcut for different stages (e.g. Early/Late).

This will be so great :)

-

@Orvillain I understand the interest and promise here, but an easier solution may be to reevaluate whether that is desirable.

- IRs aren't well-suited for that. You can use a bunch of tricks to force them into that box, but it's not an effective use of them.
- Morphing IRs can take significant processing power.
- The only people who could really tell how realistic your simulation is will probably not be using your (or any) plugin to do it.
- Don't give the user too many options; it's paralysing. Offer a limited number of options that are all musically useful and flexible enough for many conditions.

All that being said, it's certainly possible within the HISE framework, as it's extensible. The newest version of my plugin does what you're describing. But for a commercial plugin, I don't see a net positive.

If you want to do something cool with IRs, I'd suggest implementing non-linear IRs (or a variation thereof). For example, your convolution model can respond differently based on amplitude. Morphing can simulate this, but the real deal can be implemented more realistically with a single convolution model. Kemper does this to better model guitar workflows. I use it in my plugin to model drums and cymbals. It's a win for users because there's nothing for them to adjust; it just works. The results are audible. However, the processor usage can be extreme (mine certainly is). I'm oversimplifying here, but I'm happy to answer some questions about this.
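The poster doesn't say how their non-linear model works. The sketch below shows only the simplest textbook form of the idea, sometimes called dynamic convolution: each input sample is convolved with an IR chosen by that sample's level. It is hypothetical throughout (`dynamic_convolve`, two levels, hard switching, no interpolation). A usable version would need many more level steps and smoothing between them, which is part of why the CPU cost climbs so quickly:

```python
import bisect


def dynamic_convolve(x, irs, thresholds):
    """Amplitude-dependent convolution sketch.

    irs[i] is used for input samples whose magnitude falls in band i, where
    the bands are split by the ascending `thresholds` list, so
    len(irs) must equal len(thresholds) + 1.
    """
    if len(irs) != len(thresholds) + 1:
        raise ValueError("need exactly one more IR than thresholds")
    y = [0.0] * (len(x) + max(len(h) for h in irs) - 1)
    for n, sample in enumerate(x):
        # Pick the IR for this sample's level, then add its scaled copy.
        h = irs[bisect.bisect_right(thresholds, abs(sample))]
        for k, hk in enumerate(h):
            y[n + k] += sample * hk
    return y
```

With a "soft" IR `[1.0, 0.0]` below a threshold of 0.5 and a "loud" IR `[1.0, 0.5]` above it, an input of 0.4 yields `[0.4, 0.0]` but an input of 0.8 yields `[0.8, 0.4]`. Doubling the input does not simply double the output, which is the non-linearity the post describes.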

As always, just my $0.02.

-

@Orvillain oh I just realised you meant realtime morphing.

In that case yeah, just load two convolution units in ScriptNode, add a crossfade node and do with it what you will.

You will almost certainly run into some phasing issues. Getting a sound for a position between A and B isn't as simple as mixing A and B equally; you're just doubling the number of reflections. Most of the reflections are different, because spaces aren't linear, so some sort of "morph" that interpolates "reflections" (assuming your software could even detect individual reflections in an IR) is pointless.
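Here is a toy illustration of the "duplicated reflections" point. A mic halfway between two positions might hear one reflection at some in-between time, but a crossfade of the two IRs gives every reflection from both, each at reduced level. The IRs below are made-up sparse lists where each non-zero tap stands in for one reflection. `crossfade` and `reflection_times` are hypothetical helpers, and real IRs are far denser than this:

```python
def crossfade(ir_a, ir_b, t):
    """Linear crossfade between two equal-length IRs: t=0 gives A, t=1 gives B."""
    if len(ir_a) != len(ir_b):
        raise ValueError("IRs must have the same length")
    return [(1.0 - t) * a + t * b for a, b in zip(ir_a, ir_b)]


def reflection_times(ir, threshold=1e-9):
    """Sample indices of taps above threshold (toy stand-in for 'reflections')."""
    return [i for i, s in enumerate(ir) if abs(s) > threshold]


# Position A: direct sound plus a reflection at sample 10.
# Position B: direct sound plus a reflection at sample 14.
ir_a = [0.0] * 20
ir_b = [0.0] * 20
ir_a[0], ir_a[10] = 1.0, 0.6
ir_b[0], ir_b[14] = 1.0, 0.6

halfway = crossfade(ir_a, ir_b, 0.5)
print(reflection_times(halfway))  # -> [0, 10, 14]
```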

-

I was thinking something along these lines:

https://youtu.be/ndRMtqJLNWg

As I understand it, these "drag a microphone across the front of a speaker graphic" interfaces are pretty much just a nicer way to browse a list of IRs. I think they're interpolating across a set of IRs that have been named properly so they can be laid out in a grid-like fashion, to represent a real speaker+microphone experience. I think they use the Dynamount devices to capture the IRs.

This is the kind of thing I was thinking of building. As far as I know, they phase-align the IRs to ensure that all positions are equally "usable". If you listen to some of the audio there, you can hear that it isn't exactly true to life, but it's good enough in most cases.
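One simple way to phase-align IRs before crossfading is to find the integer-sample lag that maximises their cross-correlation, then shift one IR by that lag. This is a hedged sketch only. We don't know what alignment method the product in the video actually uses. The sketch ignores sub-sample offsets and frequency-dependent phase differences, and `best_lag` and `shift` are hypothetical helpers:

```python
def best_lag(ref, other, max_lag):
    """Integer lag (samples) maximising sum(ref[i] * other[i + lag]).

    A positive result means `other` arrives later than `ref`.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(r * other[i + lag]
                    for i, r in enumerate(ref)
                    if 0 <= i + lag < len(other))
        if score > best_score:
            best, best_score = lag, score
    return best


def shift(ir, lag):
    """Move an IR earlier by `lag` samples (later if lag < 0), keeping its length."""
    n = len(ir)
    if lag >= 0:
        return ir[lag:] + [0.0] * min(lag, n)
    return [0.0] * min(-lag, n) + ir[:max(n + lag, 0)]


ref = [0.0, 0.0, 1.0, 0.5, 0.2, 0.0, 0.0, 0.0]
late = [0.0, 0.0, 0.0, 0.0, 1.0, 0.5, 0.2, 0.0]  # same shape, 2 samples late
lag = best_lag(ref, late, max_lag=4)
aligned = shift(late, lag)
print(lag, aligned == ref)  # -> 2 True
```

Once every IR in the grid has been shifted to line up with a reference, crossfading between neighbours causes far less comb filtering than crossfading the raw captures.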

-

@Orvillain in that case yeah, pretty sure that's what it is.

they're not interpolating, they're just crossfading. phase alignment is key as well.

true to life doesn't matter, it's a bigfoot term. @clevername27 is right that it's not worth chasing.

@clevername27 said in Is blending between impulse responses possible?:

> The only people who could really tell how realistic your simulation is will probably not be using your (or any) plugin to do it.

It doesn't have to be realistic. It just needs to be believable, i.e. sound good.

-

@aaronventure Yes, agreed. So I guess the question is: how many IRs is it realistic to load up in HISE at once? Convolution is quite expensive, isn't it?

-

@Orvillain The CPU load scales (almost) linearly with IR length, so it all depends on that. Infinite Brass in Kontakt works with up to 3x convolution; the IRs of the biggest room are 3 seconds long or so (for an RT60 of around 1.5s).

In late 2018 when it launched, new CPUs could easily take it all on at low buffer sizes. Today, CPUs are almost 3x as fast.
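The back-of-the-envelope estimate below shows why the load tracks IR length. It assumes uniformly partitioned FFT convolution (overlap-save). That scheme is an assumption for illustration: this thread doesn't say which partitioning HISE or Kontakt use, and non-uniform schemes change the constants. Per audio block, the engine multiplies one input spectrum against every IR partition, so the work grows with the partition count, i.e. with IR length. The 48 kHz rate and 256-sample block are also assumptions, and `partition_count` and `spectral_macs_per_second` are hypothetical helpers:

```python
import math


def partition_count(ir_seconds, sample_rate, block_size):
    """Number of block_size-sample partitions needed to cover the IR."""
    return math.ceil(ir_seconds * sample_rate / block_size)


def spectral_macs_per_second(ir_seconds, sample_rate, block_size):
    """Complex multiply-accumulates per second for one channel of uniformly
    partitioned overlap-save convolution. The two FFTs per block are ignored;
    for long IRs the per-partition spectral products dominate."""
    partitions = partition_count(ir_seconds, sample_rate, block_size)
    bins = block_size + 1  # real FFT of size 2 * block_size
    blocks_per_second = sample_rate / block_size
    return partitions * bins * blocks_per_second


# A ~3 s IR (the Infinite Brass example above) at 48 kHz with 256-sample blocks.
print(partition_count(3.0, 48000, 256))  # -> 563
```

Halving the IR to 1.5 s needs 282 partitions instead of 563, so the spectral work roughly halves. Multiply by the channel count and the number of convolution instances for a rough total.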

-

@aaronventure said in Is blending between impulse responses possible?:

> @Orvillain The CPU load scales (almost) linearly with IR length, so it all depends on that. Infinite Brass in Kontakt works with up to 3x convolution; the IRs of the biggest room are 3 seconds long or so (for an RT60 of around 1.5s).
>
> In late 2018 when it launched, new CPUs could easily take it all on at low buffer sizes. Today, CPUs are almost 3x as fast.

I guess I just need to try it then!

-

@Orvillain @aaronventure Good points.

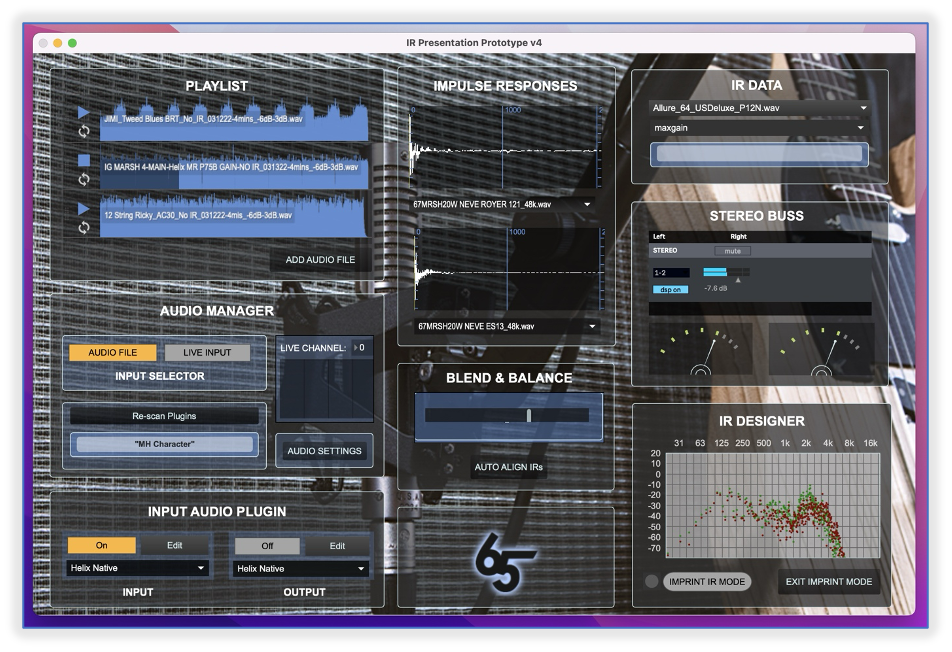

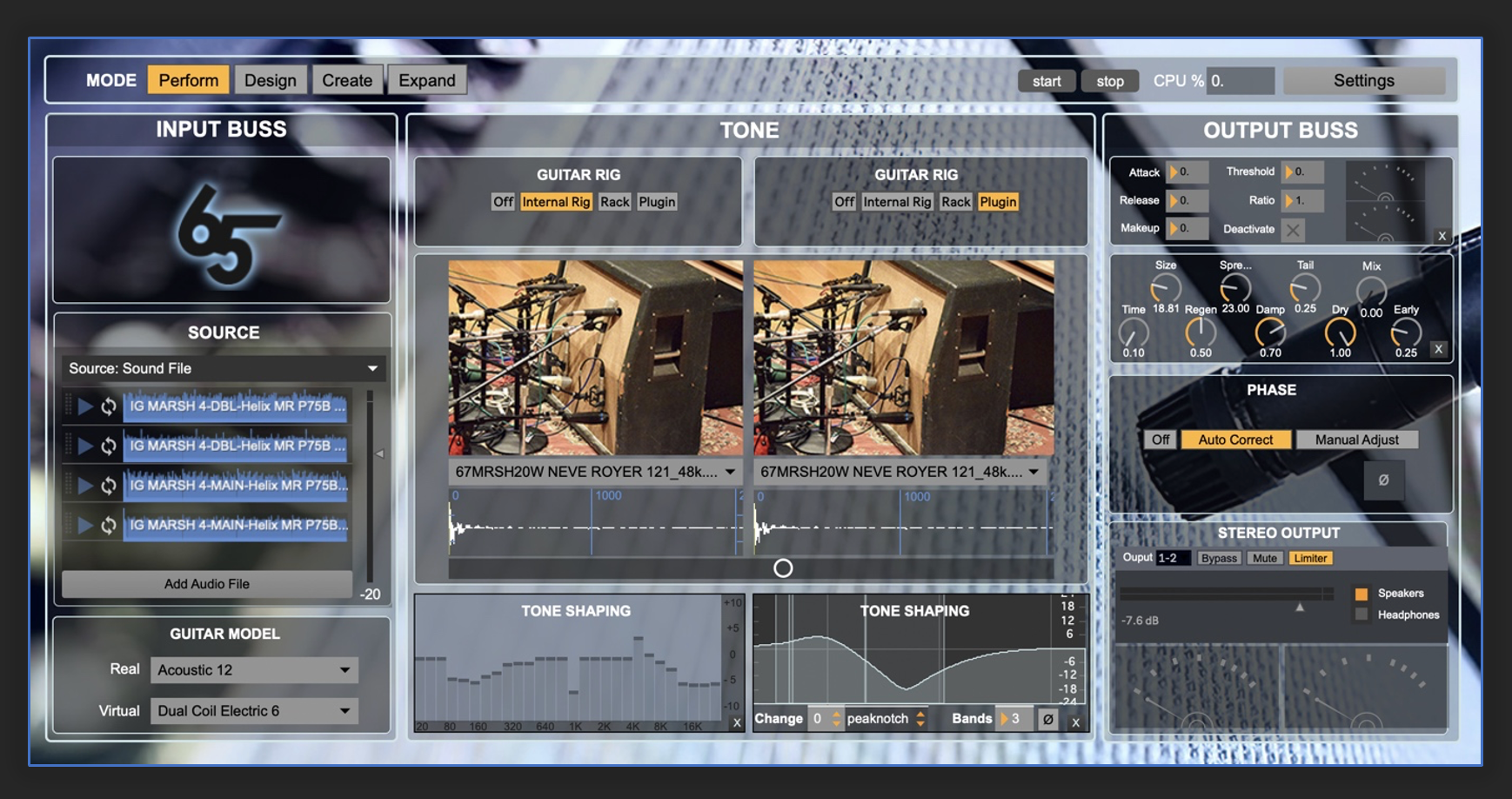

I've dug through the archives. This one:

- Phase-aligns two IRs in real time (guitar chains).

- Morphs in real time between those two IRs (guitar chains).

- Employs non-linear IRs (guitar modelling).

- Prints new (linear) IRs of the plugin's current state.
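Printing a linear IR of a processing chain's current state amounts to feeding a unit impulse through the chain and recording the output. The sketch below assumes the chain can be called as a plain function on a list of samples. `render_ir` and the `fir` stand-in are hypothetical and are not the poster's code. For a non-linear chain, the captured IR only reflects its behaviour at that one input level, which is presumably why the printed IRs are described as linear:

```python
def fir(signal, taps):
    """Stand-in linear stage: a causal FIR filter, same length as its input."""
    out = [0.0] * len(signal)
    for n in range(len(signal)):
        for k, tap in enumerate(taps):
            if n - k >= 0:
                out[n] += tap * signal[n - k]
    return out


def render_ir(process, length):
    """Capture `length` samples of `process`'s response to a unit impulse."""
    impulse = [1.0] + [0.0] * (length - 1)
    return process(impulse)[:length]


def chain(signal):
    """Two stages in series; the captured IR equals the convolution of their taps."""
    return fir(fir(signal, [1.0, 0.5]), [1.0, -0.5])


print(render_ir(chain, 4))  # -> [1.0, 0.0, -0.25, 0.0]
```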

As I recall, the phase alignment made a big difference. I can try to dig this one up some more if that's helpful, but it's not HISE. Here's an early build that shows the IR designer, and some other IR-related stuff.

This one began wrapping the low-level controls into meta-controls.

If you'd like any information on any of this, pls let me know.