Sample.set weirdness

-

@iamlamprey said in Sample.set weirdness:

It's probably just my HISE installation

Did you try what Christoph said and set the low note before you set the high note?

-

@d-healey yessir

@iamlamprey said in Sample.set weirdness:

Yeh I have the loop first then set the Root & HiKey manually and it seems to work fine now, just thought it was worth a mention.

-

@d-healey said in Sample.set weirdness:

Are you doing this from a single sample? Does it sound realistic?

Sorry not sure how I missed this, the current pipeline is:

- Record 15 RR's of a guitar sustain note

- Extract Residue w/ Loris, repitch the 15 RR's across the keyspan (sounds fine so far in my testing)

- Trim Resynthesized Samples (only need a small section = way less RAM)

- Dynamically build sampleMaps, set loop points to be exactly 1 cycle, based on resynthesized fundamental w/ no Fade Time

- Now the Sampler works like a Wavetable Synth, minus the WT Position which is basically a lowpass filter for guitars (ie Karplus-Strong method). The added benefit of the Sampler is that we get Round Robin

- Play the Residue (pick attack) sample back alongside the Tonal "Wavetable" & process further with things like the Scriptnode Palm Mute setup I posted a while back
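The loop-point step in the list above can be sketched roughly like this. It's plain JavaScript, not HISE API code: `midiToFrequency`, `loopPointsForNote` and the `loopStart` offset of 2000 samples are hypothetical, and it assumes equal temperament (MIDI note 69 = 440 Hz) and a 44.1 kHz sample rate. Whether the sampler treats the loop end as inclusive is something to check separately.

```javascript
// Hypothetical helpers for illustration; none of this is HISE API.
// Assumes equal temperament with MIDI note 69 tuned to 440 Hz.
function midiToFrequency(note) {
  return 440 * Math.pow(2, (note - 69) / 12);
}

// Returns loop points (in samples) spanning one cycle of the note's fundamental.
// loopStart is an arbitrary offset past the transient, chosen by the caller.
function loopPointsForNote(note, sampleRate, loopStart) {
  const cycle = sampleRate / midiToFrequency(note); // usually not a whole number
  return {
    loopStart: loopStart,
    loopEnd: loopStart + Math.round(cycle),
    exactCycle: cycle
  };
}

// One entry per key across an example range (MIDI notes 40 to 88).
const loopTable = [];
for (let note = 40; note <= 88; note++) {
  loopTable.push({ note: note, ...loopPointsForNote(note, 44100, 2000) });
}
```

Each entry in `loopTable` could then be fed to whatever code builds the sampleMap.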

It sounds pretty good so far, I'm confident I can get it sounding identical to the original Sample Library of the same guitar, but with 1/100th the file size, more parameters exposed to the end user to play with, and more realism (since I'm now able to 3x or more the RR's because the footprint is so tiny)

The key piece of the puzzle is the Loris library, since I get to be as lazy as I want and only record a single note and just have Loris handle the rest (although there are some tonal differences between strings)

I've also been using one of the Melda EQs to "model" the middle & neck pickups and applying that as a function, this can also be extended to capture other guitar bodies without needing to re-record any samples (you can also do it with AI but let's not unless we have to lol)

I'll do a full write-up when I'm happy with the sound and pipeline

-

@iamlamprey said in Sample.set weirdness:

It sounds pretty good so far, I'm confident I can get it sounding identical to the original Sample Library of the same guitar, but with 1/100th the file size, more parameters exposed to the end user to play with, and more realism (since I'm now able to 3x or more the RR's because the footprint is so tiny)

So the approach we use if we want to reduce the memory/disk footprint and to have RR-like response is:

Think about RRs: what are they actually doing, and what is the difference between them? The answer here is:

- Tuning - there are micro differences in tuning

- EQ - there are micro differences in eq too

- Timing - there are micro differences here as well.

Can you think of anything else that would be different if we compared RR recordings of the same note at the same velocity on the same instrument (be it drum, guitar, piano, whatever)?

I can't think of anything but the very vague "Timbre" - and this essentially breaks down into "EQ over time" - but noticeable timbre changes are (generally speaking) not something you would hear between two given RRs...

So.... I built an "RR Engine" that varies the three things above (tuning, EQ, timing), giving me infinite RRs, and end user control over them. I'm sure you can work out how to build your own RR Engine from this.
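A minimal sketch of that idea, in plain JavaScript rather than HISE script. Everything here is hypothetical: `makeVariation`, the property names and the example ranges are made up for illustration and aren't taken from the actual engine.

```javascript
// Hypothetical "fake round robin" sketch; ranges are illustrative, not measured.
// rng must return a number in [0, 1); it defaults to Math.random.
function makeVariation(ranges, rng = Math.random) {
  // Map rng() from [0, 1) to a bipolar value in [-1, 1).
  const bipolar = () => rng() * 2 - 1;
  return {
    detuneCents: bipolar() * ranges.detuneCents, // tuning
    eqGainDb: bipolar() * ranges.eqGainDb,       // EQ (e.g. gain of one band)
    delayMs: rng() * ranges.delayMs              // timing (only ever late, never early)
  };
}

// Convert a detune in cents to a playback-rate ratio.
function centsToRatio(cents) {
  return Math.pow(2, cents / 1200);
}

// A fresh variation would be generated on every note-on.
const variation = makeVariation({ detuneCents: 5, eqGainDb: 1.5, delayMs: 4 });
```

Exposing the three range values as controls is one way to give the end user a say in how much variation they get.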

-

@Lindon said in Sample.set weirdness:

Can you think of anything else that would be different if we compared RR recordings of the same note at the same velocity on the same instrument

The attack. I haven't been able to find a convincing way to generate fake RRs that have a unique attack.

We can shift the start position around and nudge the attack time but it's not convincing to me, it still sounds like the original sample.

I also use an infinite variation system similar to yours, but I can't get past the attack sounding off, so I still record a bunch of RRs too.

-

@d-healey said in Sample.set weirdness:

@Lindon said in Sample.set weirdness:

Can you think of anything else that would be different if we compared RR recordings of the same note at the same velocity on the same instrument

The attack. I haven't been able to find a convincing way to generate fake RRs that have a unique attack.

We can shift the start position around and nudge the attack time but it's not convincing to me, it still sounds like the original sample.

I also use an infinite variation system similar to yours, but I can't get past the attack sounding off, so I still record a bunch of RRs too.

Yes, sorry, forgot that: we vary the attack of the note too in the RR engine. Works OK here, but we are not usually doing "orchestral" instruments like you, so you may have more comprehensive needs.

-

I've implemented "fake RR" already, which includes pitch & amplitude randomization per voice (very easy to do with Modulators), but the most realistic approach is still a form of sampling. Let's look at a waveform:

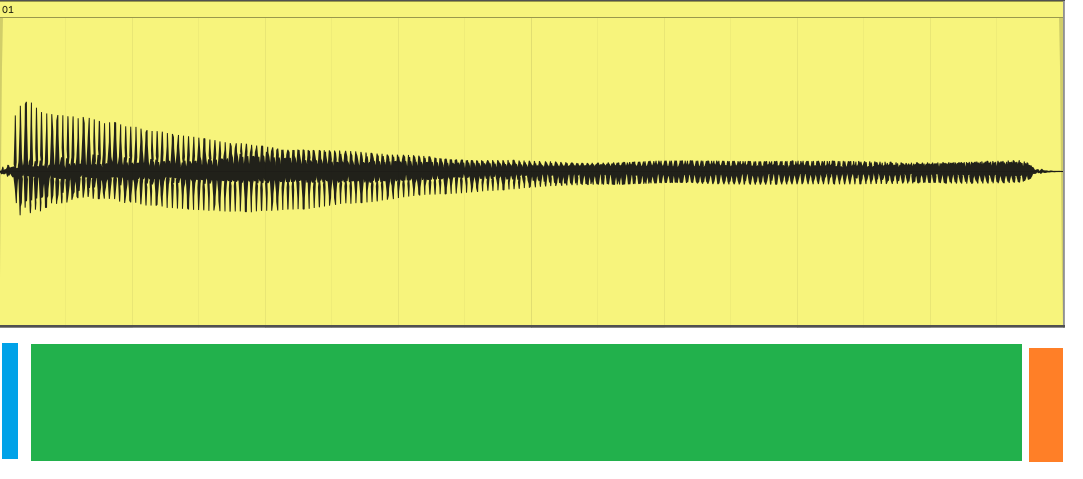

Where Blue is the transient, green is the body and orange is whatever release sounds the particular instrument might have

As David said, the attack/transient sample is difficult to fake, and so is the release sample. I just record these separately (pick attack and release noises for guitar).

The body, however, is basically a repeating cycle that decays over time (in gain or high end, usually both).

If we can grab a single cycle and loop it perfectly, the decay can be handled by HISE Modulators as well (Filter+AHDSR).

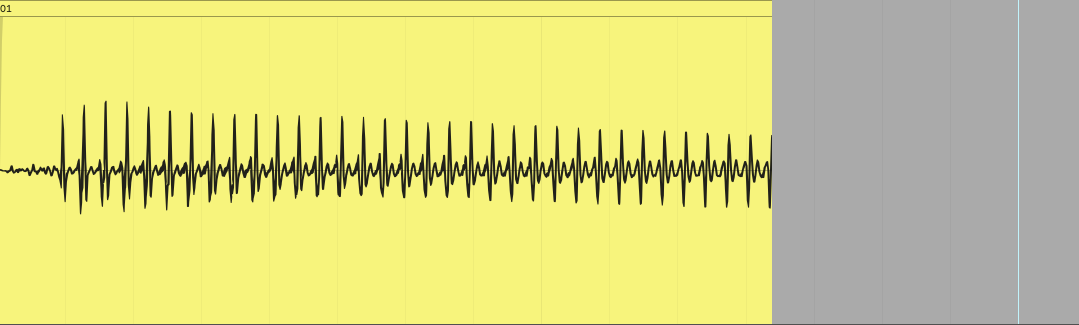

This is where Loris comes in: my backend lets me record a single pitch (15 RRs in this case) and resynthesize it across the key range. I can then truncate the samples since we don't need the full length. It looks something like:

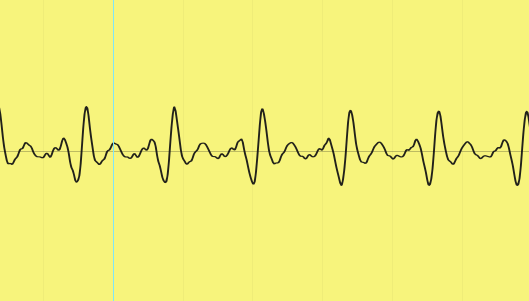

Loris can isolate the partials. That removes the transient, which is why I record transients separately (alternatively, you can phase-invert the partials to get the Residue). Because I resynthesized it with Loris, it SHOULD now have a predictable cycle length:

```javascript
const f0 = 440;        // A above middle C
const sr = 44100;      // sample rate
const cycle = sr / f0; // cycle length in samples; repeat for each pitch
```

We can then set an arbitrary loopStart point (anywhere past the initial transient) and use the cycle length to get the loopEnd. As long as we have no fadeTime we SHOULD have a perfectly looping sample (some of mine are buzzing, so there's probably something more I need to do, but it's not a huge deal). This can also be done programmatically to create the sampleMaps automatically.
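One thing worth quantifying: 44100 / 440 is about 100.227 samples, so a loop of whole samples can't be exactly one cycle long. The sketch below (plain JavaScript, hypothetical function names) estimates the resulting detune, and looks for a multi-cycle loop length that lands closer to a whole number of samples. Whether this has anything to do with the buzzing isn't established here; it's just a possible factor to rule out.

```javascript
// Pitch error (in cents) caused by rounding a loop to a whole number of samples.
// Positive means the loop plays sharp. Function names are hypothetical.
function loopPitchErrorCents(f0, sampleRate, cycles = 1) {
  const exactLength = (sampleRate / f0) * cycles;
  const loopLength = Math.round(exactLength);
  const loopedF0 = (sampleRate * cycles) / loopLength;
  return 1200 * Math.log2(loopedF0 / f0);
}

// Search for the cycle count (1..maxCycles) with the smallest rounding error.
function bestCycleCount(f0, sampleRate, maxCycles) {
  let best = 1;
  for (let c = 2; c <= maxCycles; c++) {
    if (Math.abs(loopPitchErrorCents(f0, sampleRate, c)) <
        Math.abs(loopPitchErrorCents(f0, sampleRate, best))) {
      best = c;
    }
  }
  return best;
}

const singleCycleError = loopPitchErrorCents(440, 44100); // just under 4 cents sharp
```

If the loop has to stay at a single cycle, the first function at least gives the detune that a per-sample tuning offset would need to cancel.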

Combining these "wavetables" with the recorded Transients results in a very convincing waveguide model. Now I just set up the Modulators to filter/attenuate the loop so it decays naturally, which involves measuring the decay times of the real samples and such.
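For the decay measurement, one possible approach is to fit a straight line to the level in dB over time and read off how long a 60 dB drop takes. This is only a sketch: `estimateT60` is a hypothetical helper, it assumes the decay is roughly exponential, and it expects an amplitude envelope (all values above zero) that has already been extracted from the real sample.

```javascript
// Estimate the time (in seconds) for a decaying envelope to drop by 60 dB,
// using a least-squares line through the level in dB over time.
// `envelope` holds positive amplitude values taken every `hopSeconds`.
function estimateT60(envelope, hopSeconds) {
  const n = envelope.length;
  let sumT = 0, sumL = 0, sumTT = 0, sumTL = 0;
  for (let i = 0; i < n; i++) {
    const t = i * hopSeconds;
    const levelDb = 20 * Math.log10(envelope[i]);
    sumT += t;
    sumL += levelDb;
    sumTT += t * t;
    sumTL += t * levelDb;
  }
  // Slope of the fitted line, in dB per second (negative for a decay).
  const slope = (n * sumTL - sumT * sumL) / (n * sumTT - sumT * sumT);
  return -60 / slope;
}
```

The result could then be mapped onto the AHDSR decay time and the filter envelope, with separate measurements per string or register if they differ.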

Because we've truncated the samples, we're using significantly less RAM AND we get all of the usual sampler benefits like Round-Robin and lower CPU usage than the Wavetable Synth.

Another added benefit is that I can process the transients and body separately, so if some filtering is needed I don't have to ruin the transients.

tl;dr: use truncated, resynthesized versions of the original round-robins and loop them with a single cycle to emulate waveguide synthesis

-

@iamlamprey yes very impressive.

-

@iamlamprey So in theory you should be able to separate the attack transient into its own sampler and leave the sustain and release in theirs, and then do that waveguide thing of combining one voice's attack with another's sustain/release, e.g. trumpet attack with piano sustain and release. You could even reasonably cross-fade the sustain parts between different sounds, e.g. trumpet attack with piano sustain going into violin sustain.
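For the cross-fade between sustain parts, a common choice is an equal-power curve. The sketch below is plain JavaScript with a hypothetical `equalPowerGains` helper; the curve is an assumption, not something taken from an existing setup.

```javascript
// Equal-power crossfade gains for a position x in [0, 1]:
// x = 0 is all of sound A, x = 1 is all of sound B.
// The squared gains always sum to 1, so perceived level stays roughly constant
// when the two sustains are uncorrelated.
function equalPowerGains(x) {
  const clamped = Math.min(1, Math.max(0, x));
  return {
    a: Math.cos(clamped * Math.PI / 2),
    b: Math.sin(clamped * Math.PI / 2)
  };
}
```

For example, sweeping `x` from 0 to 1 over the length of the note would take a piano sustain gradually into a violin sustain.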

-

@Lindon Yeh, my attack & release samples are in their own samplers with their own processing. I'm avoiding synthGroups because I need individual midiProcessors.

Mind you, I haven't tried this technique for anything except picked guitars/bass. It SHOULD translate to percussion (glocks, marimbas, things like djembes, etc.) and maybe piano, but I'm not sure how well it would work for brass/strings without a more complex multi-dimensional setup.