Realtime Microphone-Moving ScriptNode/Effect Network for Y'all [Expanded!]

-

Thank you to @Dominik-Mayer for increasing my quotas, so I can post better screen capture movies.

What It Does

Simulates a desirable audio engineering process that is impossible in physical reality: moving a microphone to change the timbre of an instrument, without incurring ambience changes, and with direct control over phase.

User Experience

As the distance increases, amplitude is attenuated, and high-frequency response is reduced.

The specific attenuation and frequency response changes are based on the specific characteristics of the microphone. This process is automatic, and does not require tagging by the user. A boolean operation locks the signal phase, so as the microphone is repositioned, the signal phase does not change.

Realtime

Every parameter can be controlled by you (or the user) in realtime, including assignment to MIDI CC for automation. This includes the Blocksize. Note: You may want to adjust the Smoothing on the Gain Node.

Limitation

Proximity effects and angle are not factored in; this would be a different Effect, positioned in series before the repositioning Effect.

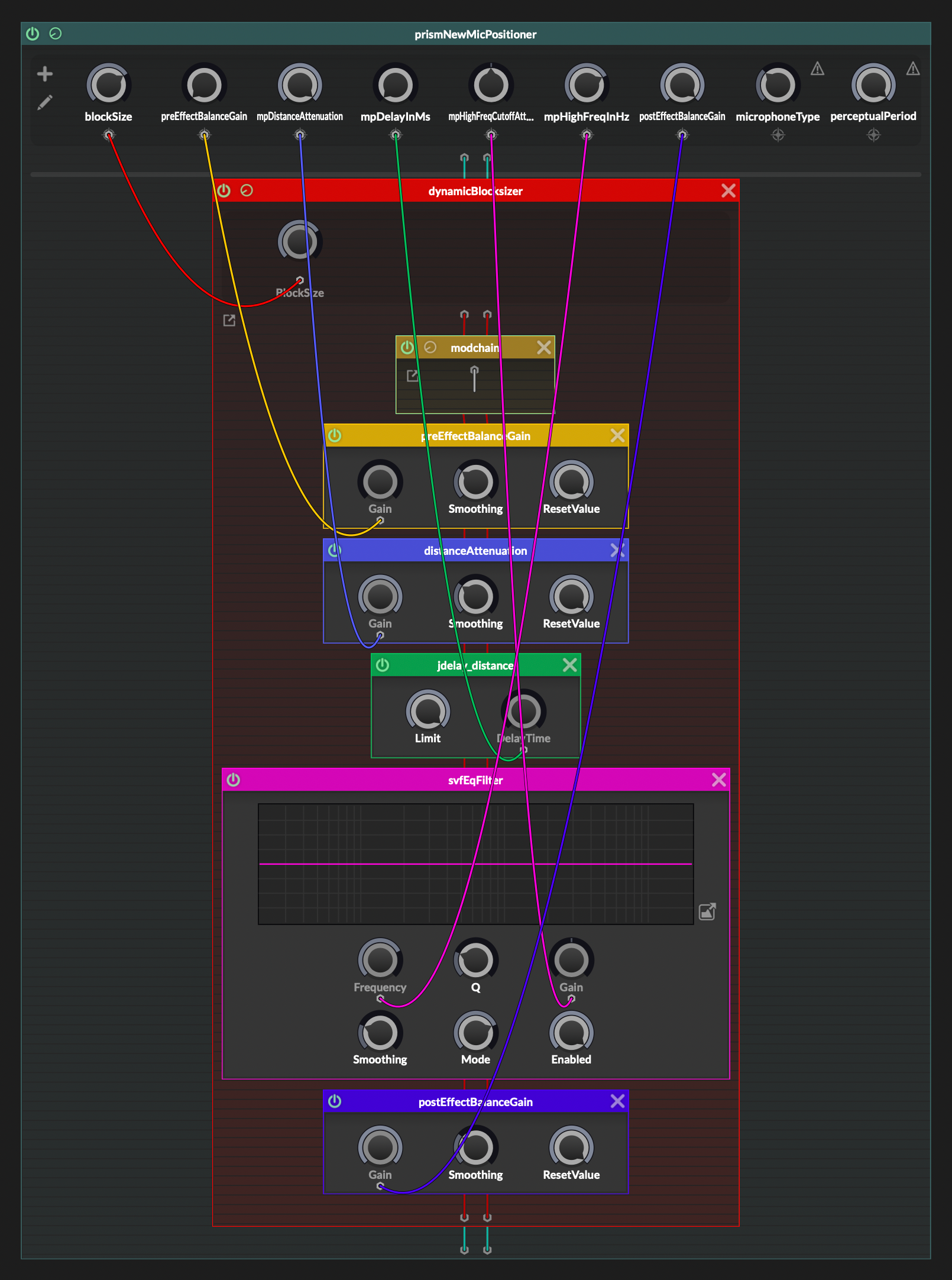

Visual of Starting-Point

Discussion

Microphone repositioning is part of PRISM's suite of processes allowing mix engineers to go "back in time" and alter original recording conditions.

Why this is Useful

As a rule of thumb, it is desirable to maximise the printing of sonic parameters during the recording process. This enables control over specific aspects of the final mix, including parameters (generally) impossible to control after recording has occurred. It also provides predictability over the final mix, regardless of when and by whom this occurs, and reduces the amount of work required to get a "good" sound during the mixing process.

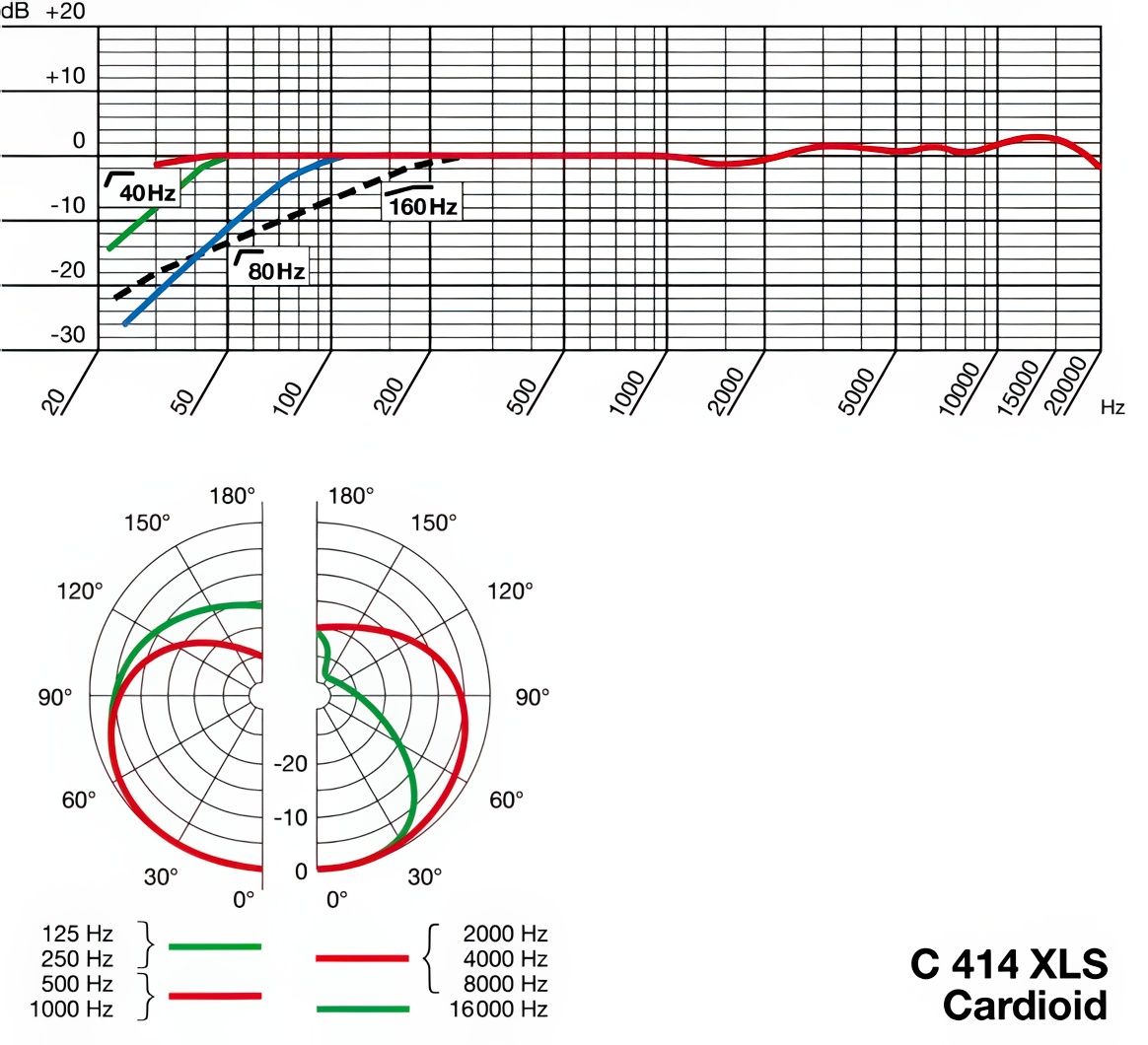

A key aspect of microphone design is the control over how timbre (as a function of phase and frequency response) changes as a microphone moves. In effective microphone design, these changes are specifically designed into the microphone. It's part of the difference between a $200 condenser microphone and a $2000 one: the latter sounds good regardless of source proximity, its changes are predictable in a musically useful way, and it is consistent between factory-produced units. (In other words, the recording engineer can "play" the microphone.)

Example

Tom Syrowski uses the positioning of multiple microphones, on guitar amps, to create Grammy-award winning guitar sounds for bands such as Mastodon and Incubus.

What Changes

There are generally three desirable parameters associated with moving microphones:

- Systemic frequency (timbre) changes without the limitations incurred by the use of EQ processing. (Phase changes, but this is perceptually uniform across all frequencies.)

- Precise control over phase as a timbre-shaping process.

- Precise control over the delay before sonic energy onset, which is a key component of distance-miking with multiple microphones.

But these also change as a result, which is not desirable:

- Ambience changes: Great care is often taken in setting up a recording space, but every aspect of these parameters changes as a microphone is moved. The PRISM Microphone Movement algorithm enables microphones to be moved while preserving the exact sonic characteristics of acoustic room treatment.

- Phase: It changes whether you want it to or not. In reality, there is a quantum-like effect where you cannot change the phase without changing the frequency response (as a function of proximity), and vice-versa. PRISM enables these variables to be altered independently.

How it Works

All professional microphones have precise specifications published that describe the changes in amplitude and frequency response as a function of distance. The speed of sound is (functionally) constant in a given recording space. (In practice, equipment warms the room, and the rising air temperature slightly changes the speed of sound.)
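To make the speed-of-sound point concrete, here is a minimal sketch in plain JavaScript (not HISEscript) that converts a distance into a propagation delay. The function names are hypothetical, and the linear temperature formula is a common textbook approximation for dry air, not something taken from PRISM or HISE.

```javascript
// Hypothetical helpers, for illustration only.

// Approximate speed of sound in dry air (metres per second) for a
// temperature in degrees Celsius. Linear approximation that is
// reasonable around room temperature.
function speedOfSoundMps(tempCelsius) {
    return 331.3 + 0.606 * tempCelsius;
}

// Time in milliseconds for sound to travel distanceCm centimetres
// at the given room temperature.
function delayMsForDistance(distanceCm, tempCelsius) {
    var metres = distanceCm / 100.0;
    return (metres / speedOfSoundMps(tempCelsius)) * 1000.0;
}

// Example: delay for one metre at 20 degrees versus 30 degrees.
var delayAt20 = delayMsForDistance(100, 20);
var delayAt30 = delayMsForDistance(100, 30);
```

A ten-degree rise shortens the one-metre delay by only a few hundredths of a millisecond, which is why treating the speed of sound as constant is usually good enough here.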

What About the Room?

Don't you need to know all the characteristics of the recording room to simulate moving the microphone? Fortunately, no: you already have a recording of the source through the microphone in question. And remember, the goal here is to move the microphone without changing the ambience. Likewise, the goal is to move the microphone without changing the absorption of frequencies (because that's already been optimised for the recording you're starting from).

Limitations

PRISM audio plugins are playback engines for pre-encoded data. Very little actual processing occurs within the plugin. For example, a PRISM algorithm automatically determines the model of microphone used on a given audio track, and matches it to a pre-existing database of manufacturer-supplied specifications.

My HISE plugins read this encoding data when performing their processing. Additionally, my HISE plugins are proof-of-concept prototypes, and often “cut corners” that are not important to specific aspects of a specific development roadmap.

In this code example, I’ve added all of the information for you to implement this Effect without PRISM encoding, except for Phase Preservation. (And for that parameter, I have provided guidelines for how to implement it yourself.)

Expectations

All of the preceding information may build up this network to be far more sophisticated than it appears. However, the discussion above is intended as the other parts of this Effect’s “Code”, as in the information required for a precise implementation that accurately reflects the Effect’s intended goals.

The Microphone Type and Perceptual Period parameters, as well as the (empty) modchain Node, are placeholders if you wish to implement them inside the Network.

Also, please note that the PRISM algorithm is currently being tuned, so the processing is in flux.

Example Microphone Data: AKG 414

Below: Some of the data published by AKG for the 414. Use it to create your microphone configuration files, or more general profiles for microphone types.

The Network

<?xml version="1.0" encoding="UTF-8"?>
<Processor Type="ScriptFX" ID="BussNetworkEditor" Bypassed="0" Script=" function prepareToPlay(sampleRate, blockSize) { 	 } function processBlock(channels) { 	 } function onControl(number, value) { 	 } ">
  <EditorStates BodyShown="1" Visible="1" Solo="0"/>
  <ChildProcessors/>
  <RoutingMatrix NumSourceChannels="2" Channel0="0" Send0="-1" Channel1="1" Send1="-1"/>
  <Content/>
  <Networks>
    <Network ID="prismNewMicPositioner" AllowPolyphonic="1" Version="0.0.0" AllowCompilation="1"/>
  </Networks>
  <UIData>
    <ContentProperties DeviceType="Desktop"/>
  </UIData>
</Processor>

Parameters

Most of the processing in this example is performed outside the Network. I provided sample code, and it should be trivial to implement within the ScriptNode network. If anyone does this, I encourage them to post their Network for the community. I’ve added notes for performing these calculations in-node. While it is relatively trivial to perform all of these calculations with a high degree of accuracy, within the context of a commercial audio plugin, users will likely not notice this. Instead, I suggest tweaking your parameters so the results feel like they “ought to”.

Preprocessing Gain

An optional parameter to prevent clipping within the Network. In this example, it is assumed the microphone is at the point-source. However, you could add this as a parameter, and if so, as the microphone is moved closer to the source, clipping could occur.

Distance Attenuation in dB

The decrease (or increase) in amplitude as a function of distance. For a HISE-only implementation, this parameter would be better-expressed as the change in distance. This would require a (fixed) starting distance, and a variable change in distance parameter.
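One way to express attenuation as a change in distance from a fixed starting distance is the free-field inverse-distance law (roughly 6 dB per doubling of distance). This is a sketch in plain JavaScript under that assumption; it is not the linear dB-per-centimetre mapping used in the code later in this post, and the function name and the 30 cm starting distance are hypothetical.

```javascript
// Hypothetical helper, for illustration only.
// Gain change in dB when a microphone that started startDistanceCm from
// a point source is moved by changeCm (positive = further away).
// Assumes free-field, point-source behaviour.
function distanceAttenuationDb(startDistanceCm, changeCm) {
    var newDistanceCm = startDistanceCm + changeCm;

    // Guard against zero or negative distances, where the law is undefined.
    if (startDistanceCm <= 0 || newDistanceCm <= 0)
        return 0.0;

    return -20.0 * Math.log10(newDistanceCm / startDistanceCm);
}

// Example: starting 30 cm away, move 30 cm back (doubling the distance),
// then 15 cm closer (halving it).
var movedBackDb = distanceAttenuationDb(30, 30);
var movedCloserDb = distanceAttenuationDb(30, -15);
```

Moving closer yields a positive value, which is where the clipping concern mentioned under Preprocessing Gain comes from.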

Delay in Milliseconds

In a HISE-only implementation, this would not be an input parameter; it would be calculated based on the change in distance.

High Frequency Cutoff

The point at which frequency-filtering occurs. Changes in frequency as a function of distance are linear. The algorithm presented here oversimplifies these changes, but they are not complex to implement if accuracy is desired.

High Frequency Attenuation

As named.

Postprocessing Gain

Another gain stage to prevent possible clipping.

Microphone Type

For an in-HISE implementation, I suggest allowing the user to tag the microphone type (as dynamic or condenser), and using a constant here to identify the choice internally. As PRISM does this in the encoding file, this input parameter is not used here, but it is included as a suggested starting point.

Dynamic Blocksize

Enables you to change this during runtime, including for inclusion in default presets. (I am not overly familiar with blocksize as a HISE Network parameter.)

Perceptual Period

This is the “useful” period of the signal envelope. It is the only parameter that I’m not providing working values for. But here is one way for you to do it:

- Identify a fundamental frequency for the signal. There are a plethora of published algorithms for doing this.

- As the user moves the “Distance” user interface slider, ensure that the distance changes in increments of the fundamental’s period.
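The two steps above can be sketched as follows, in plain JavaScript. It assumes the fundamental frequency has already been detected by some other algorithm, and that the speed of sound is passed in; the function names are hypothetical. Moving the microphone by one wavelength of the fundamental delays the signal by exactly one period, so the fundamental's phase is unchanged.

```javascript
// Hypothetical helpers, for illustration only.

// Distance (in cm) that sound travels during one period of the fundamental.
function wavelengthCm(fundamentalHz, speedMps) {
    return (speedMps / fundamentalHz) * 100.0;
}

// Snap a requested distance to the nearest whole number of wavelengths,
// so that the added delay is a whole number of periods.
function snapDistanceToPeriod(distanceCm, fundamentalHz, speedMps) {
    // Without a usable fundamental, leave the distance untouched.
    if (fundamentalHz <= 0)
        return distanceCm;

    var stepCm = wavelengthCm(fundamentalHz, speedMps);
    return Math.round(distanceCm / stepCm) * stepCm;
}

// Example: a 110 Hz fundamental with sound travelling at 343 m/s.
var stepFor110Hz = wavelengthCm(110, 343);
var snapped = snapDistanceToPeriod(500, 110, 343);
```

For low fundamentals the step is large (over three metres at 110 Hz), so a real interface would probably need a finer compromise, such as snapping to fractions of the period.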

HISEscript Code Examples for Parameters

Note that this code incorporates my own (crude) Broadcaster system. I suggest using HISE’s!

Sample JSON Data

{
  "micMovement": {
    "room": { "sizeInCm": 3000.0 },
    "micResponse": {
      "dynamic": { "amplitudePadEndinDb": -5.0, "highFreqPadEndinDb": -9.2, "fixedHighFreqInHz": 5309.4 },
      "condenser": { "amplitudePadEndinDb": -3.0, "highFreqPadEndinDb": -4.0, "fixedHighFreqInHz": 2978.4 }
    }
  }
}

HISEscript Code

//////////////////////////////////////////////////////////////////////////////////////////
//
//                TIME MACHINE - MICROPHONE RE-POSITIONER [UI THREAD]
//
//////////////////////////////////////////////////////////////////////////////////////////

//////////////////////////////////////////////////////////////////////////////////////////
//////////////////// SECTION ACTIVATION BUTTON CALLBACK FUNCTION /////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////

// Register and define the control callback.
button_TM_MOVEMIC_SystemToggle_GUI.setControlCallback(on_TM_ToggleRePositionBypass_Control);

inline function on_TM_ToggleRePositionBypass_Control(component, value)
{
    if (value)
        TRANSFORM_Time_SUBMOD_MicMover_PerformOp("user_poweron");
    else
        TRANSFORM_Time_SUBMOD_MicMover_PerformOp("user_poweroff");
};

//////////////////////////////////////////////////////////////////////////////////////////
//////////////////////////////// DISTANCE KNOB CALLBACK //////////////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////

// Register and define the control callback for the distance knob.
// NumOfIRsForPerformance()
knob_TM_MICPOS_AdjustDistance_GUI.setControlCallback(on_TM_AdjustDistance_Control);

inline function on_TM_AdjustDistance_Control(component, value)
{
    // Value is distance in centimetres. Everything defaults to zero (no movement).
    local mpDelayTime = 0;
    local mpGain = 0;
    local mpHighAttenuation = 0;

    if (value)
    {
        mpDelayTime = MIC_MOVER.MS_PER_METER * (value / 100);
        mpGain = value * g_micMovement.factors.mpDistanceAttenuation;
        mpHighAttenuation = value * g_micMovement.factors.mpHighFreqCutoffAttenuation;
    }

    // Set the node values.
    fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpHighFreqCutoffAttenuation, mpHighAttenuation);
    fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpDistanceAttenuation, mpGain);
    fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpDelayTime, mpDelayTime);
};

//////////////////////////////////////////////////////////////////////////////////////////
////////////////////////////////// PHASE LOCK BUTTON /////////////////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////

// Register and define the button's control callback.
button_TM_MICPOS_PhaseLock_GUI.setControlCallback(on_TM_TogglePhaseLock_Control);

inline function on_TM_TogglePhaseLock_Control(component, value)
{
    if (value)
    {
        // If a period is defined, show it.
        if (isDefined(g_instrumentData.general.period))
        {
            // Construct the value text.
            // Note: this assumes period / SAMPLE_RATE yields milliseconds. If the period is
            // in samples and SAMPLE_RATE is in Hz, multiply the result by 1000.
            local periodTimeInMs = g_instrumentData.general.period / SAMPLE_RATE;
            local displayTime = Engine.doubleToString(periodTimeInMs, 4);

            // Set the text.
            label_TM_MICPOS_PhaseLock_GUI.set("text", "Period: " + displayTime + " ms");

            // Fade it in.
            label_TM_MICPOS_PhaseLock_GUI.fadeComponent(BUTTON_VISIBILITY_FADE_TIME, true);
        }
    }
    else
    {
        // If a period is visible, hide it.
        // (The original checked general.cycle here; general.period matches the branch above.)
        if (isDefined(g_instrumentData.general.period))
            if (label_TM_MICPOS_PhaseLock_GUI.get("visible"))
                label_TM_MICPOS_PhaseLock_GUI.fadeComponent(BUTTON_VISIBILITY_FADE_TIME, false);
    }
};

//////////////////////////////////////////////////////////////////////////////////////////
/////////////////////////// STANDARD LIBRARY: CORE FUNCTIONS /////////////////////////////
//////////////////////////////////////////////////////////////////////////////////////////

inline function TRANSFORM_Time_SUBMOD_MicMover_PerformOp(moduleState)
{
    local workingState = null;

    // "init" and "ready" share the "start" path; everything else passes through.
    switch (moduleState)
    {
        case "init":
            workingState = "start";
            break;

        case "ready":
            workingState = "start";
            break;

        default:
            workingState = moduleState;
            break;
    }

    switch (workingState)
    {
        case "start":

            // Reset the knob.
            knob_TM_MICPOS_AdjustDistance_GUI.setValue(0);

            // Reset the suffix.
            knob_TM_MICPOS_AdjustDistance_GUI.set("suffix", " cm");

            // Clear processor parameters.
            fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpHighFreqCutoffAttenuation, 0.0);
            fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpDistanceAttenuation, 0.0);
            fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpDelayTime, 0.0);
            fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.preEffectBalanceGain, 0.0);

            // Set the frequency - for now at least, we're not changing that.
            if (moduleState == "init")
            {
                // Disable the activation button, and reset High Frequency (in Hz) parameter.
                button_TM_MOVEMIC_SystemToggle_GUI.set("enabled", false);
                fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpHighFreqInHz, 20000);
            }
            else // "ready"
            {
                // We'll take the first mic as the type for both mics.
                local micType = g_instrumentData.recording.mics.array[0].type;

                // Compute the amplitude pad factor (dB per centimetre).
                local amplitudePadEndinDb = g_songData.micMovement.micResponse[micType].amplitudePadEndinDb;
                g_micMovement.factors.mpDistanceAttenuation = amplitudePadEndinDb / g_songData.micMovement.room.sizeInCm;

                // Compute high-frequency cutoff factor (dB per centimetre).
                local highFreqPadEndinDb = g_songData.micMovement.micResponse[micType].highFreqPadEndinDb;
                g_micMovement.factors.mpHighFreqCutoffAttenuation = highFreqPadEndinDb / g_songData.micMovement.room.sizeInCm;

                // Assign the fixed high-frequency constant. This amount only changes with the SampleMap.
                local fixedHighFreqInHz = g_songData.micMovement.micResponse[micType].fixedHighFreqInHz;
                g_micMovement.constants.mpHighFreqInHz = fixedHighFreqInHz;
                fx_TM_NODE_MicPositioner.setAttribute(fx_TM_NODE_MicPositioner.mpHighFreqInHz, fixedHighFreqInHz);

                // Enable the system activation button.
                button_TM_MOVEMIC_SystemToggle_GUI.set("enabled", true);
            }

            // Disable the processor.
            UTILITY_SetEffectActivationState(fx_TM_NODE_MicPositioner, false);

            // Set the system activation button to false.
            button_TM_MOVEMIC_SystemToggle_GUI.setValue(false);

            // Disable the distance knob.
            knob_TM_MICPOS_AdjustDistance_GUI.set("enabled", false);

            // Reset and disable the phase lock button.
            button_TM_MICPOS_PhaseLock_GUI.setValue(false);
            button_TM_MICPOS_PhaseLock_GUI.set("enabled", false);

            // Hide the period display.
            label_TM_MICPOS_PhaseLock_GUI.set("visible", false);

            break;

        case "user_poweroff":

            // Do the knobs and buttons.
            knob_TM_MICPOS_AdjustDistance_GUI.set("enabled", false);
            button_TM_MICPOS_PhaseLock_GUI.set("enabled", false);

            // Disable the processor.
            UTILITY_SetEffectActivationState(fx_TM_NODE_MicPositioner, false);

            break;

        case "user_poweron":

            // Do this stuff if the Section is activated.
            if (button_TM_MOVEMIC_SystemToggle_GUI.getValue())
            {
                knob_TM_MICPOS_AdjustDistance_GUI.set("enabled", true);
                button_TM_MICPOS_PhaseLock_GUI.set("enabled", true);
                UTILITY_SetEffectActivationState(fx_TM_NODE_MicPositioner, true);
            }

            break;
    }
};
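As a worked check of the arithmetic in the "ready" branch and the distance knob callback, here is a small plain-JavaScript sketch using the sample JSON values from this post. The helper names (`computeFactors`, `valuesAtDistance`) are hypothetical and exist only for this illustration; the field names come from the sample data.

```javascript
// Sample data copied from the JSON in this post.
var sampleData = {
    micMovement: {
        room: { sizeInCm: 3000.0 },
        micResponse: {
            dynamic: { amplitudePadEndinDb: -5.0, highFreqPadEndinDb: -9.2, fixedHighFreqInHz: 5309.4 },
            condenser: { amplitudePadEndinDb: -3.0, highFreqPadEndinDb: -4.0, fixedHighFreqInHz: 2978.4 }
        }
    }
};

// Hypothetical helper: per-centimetre factors, i.e. the end-of-room pad
// spread linearly across the room size.
function computeFactors(data, micType) {
    var roomSizeInCm = data.micMovement.room.sizeInCm;
    var mic = data.micMovement.micResponse[micType];

    return {
        distanceDbPerCm: mic.amplitudePadEndinDb / roomSizeInCm,
        highFreqDbPerCm: mic.highFreqPadEndinDb / roomSizeInCm,
        highFreqInHz: mic.fixedHighFreqInHz
    };
}

// Hypothetical helper: values the knob callback would send at a given distance.
function valuesAtDistance(factors, distanceCm) {
    return {
        gainDb: distanceCm * factors.distanceDbPerCm,
        highFreqAttenuationDb: distanceCm * factors.highFreqDbPerCm
    };
}

// Example: a dynamic microphone moved to the middle of the 30 m room.
var dynamicFactors = computeFactors(sampleData, "dynamic");
var halfway = valuesAtDistance(dynamicFactors, 1500);
```

Halfway across the room, the dynamic profile gives half of each end-of-room pad: about -2.5 dB of gain and about -4.6 dB of high-frequency attenuation.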

For Commercial Plugin Developers

Remember that it's not important whether your implementation is accurate, or even close to accurate. This significantly eases the DSP implementation, but also shifts the locus of user interaction to less trivial domains—because most users have not encountered this Effect before. This can make your implementation more challenging—or, you can leverage their unfamiliarity to your advantage.

- The GUI should respond as expected. Don't get bogged down in things like drawing a room with a microphone that moves around—that introduces problems such as the room's shape, vertical position of the microphone, and so on.

- The changes in audio should respond as expected. Your audience isn't likely to be engineers that actually know how to use microphones (the process this Effect simulates). Even if your users are recording engineers, the vast majority have never done this. Focus, instead, on making the sound change audibly throughout the knob's travel, as a cognitive function of how the visual interface is presented. If you're not sure what users expect, send out some betas, and ask if it meets their expectations. (Don't tell them you're not focussing on accuracy, obviously.)

- The changes in audio should be useful. Often, the changes manifested by microphone positioning are subtle, especially with dynamic microphones. But if your Effect is subtle, users may complain that the feature either isn't useful, or simply doesn't work well. It may be sagacious for you to exaggerate the Effect of the plugin.

- In keeping with the other points, don't get bogged down in microphone specifications. If you want to establish a baseline accuracy for that, give your plugin to a couple of recording engineers who move microphones around, and adjust the parameters until they say, "This is useful".

- I don't have a microphone-type tag in my interface; that can simply be a two-option combobox. Used effectively, user-tagging can unconsciously guide user expectations so that your plugin "works great". But what does that mean? It means only that there is high correlation between expectation and result. By controlling the expectation via your GUI, you can indirectly control the user's cognitive response.

- Ensure the audio doesn't stutter while the distance is changed. Two possible tweaks here are adjusting the (dynamic) blocksize, and the Smoothing parameter in the gain Nodes. I imagine it's possible to construct an algorithm that detects these problems (either algorithmically, or via machine learning), and have the Network adjust itself automatically to compensate.

- In your own testing, try using this Effect as part of your mixing process; you'll be able to refine parameters with that process.
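On the stutter point in the list above: a common general-purpose way to avoid abrupt jumps when a knob moves is a one-pole parameter smoother. The sketch below is plain JavaScript and is not a description of how HISE's gain Node implements its Smoothing parameter; `makeSmoother` and its arguments are hypothetical names for this illustration.

```javascript
// Hypothetical helper, for illustration only.
// Creates a one-pole smoother whose output moves towards the target with
// a time constant of smoothingMs milliseconds at the given sample rate.
function makeSmoother(smoothingMs, sampleRate, initialValue) {
    // Per-sample decay coefficient for the requested time constant.
    var coeff = Math.exp(-1.0 / (0.001 * smoothingMs * sampleRate));
    var current = initialValue;

    return {
        // Advance one sample towards the target and return the new value.
        next: function (target) {
            current = target + coeff * (current - target);
            return current;
        }
    };
}

// Example: the gain target jumps from 0 to 1; run the smoother for exactly
// one time constant (10 ms at 44.1 kHz = 441 samples).
var smoother = makeSmoother(10, 44100, 0.0);
var firstSample = smoother.next(1.0);
var smoothed = firstSample;
for (var i = 1; i < 441; i++)
    smoothed = smoother.next(1.0);
```

After one time constant the value has covered roughly 63% of the jump. Longer smoothing hides more zipper noise but makes the knob feel slower, so this is worth tuning by ear alongside the blocksize.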

Questions?

Ask away…

-

That is quite a thing! Thanks

-

@griffinboy I've got dozens of these things, if people are interested.

-

@clevername27 This might be a good subject for a show and tell type meet, you could walk us through your collection.

-

@d-healey I'd be happy to.

-

@clevername27 Nice! Thank you, I'm interested to hear how that sounds.