Communicate with an External Audio File slot?

-

How do we get a reference to the external audio file slot used here?

I want to be able to pull data from it, such as the number of samples in the audio file, and possibly also take direct control of other metadata, such as the start and end range of the sample. I'm in the process of writing my sampler and I'm wondering how best to interface my audio data with the main UI in HISE.

I'm new to external data, and I have trouble understanding the docs.

I assume I need something to do with AudioFile? https://docs.hise.dev/scripting/scripting-api/audiofile/index.html#getcontent
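Whichever call ends up exposing the slot, the start/end range is typically expressed in samples, while a UI range slider usually works in 0..1. Here is a minimal sketch of that conversion, modelled in TypeScript because HiseScript only runs inside HISE. The helper name is hypothetical, and passing the result on to the AudioFile object's range setter is an assumption to check against the AudioFile docs:

```typescript
// Hypothetical helper: maps a normalized UI range (0..1) onto sample
// indices for a file that is `numSamples` long.
function normalizedRangeToSamples(
  startNorm: number,
  endNorm: number,
  numSamples: number
): [number, number] {
  // Keep both ends inside 0..1 so the result stays inside the file.
  const clamp01 = (x: number): number => Math.min(1, Math.max(0, x));
  let start = Math.round(clamp01(startNorm) * numSamples);
  let end = Math.round(clamp01(endNorm) * numSamples);
  // Swap if the UI delivered the ends in the wrong order.
  if (end < start) {
    const tmp = start;
    start = end;
    end = tmp;
  }
  return [start, end];
}

// Usage: a 48000-sample file, playing the middle half.
const [rangeStart, rangeEnd] = normalizedRangeToSamples(0.25, 0.75, 48000);
console.log(rangeStart, rangeEnd);
```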

-

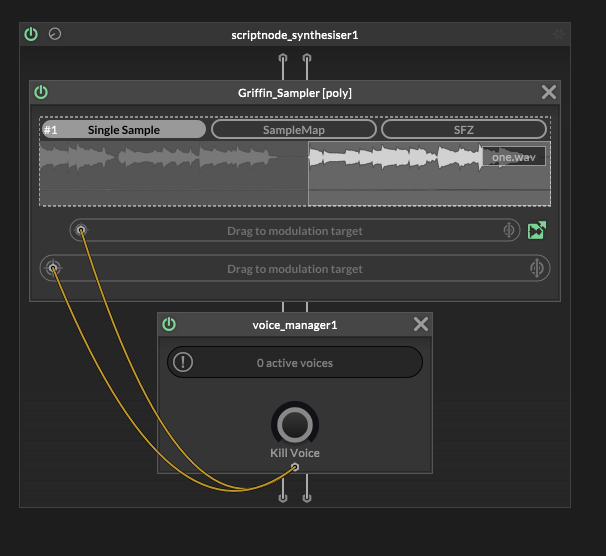

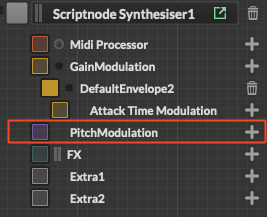

Something I've also realised is that I'm not sure how to properly interface with the rest of the Scriptnode Synth functionality. For example, I've been wanting to do pitch modulation per voice, but I've realised that communicating this data to the node will require sending detailed info from HISE to the node about what I need each voice to do.

Is there a method to get per voice modulation data into a node?

I may have gotten myself into a complex spot with this one!

-

@griffinboy said in How to reference an External Audio File slot:

How do we get a reference to the external audio file slot used here?

This is for getting the peak node buffer. I imagine it's NumChannels: 2 for the one you're showing.

```javascript
// to be the External Display Buffer #1)
const var outputBuffer = Synth.getDisplayBufferSource("ScriptFX1").getDisplayBuffer(0);

// This will override the default properties of the node.
// You can use the same procedure to customize the FFT properties too (like Window type, etc).
// NumChannels must be 1, but you can set another buffer length for the display
outputBuffer.setRingBufferProperties(
{
    "BufferLength": 16384,
    "NumChannels": 1
});
```

@griffinboy said in How to reference an External Audio File slot:

Something I've also realised is that I'm not sure how to properly interface with the rest of the Scriptnode Synth functionality. For example, I've been wanting to do pitch modulation per voice, but I've realised that communicating this data to the node will require sending detailed info from HISE to the node about what I need each voice to do.

You can use the PitchModulation slots in the ScriptnodeSynth processor.

Voice Start and Envelope modulators are polyphonic; Time Variant modulators are monophonic. The Script Voice Start modulator also has a code area and can access the Message class. You can pass data to it from other MIDI scripts using GlobalRoutingManager.setEventData().

That same data can be read and written in scriptnode using the appropriate nodes, so you might not even need the pitch modulators and can put everything into the main scriptnode synth network.

-

Thanks for your answer, this may get me started on the right track.

I've not yet touched this side of HISE, and starting off by creating a custom sampler may not have been the best place to begin. My playback scripts are custom and so don't respond to the pitch modulator. I shall have to work out how the polyphonic data works and see if I can communicate it to my node.

Are there any tutorials or guides for this Hise synth control stuff? I've not seen it before.

Thank you.

-

@griffinboy Do you mean modulators? I'm sure David covered some of it on his channel. For Script Voice Start and co., no. You'll just have to brave it, but feel free to ask. I made some seriously complex stuff using all of these.

Do you have any experience with Kontakt?

-

Yes, lots of kontakt experience.

Just browsing through the Event. stuff in the docs now.

This is looking very familiar. I think it's just a case of learning the Hise equivalents for everything. I am rather hoping that HISE has everything I need for sending the data.

Message.setFineDetune(int cents)

^ I noticed this which caught my eye.

Detune per voice is essentially what I'm wanting to communicate.
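`setFineDetune()` works in cents. If your own playback node reads through the sample at a variable speed, each voice's detune has to become a speed ratio, where 1200 cents is one octave (double speed). Below is a small sketch of that conversion, modelled in TypeScript since HiseScript only runs inside HISE. The per-voice table keyed by event ID is a hypothetical data layout, chosen so two voices of the same note can hold different values:

```typescript
// Converts a detune in cents to a playback-speed ratio: 2^(cents / 1200).
// +1200 cents doubles the speed, -1200 cents halves it.
function centsToRatio(cents: number): number {
  return Math.pow(2, cents / 1200);
}

// Hypothetical per-voice state: keyed by event ID, not by note number, so two
// voices of the same note can carry different detune values.
interface VoiceDetune {
  eventId: number;
  noteNumber: number;
  cents: number;
}

const voices: VoiceDetune[] = [
  { eventId: 101, noteNumber: 60, cents: 7 },
  { eventId: 102, noteNumber: 60, cents: -12 }, // same note, different detune
];

for (const v of voices) {
  console.log(v.eventId, v.noteNumber, centsToRatio(v.cents).toFixed(4));
}
```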

I'm looking for a way to send fine pitch bends from the UI script: continuous control for each voice.

-

```javascript
const var outputBuffer = Synth.getDisplayBufferSource("ScriptFX1").getDisplayBuffer(0);
```

^ Unfortunately, no, I don't think you can get a reference via this method.

At least, it doesn't work for my External Audio File slot. I'm not cut out for this sort of thing.

Give me analog simulation any day, but this will stump me.

-

@griffinboy said in How can you reference External Audio File slot?:

Yes, lots of kontakt experience.

Just browsing through the Event. stuff in the docs now.

This is looking very familiar. I think it's just a case of learning the Hise equivalents for everything.

It's very similar. HISE differs in places where it needs to be more flexible to allow for all the extra features and the event hierarchy.

https://forum.hise.audio/topic/8602/coming-over-from-kontakt-read-this

The important thing is: events move through the MIDI scripts within a processor in order, from top to bottom, then propagate to child processors in parallel. They always keep the same ID, so you can catch events created above further down the line.

Is it detune per voice or per note? Is it microtuning or will live voices pop up in your interface?

If there's no formula for targeting the voices (e.g. notes of a scale for microtuning), you'll have to use globals. Declare a global object somewhere high up the chain; I do it before the interface script:

```javascript
global Event = {};
```

You can't create properties dynamically in a MIDI callback, so create them up front, and also call reserve() on any arrays you use in realtime callbacks.

Maybe FixObjectStack is a good idea here, but I have not used it much.

If you have a fixed number of playing notes (like a guitar), it's just an array that you store them in. If that array lives in the global object (Event.noteIds), you can access it back up in your interface script. From the control callbacks, you can then call GlobalRoutingManager.setEventData() to send new data to those events, since you now have the IDs of the notes that are currently playing (stored in your Event.noteIds array).

This data will then be picked up either in your realtime Synth timer further down, where you do the MIDI processing (read it with getEventData() and do whatever you want with it), or within scriptnode using event nodes.
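Here is a TypeScript model of that flow. `EventDataStore` is a hypothetical stand-in that only mimics the shape described here (event ID plus data slot maps to a value, as with GlobalRoutingManager.setEventData() / getEventData()); it is not HISE's implementation. The six-slot array assumes a guitar-like instrument with a fixed number of notes, and the slot index is made up:

```typescript
// Hypothetical stand-in for the routing manager's event data:
// one value per (eventId, dataSlot) pair.
class EventDataStore {
  private data: { [key: string]: number } = {};

  setEventData(eventId: number, slot: number, value: number): void {
    this.data[eventId + ":" + slot] = value;
  }

  getEventData(eventId: number, slot: number): number {
    const v = this.data[eventId + ":" + slot];
    return v === undefined ? 0 : v; // model choice: unset slots read as 0
  }
}

// Shared model, created once up front (the `global Event = {}` object in HISE).
// Fixed size: one entry per string of a guitar-like instrument; -1 = silent.
const NUM_STRINGS = 6;
const noteIds: number[] = [];
for (let i = 0; i < NUM_STRINGS; i++) noteIds.push(-1);

const DETUNE_SLOT = 0; // hypothetical data slot index
const store = new EventDataStore();

// MIDI side: remember which event is playing on which string.
function onNoteOn(stringIndex: number, eventId: number): void {
  noteIds[stringIndex] = eventId;
}

// Interface side: a control callback pushes a value to every playing event.
function onDetuneKnob(cents: number): void {
  for (const id of noteIds) {
    if (id !== -1) store.setEventData(id, DETUNE_SLOT, cents);
  }
}

// Usage: two strings playing, the knob moves, then the realtime side
// reads the value back per event.
onNoteOn(0, 11);
onNoteOn(3, 12);
onDetuneKnob(25);
console.log(store.getEventData(11, DETUNE_SLOT), store.getEventData(12, DETUNE_SLOT));
```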

-

mmm. Thanks for your explanation.

Yes, the detune is per voice. There will be situations where several instances of the same note are triggered and held at once.

And each voice (despite them being the same note) needs its own playback speed modulation.

Sounds like this is unfortunately more complex than I anticipated. I think this shall take me some time. Kontakt is certainly nice when it comes to this kind of thing; I remember I scripted my entire end goal in an evening : /

It shall certainly not be the case here. To be specific, I am aiming to script a hierarchy of control.

First I need to be able to collect all notes separately and control the pitch of each note.

Then, within each note, I need access to the pitches of the voices belonging to it.

Notes don't need to know about each other.

But the main system needs to create and set this pitch data for any note that starts playing. It's quite a procedural thing.

-

- Create a global Event = {}; at the start of your interface script, or in a separate script before it.

- In your synth MIDI script, store every note you play in the Event object. Decide on a data model, initialise the necessary properties, and call reserve() on any arrays that you create in the Event object.

- Access your note IDs in the interface script. You can use 2D arrays to keep track of the events played per note; just remember to call reserve() on all of them.

- Write data to the manager using setEventData().

- Use the data back down in the synth MIDI processor, either in the timer callback or in scriptnode itself, using event nodes to access the slot. When the network is polyphonic, a separate stream of the network is created per voice, so the polyphonic nodes work independently. You cannot see an individual voice's stream or its per-voice node parameter values, so you have to use your ears here: the network always shows the last voice played and what its nodes are doing.
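The data model from these steps can be sketched in TypeScript (a model, not HISE code): a fixed array of 128 note numbers, each holding the event IDs of the voices currently playing that note, so duplicates of the same note are tracked separately. The class and method names are hypothetical. The capacity constant stands in for the size you would pass to reserve(); TypeScript arrays can still grow, so the explicit capacity check is what models "no allocation in realtime callbacks":

```typescript
// Hypothetical voice registry: note number -> event IDs of its playing voices.
const NUM_NOTES = 128;
const MAX_VOICES_PER_NOTE = 8; // capacity you would reserve() up front in HISE

class VoiceRegistry {
  readonly voices: number[][] = [];

  constructor() {
    // Build every per-note list up front instead of inside note callbacks.
    for (let n = 0; n < NUM_NOTES; n++) this.voices.push([]);
  }

  // Called on note-on with the new event's ID; returns false if the note is full.
  add(noteNumber: number, eventId: number): boolean {
    const list = this.voices[noteNumber];
    if (list.length >= MAX_VOICES_PER_NOTE) return false;
    list.push(eventId);
    return true;
  }

  // Called on note-off for that event.
  remove(noteNumber: number, eventId: number): void {
    const list = this.voices[noteNumber];
    const idx = list.indexOf(eventId);
    if (idx !== -1) list.splice(idx, 1);
  }
}

// Usage: two voices of middle C held at once, then one released.
const registry = new VoiceRegistry();
registry.add(60, 1001);
registry.add(60, 1002);
registry.remove(60, 1001);
console.log(registry.voices[60]);
```

The interface side would then loop over `registry.voices[note]` and write a separate value for each event ID, which is what lets each voice of a repeated note get its own pitch.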

-

Thank you, you've been very helpful.

I think I shall start by figuring out the Event and midi system.

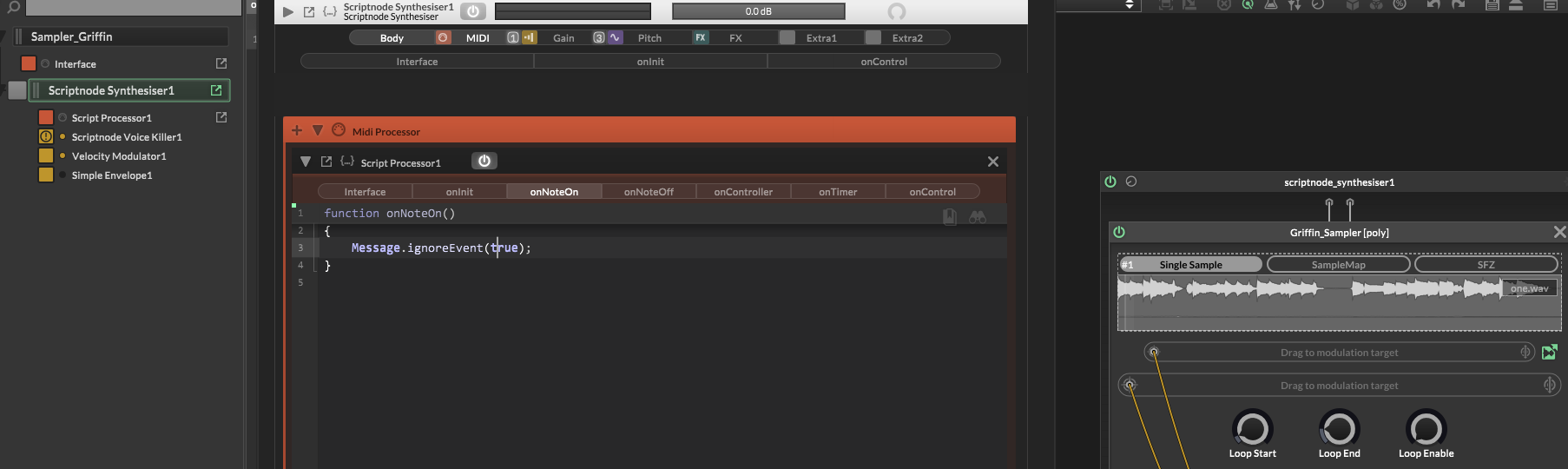

Something that perhaps makes this a 'little' easier is that I realised in this design I will be intercepting all of the midi data, and writing my own based on the input. It's 100% controlled, so I am going to start with figuring that out.

Success.

-

@griffinboy You can use Message.ignoreEvent(true); that specific callback will still execute, but the input message will not go beyond that processor.

If you then spawn 5 events using Synth.play(), the child synths (but not the MIDI scripts within the same processor, i.e. sibling MIDI scripts) will receive 5 messages, and isArtificial() will return true for all of them.

They keep the same IDs that Synth.play() returned when you played them in the parent's MIDI script, so you can look them up in your global object and do individual processing down the line (in case you need delaying, ignoring, etc.).
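A toy TypeScript model of that routing shows why the shared IDs matter. Everything in it is hypothetical (`ToyEvent`, `nextId`, `parentOnNoteOn`); it only encodes the three rules stated above: the ignored input stops at this processor, the spawned events are artificial, and the children see the same IDs the parent got back when it played them:

```typescript
interface ToyEvent {
  id: number;
  note: number;
  artificial: boolean;
}

let nextId = 1000; // hypothetical event ID counter

// Parent MIDI script: ignores the input and spawns `count` artificial events.
// Returns the events forwarded to child processors.
function parentOnNoteOn(input: ToyEvent, count: number, spawnedIds: number[]): ToyEvent[] {
  const forwarded: ToyEvent[] = []; // the ignored input is never added here
  for (let i = 0; i < count; i++) {
    const ev: ToyEvent = { id: nextId++, note: input.note, artificial: true };
    spawnedIds.push(ev.id); // the ID the parent keeps (what Synth.play() returns)
    forwarded.push(ev);
  }
  return forwarded;
}

// Usage: one incoming note-on becomes five artificial events for the children.
const spawned: number[] = [];
const childReceived = parentOnNoteOn({ id: 1, note: 60, artificial: false }, 5, spawned);
console.log(spawned, childReceived.map((e) => e.id));
```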

-

Fantastic, thanks again for your help.

-

One last thing: You wouldn't happen to know how to stop Hise from killing voices with duplicate notes?

Unfortunately I am very foolish when it comes to Events and HISE. It's a completely new area for me.

-

For the sampler, check its settings: there's a voice handling dropdown.

-


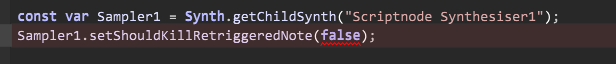

@griffinboy Get a reference to your custom synth and call

```javascript
Synth.setShouldKillRetriggeredNote(false);
```

This is on by default.
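The effect of that flag can be pictured as a toy voice list in TypeScript. The names are hypothetical and this models the behaviour described in this thread, not HISE's voice allocator: with killing enabled, a new note-on for a note that is already sounding stops the older voice; with it disabled, both voices keep playing:

```typescript
interface Voice {
  eventId: number;
  note: number;
}

// Starts a voice and returns the IDs of the voices that were killed.
function noteOn(active: Voice[], note: number, eventId: number, killRetriggered: boolean): number[] {
  const killed: number[] = [];
  if (killRetriggered) {
    // Walk backwards so splice() does not skip elements.
    for (let i = active.length - 1; i >= 0; i--) {
      if (active[i].note === note) {
        killed.push(active[i].eventId);
        active.splice(i, 1);
      }
    }
  }
  active.push({ eventId, note });
  return killed;
}

// Usage: the same note played twice, with and without killing.
const withKill: Voice[] = [];
noteOn(withKill, 60, 1, true);
noteOn(withKill, 60, 2, true);

const withoutKill: Voice[] = [];
noteOn(withoutKill, 60, 1, false);
noteOn(withoutKill, 60, 2, false);

console.log(withKill.length, withoutKill.length);
```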

-

griffinboy marked this topic as a question on

-

griffinboy has marked this topic as solved on

-

griffinboy marked this topic as a regular topic on