How to Create Artificial Notes of a Specific Duration

-

I've almost got this working—can anyone point me in the right direction, please? Cheers.

Goal

When a MIDI note comes in, replace it with the same note, but with a fixed duration.

Code

Realtime Thread: Note-on

    Message.makeArtificial(); // Why doesn't this need to happen????????
    //
    // Message.ignoreEvent(true);

    // Replace the incoming note-on with a new one. We'll keep the event ID in a global variable, so it can be
    // recalled in the Note-Off Callback.
    eventId = Synth.playNote(noteNumber, noteVelocity);

Realtime Thread: Note-off

    if (Synth.isArtificialEventActive(eventId))
    {
        // Delay the note-off by the duration specified in the knob.
        Synth.noteOffDelayedByEventId(eventId, Engine.getSamplesForMilliSeconds(g_extendedDurations.fixedDurationInMs));

        // What note is this?
        Message.ignoreEvent(true);
    }

-

@clevername27 You need to put your delayed note-off in the onNoteOn callback, after you've created the new note.

-

@d-healey yes.

Also, keep in mind that this delayed note-off will not trigger the onNoteOff callback of this processor, only those of its children.
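To make that concrete, here's a toy model in plain JavaScript. It is not HISEScript and not how HISE is implemented internally; ToyProcessor, scheduleNoteOff and flush are hypothetical names. The idea it illustrates: a note-off the processor schedules for itself is handed to its children, so its own onNoteOff never sees it.

```javascript
// Toy model only. ToyProcessor, scheduleNoteOff() and flush() are
// hypothetical names, not part of the HISE API.
class ToyProcessor {
  constructor(name, children = []) {
    this.name = name;
    this.children = children;
    this.received = []; // note-offs that reached this processor's onNoteOff
    this.queue = [];    // events handed on to the children
  }

  onNoteOff(event) {
    this.received.push(event);
  }

  // Rough stand-in for Synth.noteOffDelayedByEventId(): the note-off is
  // queued for the children instead of re-entering this processor.
  scheduleNoteOff(eventId, delaySamples) {
    this.queue.push({ type: "noteOff", eventId, delaySamples });
  }

  // Deliver every queued event to every child, then clear the queue.
  flush() {
    for (const event of this.queue) {
      for (const child of this.children) child.onNoteOff(event);
    }
    this.queue = [];
  }
}

const child = new ToyProcessor("Sampler MIDI script");
const parent = new ToyProcessor("Container MIDI script", [child]);

parent.scheduleNoteOff(42, 22050);
parent.flush();

console.log(parent.received.length); // 0: the parent's onNoteOff never fires
console.log(child.received.length);  // 1: the child gets the note-off
```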

-

@d-healey Thank you, got it. @aaronventure Thank you, and there I go again forgetting about the children.

For anyone who finds this thread with the same question, here's the answer, with comments:

Note-On

    var noteNumber = Message.getNoteNumber();
    var midiChannel = Message.getChannel();
    var noteVelocity = Message.getVelocity();
    var eventId = Message.getEventId();

    // Delete the incoming MIDI note.
    Message.ignoreEvent(true);

    // Replace the incoming note-on with a new one. We'll capture the Event ID so the note-off we create answers this note-on.
    eventId = Synth.playNote(noteNumber, noteVelocity);

    // Delay the note-off by the duration specified in the knob.
    Synth.noteOffDelayedByEventId(eventId, Engine.getSamplesForMilliSeconds(g_extendedDurations.fixedDurationInMs));

Note-Off

    // Nothing.
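If it helps to see what the child processors end up receiving, here's a plain-JavaScript model of the same idea. It is not HISE code: fixedDuration() is a hypothetical helper, events are plain objects, and timestamps are in samples. Every incoming note-on is swallowed and replaced by a new note-on plus a note-off a fixed number of samples later. The model assumes the original note-off has no effect on the new note (it carries the old event ID), so it simply leaves it out.

```javascript
// Model of the fixed-duration pattern. fixedDuration() is hypothetical.
function fixedDuration(incoming, durationSamples) {
  const toChildren = [];
  let nextId = 1000; // stand-in for the event ID returned by Synth.playNote()

  for (const e of incoming) {
    if (e.type === "noteOn") {
      // ignoreEvent(true) + playNote(): forward a new note-on instead.
      const id = nextId++;
      toChildren.push({ type: "noteOn", id, note: e.note, velocity: e.velocity, time: e.time });
      // noteOffDelayedByEventId(): the matching note-off, durationSamples later.
      toChildren.push({ type: "noteOff", id, note: e.note, time: e.time + durationSamples });
    }
    // Assumption: the incoming note-off doesn't match the new event ID,
    // so the model drops it; the scheduled note-off replaces it.
  }

  // Children see the events in timestamp order.
  return toChildren.sort((a, b) => a.time - b.time);
}

const out = fixedDuration(
  [
    { type: "noteOn", note: 60, velocity: 100, time: 0 },
    { type: "noteOff", note: 60, time: 5000 }, // key held much longer
  ],
  1000
);
console.log(out.map((e) => `${e.type} ${e.note} @${e.time}`));
// [ 'noteOn 60 @0', 'noteOff 60 @1000' ]
```

However long the key is actually held, the children see a note exactly 1000 samples long.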

Notes:

- This code goes in your real-time thread.

- Remember that the delay passed to Synth.noteOffDelayedByEventId() is measured in samples. Engine.getSamplesForMilliSeconds() converts from milliseconds using the current sample rate, so the duration stays correct whatever rate the host runs at.

- If you want to specify the MIDI channel, use Synth.playNoteFromUI(int channel, int noteNumber, int velocity).

- For extra insurance against stuck notes (in case the note-off somehow arrives before the note-on), you may wish to call Synth.setFixNoteOnAfterNoteOff(bool shouldBeFixed).

- There's an important point by @aaronventure I'll add here once I understand it. :)
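The arithmetic behind that conversion is just samples = ms × sampleRate / 1000. Here's a JavaScript sketch of it; samplesForMs is a hypothetical helper, and rounding to the nearest whole sample is an assumption rather than documented HISE behavior.

```javascript
// samples = milliseconds * sampleRate / 1000
// Hypothetical helper; the rounding is an assumption, not HISE's documented behavior.
function samplesForMs(ms, sampleRate) {
  return Math.round((ms * sampleRate) / 1000);
}

// The same 500 ms note length at different host sample rates:
console.log(samplesForMs(500, 44100)); // 22050
console.log(samplesForMs(500, 48000)); // 24000
console.log(samplesForMs(500, 96000)); // 48000
```

This is why hard-coding a sample count would give a different note length at 44.1 kHz than at 96 kHz.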

-

@aaronventure Can you please explain a little more—the code above is working for me. Within the context of your note, should it work?

-

clevername27 has marked this topic as solved.

-

@clevername27 said in How to Create Artificial Notes of a Specific Duration:

Synth.playNoteFromUI(int channel, int noteNumber, int velocity).

I'll get back to you on the code, but this one will trigger the onNoteOn callback of the processor you call it from (at least if you call it from the onControl callback), so that's also worth keeping in mind.

-

First of all, let's address the variable declarations inside the callback. I don't know whether something changed recently (maybe I missed it), but declaring variables there is unnecessarily expensive for a realtime callback that needs to be as fast as possible.

- use reg variables, declared in onInit; you get 32 per namespace

- if using arrays, call .reserve() to reserve the space for what you want to do, because dynamic expansion is expensive

- if using objects, initialize the properties in on init as well

- avoid string operations

The Audio Thread Guard should warn you about all of this, but it seems to be busted on macOS (it's enabled in my settings, but even string operations aren't tripping it).

Anyway, let's continue.

The HISE Event Guide

Please correct any mistakes I make; this is based on my own experience with multiple containers involving different numbers of children and grandchildren, and with writing complex event logic.

I also think we're in need of a Glossary of Terms covering all current and past terminology.

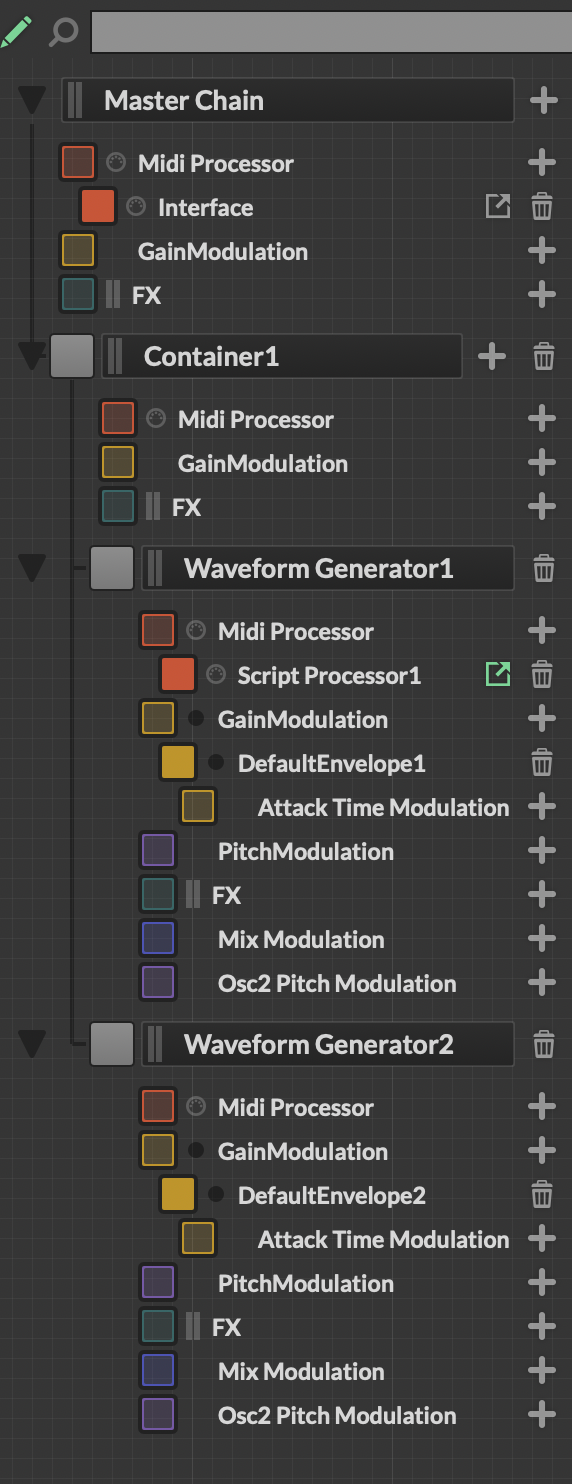

Here's a very simple plugin architecture: a container with two Waveform Generators (WGs). The reason to put two or more of anything into a container is to avoid duplicating the MIDI and FX processing, and possibly to delay or police events.

Before propagating events to child processors, HISE checks the Event Queue. The Event Queue is a list of all the events that need to be sent to the child processors; each event has a type and a timestamp.

When HISE receives MIDI, it enters the very first MIDI processor in the Master Chain container, which is always the topmost container in any project. All Sound Processors not parented by a Container module are direct children of the Master Chain container. The master chain reacts directly to external MIDI input.

At this point you can process MIDI. If you want to react to note input in the interface script, you should defer it so that repaints and the like are not executed on your realtime thread. This means that any playback logic, delaying or filtering needs to be done in subsequent or child processors.

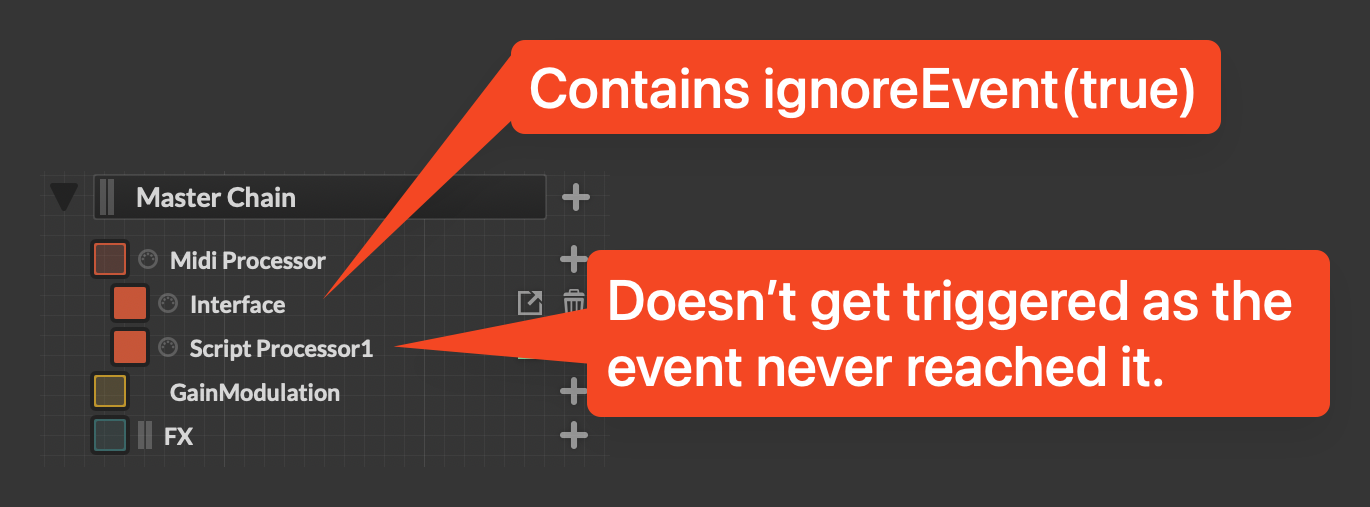

Once the event leaves the queue, it travels through the MIDI processors in order. This is how you do filtering.

Once it has passed through the final MIDI processor, it propagates to the children, if any.
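A minimal sketch of that flow for a single container, in plain JavaScript rather than HISEScript (runContainer and the processor functions are hypothetical, and this is a model, not HISE's implementation). A MIDI processor returning false stands in for Message.ignoreEvent(true); whatever survives the whole chain is pushed to each child.

```javascript
// Toy model of one container: MIDI processors run in order, then the
// surviving event goes to every child. All names are hypothetical.
function runContainer(event, midiProcessors, children) {
  for (const processor of midiProcessors) {
    // Returning false mimics Message.ignoreEvent(true).
    if (processor(event) === false) {
      return; // later sibling processors and all children never see it
    }
  }
  for (const child of children) child.push(event);
}

const seenBySecond = [];
const filter60 = (e) => e.note !== 60;                    // drops note 60
const logger = (e) => { seenBySecond.push(e.note); };     // second in the chain
const waveGen1 = [];
const waveGen2 = [];

for (const note of [60, 62, 64]) {
  runContainer({ type: "noteOn", note }, [filter60, logger], [waveGen1, waveGen2]);
}

console.log(seenBySecond);                // [ 62, 64 ]
console.log(waveGen1.map((e) => e.note)); // [ 62, 64 ]
```

Because the filter sits first in the chain, note 60 never reaches the second MIDI processor or either Waveform Generator.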

playNoteFromUI and noteOffFromUI simulate real event input: no matter where you call them, it's as if a real event was received.

Here's a funny snippet. Just play one note. Do not, by any means, turn off the Dynamics/Limiter. Maybe even turn your speakers down for good measure.

The print statement is only in the interface script. If you change it to use Message.isArtificial(), it will return 0 for all events, even though they were created in the child processor, because these methods are meant to replicate an external note event coming into HISE. You should only use them to simulate exactly that (clicking on a pad, playing a note on a custom keyboard, etc.).

HiseSnippet 1168.3oc4XszaaaDDlzxzHVosn4QQ5QdnGjaMLDUjeTjBXGaYmHzHaAKGm1SoaVNzZgI2kfboaEJ5+s9Sn+D5sdMW6o1YIoLWJw3JK3ljlpCFZdsy2NO1Yj6GInPbrHxvr9IiBACyOxZvHtb3dCILtQ2NFlehUORrDhryXs6nPRbL3ZXZV6IJFlKunQ5mWu8tDeBmBErLLNUvnvyXALYA2967sLe+CHtvIr.MsauSWpfumvWjf3olUSiPB8bxYvgDkZKXY7TR7PCyuzx0osi2VTxFa4ztEk1ZqMa80aRAhmGrgy5a1dKu1dDZyVFlKsuKSJhFHIRHFOzcEtiFLT7i7LGbJKl8JePQ3XL.8bFai8Fx7c6ON3DaXXZ0uHTUKKTceqdLW1k7KBYeZp.6BKzCZlKbUPx4Z.ISMHsXFjti0.ZDKTVHQgmaa0kiYPLj.kfRltFl+g0dBTAtbs.x4vAQHwkFzXilMW0F+yJOxKgSkLA2VvOTHgi3MVo9OWeYz1XgOrVXDiKazCcKlxV6LPpT5vjfWAQMVYkGU+WpaO4I34kcDSHSglHguuxvJDqpZhtJCavS85p1WP7SfKUDiRkC8KMagdZVvQSQAuKmIOJDxoOP36pBopuOchxHO5he64c6PjDUtKmGpWHDIYJ3X1At.6VxxjKa0AhOWJBw9koRyXAlvMwmHKW0o5GyEfwiRoZU9jGyjiz6WuFkhMuxRwYEh2wpOSRGVMFWnBLhQp+MvXdC7GasO9hAUV.vEsN36l2t0lyd25cy7ecqNi3j.FMNuMcLoyDOyJg84DzMt5QFE6SFFAwCwpuIE7XoDe6TOYq3dL3CjXsmb+9c1SDDFkBqJbQgvJcTg3iUYScuUHZRj7EZtrB7jNq.lFLud6bIShjevy6a90bYSeqyELkizQQO7QujP8KVtUSKvr1Mzi2FGKRjL9Y8HxH1OgiWvGJGfy8n.VLx4fOd3VlKnBxYzMUzJHL.3toD+E9IWnih1LWnyXg5U7OHqh61VufbAjNgOsj6yRo8DQA1OA3Pjpc04Jlw+ay5L9vYdF+QTIBgShH73PQL3nexCf.1IBNDWh6DVzpRKJwsCHS3kO5LVkzREJN.CEZ5cqcFyrkNy9DdoCCoKcR8Tozwj+91YEyC.7l6dTLECLp3rdYZ+D+X3ELW4PGcCKX2Rm8SIQtXNjdUUlKNaUlkGYnoeEufsz6fUfZdCsBz8x4VfHmJWE5OsreCq4j1zrVnOYjhKtjTvy6pusSdqXiUV09MrCzWsdIQmB9BJNs68zkirtoVN5+iKDUpvb4wXb.KHzGGkeAl4QNJLdW7V4QR7ki4VtrrmfKBGJ3rR85GC3PiyNCJ8DRkWnIGHd+cpXR3yXbfDgwIXNiEW+emRk4qO2JCt1pZX6+6tHasOnWjc169vofuemEefENMtkcZpz9cSt7swZfuM7Q.gFIdIMaDipo+Vobv6MO8edyxV8Tz1NFoiczClA3hBujRKeTSYXq40vGNuF1ddMb840vMlWC2bdMbq+YCU6w83DoHHq2.Wts+9oyrMMu7GoYVy3uAKaAdnB

Any other playNote methods will inject the event into the Event Queue, so it will not be picked up by the subsequent sibling MIDI processor.

HiseSnippet 1160.3oc4X8taaaCDWxIJn1caXsqCceTnXevYHH0x0MICECM+wNsFqNwHNKc.CCErTmrIhDo.IcZMF1qzdV1ivdD5av1QI4HoTCOWutlNL+g.eG4c2Od+2ouTPAkRHsrqc5jXvx9SbFLgqGcvHBia0ssk8m4zinzfzMk09ShIJE3aYauxSLLrqtpUxm2738IgDNExYYYclfQgmwhX5bt8286YggGR7gSYQEtcqc6RE7CDghwHdVwogULgdNYHbDwbsJNVOknFYY+MN9ds7B1gR1ZGuVMozl6rcyucaJPBBfs7d31s1InU.g1nok8Zc7YZgbflnAEpz8E9SFLR7JdpANioXuLDLDdVCPKmx15fQrP+9ScNJKKam94tpURcU2woGymcI+bW1mmbfatDEcZ1UlGj7dGfTkBPZ0THcKmATIKVmehAO2zoKGifnKAJAkz6ZU42rcNPf2fq2LhbNbnDItTh5a0nwFt3eV+Q0pIggtvE3EY9OJXLmpYBtqfejPCGyqudseoVUTSJQHrYrjw006gn.ifaxT6I0r.FkQBquNpppWdxPtPBcLJstVNFLmUqZlQb+N2jzwMiCISLV4RENDzF5iFG8RPVe8MbKbvYPnfxzSRMTpB3FHFDzFPEA96OIwfc8qmYnMb6vGx3IhOfDEGBpCExdXdJa.fYk9p5dMPe.pvesl6Ue5AAou8qblwoJEggF.NiiMY+x4IXcdxqaC2KHgniY5Ewnc4Tn0VrTHZZLtvEE7tbl93XHi9PQnuI0v782NgyJKIA+1OzsMQSvbP6Ld38hALBafica3BrpOMirpSaPctVDi08KX55WjwMu.xaVos1uwwjN9ZLG4dJe08xROIH8O8y+yxNcu+8ceEF6cwrFWsjMbHH+HLt679Jt+gHViMEE9iCI5xcJMyPxN.8AkhylVPbEVGWbFy6P6yFys84hBwa4zmooilMFqLCLZ5L+u.FyF57oNcvobTcN.W04vebYmvr3l+tol+lNOmbAjzRMw3eYBcfPF49DfCRiuyaNKI76K5RBwK7RBGS0HDNUR3pXgB7Jp4APD6TAGTk3dEIZNSIJwsMnGyKq5TVktkwUfSMhJbuar6TlMKxrOgWRYHcIM0i85bx+3wc3DLfkNH5XEEcLF+bwxh9iCUvyY95QdEELmcyhreJQ5iwPZoj1UJm0r5h0bob8ag6OiEVV6ZXGp2OMAtF6SYaW.iUmhwALyNJc3Wfq5fbLX71X22.x3P8TtkqC6I3h3QBNqTT+DHa7VQrOyGzdZMtMdNm6r6IPHPTEpM+5ceFtBEQh9IXI8EdKtuXdwquxIEttllGt+2c9xJ+uY9x7q9v9gebGEuqC1WtoaRnz85IVdhXrlwG1ifUz3zCG7mFM.GRRADkbNDZVXzthY0tT5FF5zQdb+Dh+D+jcnmg1N6PuoG9AwFQDpT7BZ5pvlh9ajvAe27j+O.Uc5Ync8rRVOtnyLBGY7BJsrpdKAatrB9fkUvVKqfObYEbqkUvsWVA24uWPyD88FqEQo0F3ZN86j7aKrsS2vIoLw5u.nPNWjB

The same thing happens if you call delay on an event (even the event that triggered the callback). That specific callback will still have all of its code executed, and if you didn't ignore the event, its timestamp in all subsequent event queues will be adjusted by the given delay value.

Again, where does the event queue get evaluated? That's right, right after the final MIDI processor of a container is processed and the event gets propagated.
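Here's a rough model of that ordering in plain JavaScript. It is not HISE's implementation: runContainerWithQueue and the api object are hypothetical stand-ins for Synth.playNote() and delaying the current event. A MIDI processor adds an artificial note (it goes into the queue, so the next sibling MIDI processor never sees it) and delays the original by 100 samples; the queue is only evaluated after the last MIDI processor, and the children get everything in timestamp order. In this model delay() shifts the timestamp immediately and the sibling still sees the original event; it makes no claim about exactly when a sibling processes a delayed event in HISE.

```javascript
// Toy model of a container's Event Queue. All names are hypothetical.
function runContainerWithQueue(incoming, midiProcessors, children) {
  const event = { ...incoming }; // copy so the caller's object is untouched
  const queue = [];
  let ignored = false;

  const api = {
    ignoreEvent: () => { ignored = true; },
    // Stand-in for Synth.playNote(): goes straight into the queue,
    // not back through the MIDI processor chain.
    playNote: (note, time) => {
      queue.push({ type: "noteOn", note, time, artificial: true });
    },
    // Stand-in for delaying the current event by a number of samples.
    delay: (samples) => { event.time += samples; },
  };

  for (const processor of midiProcessors) {
    processor(event, api);
    if (ignored) break; // later siblings never see an ignored event
  }
  if (!ignored) queue.push(event);

  // The queue is evaluated only now, after the last MIDI processor,
  // and handed to each child in timestamp order.
  queue.sort((a, b) => a.time - b.time);
  for (const child of children) child.push(...queue);
}

const seenBySibling = [];
const addFifth = (e, api) => {
  api.playNote(e.note + 7, e.time); // artificial note, queued for the children
  api.delay(100);                   // the original reaches children 100 samples later
};
const spy = (e) => { seenBySibling.push(e.note); };
const sampler = [];

runContainerWithQueue({ type: "noteOn", note: 60, time: 0 }, [addFifth, spy], [sampler]);

console.log(seenBySibling);                              // [ 60 ]
console.log(sampler.map((e) => `${e.note} @${e.time}`)); // [ '67 @0', '60 @100' ]
```

The artificial 67 only shows up in the child, which is why any logic that reacts to it has to live in a child processor.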

This means that if you now want to do logic involving your artificial event, you need to do it in a child processor. This is where containers come in: along with the Synthesizer Group, they are the only type of processor capable of having children.

The Synthesizer Group offers voice grouping, so you can propagate modulation to the children as well.

If you wish to delay an event, you need to do it in a Container which contains your targets.

Containers are also useful for filtering events and addressing edge cases in weird user input, as you can filter out events for given scenarios and prevent them from propagating to any of your synths or samplers.
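As an example of that kind of policing, here's a hypothetical filter in plain JavaScript (not HISEScript; makeRetriggerFilter is an illustrative name, and the policy is just one possible choice). It drops a note-on for a key that is already sounding, e.g. a duplicate from a glitchy controller, and also drops the matching extra note-off, so the note keeps sounding until the last release. Returning false stands in for Message.ignoreEvent(true).

```javascript
// Hypothetical edge-case filter for a container's MIDI processor.
function makeRetriggerFilter() {
  const held = new Set();      // notes currently passed through and sounding
  const swallowed = new Map(); // note -> duplicate note-ons dropped so far

  return (e) => {
    if (e.type === "noteOn") {
      if (held.has(e.note)) {
        swallowed.set(e.note, (swallowed.get(e.note) || 0) + 1);
        return false; // like Message.ignoreEvent(true)
      }
      held.add(e.note);
      return true;
    }
    // Note-off: the first ones are treated as belonging to the dropped
    // duplicates, so the note sounds until the last key is released.
    const pending = swallowed.get(e.note) || 0;
    if (pending > 0) {
      swallowed.set(e.note, pending - 1);
      return false;
    }
    held.delete(e.note);
    return true;
  };
}

const filter = makeRetriggerFilter();
const events = [
  { type: "noteOn", note: 60 },
  { type: "noteOn", note: 60 }, // duplicate
  { type: "noteOff", note: 60 },
  { type: "noteOff", note: 60 },
];
const passed = events.filter(filter);
console.log(passed.map((e) => e.type)); // [ 'noteOn', 'noteOff' ]
```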

To summarize:

- events travel from one processor to the next, and then propagate to child processors, if any

- all processors are descendants of the Master Chain container (also known as the Daddy/Mommy Container)

- Message.ignoreEvent(true) prevents the event from leaving the MIDI processor that called it, so even subsequent sibling MIDI processors won't receive it

- the Event Queue is a list that holds all the events that are to be handed off to the child processors

- the Event Queue is evaluated after the final MIDI processor within a container is executed, but before the event gets propagated to any child processors

- ...FromUI methods simulate external events

- any artificial events are not executed immediately like in Kontakt, but instead get put into the Event Queue

- any processing or logic involving the artificial events needs to be done in child processors, once these events trigger the corresponding callbacks

-

@aaronventure My dude - thank you! This is fantastic…truly appreciate the detail. This is all new to me and incredibly helpful—cheers!