NEATBrain Writeup

-

Hello!

I promised a full writeup on the new type of VI I'm working on, designed from the ground up to solve the following problems I personally have with Sample Libraries:

- Large File Sizes

- Limited Round Robins (Restricted further by large file sizes)

- Limited tone-sculpting & End-User Parameters

- Limited Keyrange

- They're annoying to make

After about a year and a half of noodling and trying pretty much every approach (Modal Synthesis, Neural Synthesis, Neural Style Transfer, Karplus-Strong, Wavetable Synthesis, etc.), I'm finally happy to say I've found a method that solves all of these problems and more.

I'm calling it NEATBrain, and it's the successor to my previous, now-deprecated NEAT Player.

NEATBrain is a tonally consistent hybrid synthesizer for one-shot style instruments such as Guitars, Bass, Tuned and Untuned Percussion (including things like Glocks and Marimbas), Pianos and more. It works by combining Digital Waveguides & Residue Sampling, but the real magic is the backend I spent several months developing; I'll talk about that a bit later in this writeup.

Digital Waveguide Synthesis

Basically, this is what the Wavetable Synth does: it loops a single cycle of the original waveform, with the cycle length set by the fundamental frequency. The equation is very simple:

```javascript
const sampleRate = 44100.0;
const root = 440.0;
var cycleLength = sampleRate / root; // ~100.23 samples per cycle
```

Assuming our sample is tuned to exactly 440.0 Hz, we can set the `loopStart`, `loopEnd` and `fadeTime` parameters of our Sampler to turn it into a Pseudo-Wavetable Synthesizer. However, because we're using a Sampler, we avoid the instability of the Wavetable Module AND we can easily make use of Round Robins (currently I'm using 15!). We can apply this single-cycle approach to every note in the playable range, as long as the samples are tuned perfectly.
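One catch worth checking: 44100 / 440 isn't a whole number, but loop points sit on whole samples. The sketch below shows how much rounding the loop detunes the note. It is written in TypeScript purely for illustration (it isn't HiseScript), and `planLoop` is a hypothetical helper. It assumes the loop spans a whole number of cycles of an already perfectly tuned sample.

```typescript
// How much does rounding a loop to whole samples detune it?
// Assumption: the loop spans `cycles` whole periods of a perfectly tuned note.
interface LoopPlan {
  loopLength: number; // loop length in whole samples
  playedHz: number;   // frequency the loop actually repeats at
  centsError: number; // deviation from the target pitch, in cents
}

function planLoop(sampleRate: number, rootHz: number, cycles: number): LoopPlan {
  const cycleLength = sampleRate / rootHz;            // 44100 / 440 ~ 100.23 samples
  const loopLength = Math.round(cycles * cycleLength); // loop points land on whole samples
  const playedHz = (cycles * sampleRate) / loopLength; // `cycles` periods per pass of the loop
  const centsError = (1200 * Math.log(playedHz / rootHz)) / Math.LN2;
  return { loopLength, playedHz, centsError };
}

const single = planLoop(44100, 440, 1); // 100 samples, repeats at 441 Hz
const ten = planLoop(44100, 440, 10);   // 1002 samples, repeats at ~440.12 Hz
```

Under these assumptions, a single-cycle loop lands about 3.9 cents sharp, while a ten-cycle loop stays under half a cent. Whether the Sampler's crossfade masks the remainder is something to confirm by ear.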

How do we tune them perfectly? Using Loris, of course! The backend I mentioned earlier takes a group of Samples, usually about 3 notes, and resynthesizes them across the keyRange without any Pitch-Shifting artifacts. And since we only need the start of each sample (we're looping the waveform indefinitely), we can Truncate (shorten) the audio files to massively reduce Memory Usage. I'm keeping about 20% of the sample length, so I get to keep the "Attack" as well.

So now we have a full keyrange of Waveguides derived from a very small group of samples. This makes the development process much more enjoyable for me, since I don't have to throw my back out recording a guitar for 2 hours. So far, though, we only have sine waves. What about the noisy part of the signal?
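For the truncation step, here is a minimal sketch. It keeps roughly the first 20% of a sample and parks the loop at the end of what's left, so the Attack plays once before the loop takes over. It is TypeScript for illustration, and `truncateForLoop` is hypothetical. Treating `loopEnd` as exclusive (one past the last looped sample) is an assumption, so check how your Sampler interprets loop points.

```typescript
interface TruncatedSample {
  samples: Float32Array; // the kept head of the sample (Attack + a little sustain)
  loopStart: number;     // first looped sample
  loopEnd: number;       // one past the last looped sample (assumed exclusive)
}

// Keep the first `keepFraction` of a sample and place a loop of whole cycles at its very end.
function truncateForLoop(
  source: Float32Array,
  keepFraction: number, // e.g. 0.2 keeps about 20% of the original length
  cycleLength: number,  // sampleRate / rootHz, in samples
  cycles: number        // how many periods the loop spans
): TruncatedSample {
  const keepLength = Math.min(source.length, Math.round(source.length * keepFraction));
  const loopLength = Math.round(cycles * cycleLength);
  if (loopLength <= 0 || loopLength > keepLength) {
    throw new Error("loop does not fit inside the kept region");
  }
  // Copy rather than just subarray(), so the full-length buffer isn't kept alive
  const samples = new Float32Array(keepLength);
  samples.set(source.subarray(0, keepLength));
  return { samples, loopStart: keepLength - loopLength, loopEnd: keepLength };
}

const original = new Float32Array(44100 * 4); // 4 s placeholder (silence) at 44.1 kHz
const kept = truncateForLoop(original, 0.2, 44100 / 440, 10);
```

With a 4-second sample at 44.1 kHz, keeping 20% leaves 35,280 samples, which is a fifth of that file's original memory footprint.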

Residue Sampling

The Residue is the noisy part of the signal - it's whatever is left behind when Loris resynthesizes a Sample. We can easily grab this as a buffer with the following code:

```javascript
var file = FileSystem.getFolder(FileSystem.AudioFiles).getChildFile("myCoolWav.wav");
var sr = 44100.0; // sample rate of the audio file (assumed 44.1 kHz)
var wt;
var rs;

var buffer = file.loadAsAudioFile();

// I ended up manually typing pitch values for consistency's sake
var f0 = buffer.detectPitch(sr, buffer.length * .2, buffer.length * .4);

lorisManager.analyze(file, f0);
wt = lorisManager.synthesise(file); // here's our waveguide
rs = buffer - wt;                   // and here's our noisy signal, which we can export to .wav
```

Now, when we play the Residue alongside the resynthesized sample, we get the original sound back (well, 99% of the time lol). So now I have two samplers: one playing the Waveguide, the other playing the Residue, which we simply stretch across the keyRange with Pitch Tracking turned OFF.
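The `rs = buffer - wt` step is simply a sample-by-sample subtraction. Playing both samplers together is a sum, so the two cancel back to the original. The minimal TypeScript sketch below is illustrative only and is not the HISE Buffer API. A sine wave stands in for the Loris resynthesis and random noise stands in for the pick attack; `residue` and `sumLayers` are hypothetical names.

```typescript
// Residue = original minus resynthesized (sinusoidal) part, sample by sample.
// Assumes mono buffers of equal length that are already time-aligned.
function residue(original: Float32Array, resynth: Float32Array): Float32Array {
  if (original.length !== resynth.length) {
    throw new Error("buffers must have the same length");
  }
  const out = new Float32Array(original.length);
  for (let i = 0; i < original.length; i++) {
    out[i] = original[i] - resynth[i];
  }
  return out;
}

// Playing the Waveguide and Residue samplers together is just a sum (equal lengths assumed).
function sumLayers(a: Float32Array, b: Float32Array): Float32Array {
  const out = new Float32Array(a.length);
  for (let i = 0; i < a.length; i++) {
    out[i] = a[i] + b[i];
  }
  return out;
}

// Synthetic stand-ins: a 440 Hz sine as the "resynthesis", plus low-level noise as the "pick".
const sr = 44100;
const n = 1024;
const resynth = new Float32Array(n);
const original = new Float32Array(n);
for (let i = 0; i < n; i++) {
  const tone = Math.sin((2 * Math.PI * 440 * i) / sr);
  resynth[i] = tone;
  original[i] = tone + 0.05 * (Math.random() * 2 - 1);
}

const rs = residue(original, resynth);  // the noisy part on its own
const rebuilt = sumLayers(resynth, rs); // both layers playing together
```

The cancellation only works if both buffers are the same length and time-aligned. Any offset between the resynthesis and the original leaks tonal content into the Residue.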

Organic Waveguide Decay & Idiosyncrasies

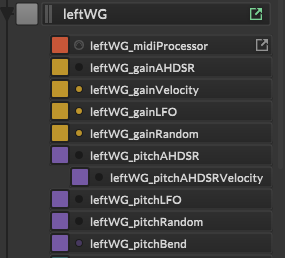

At this point, our looping Waveguide will play forever and sound quite synth-ey. Luckily, a lot of one-shot sounds simply decay in amplitude and high-end frequency content over time (think Karplus-Strong). We can recreate this digitally using a good old AHDSR Module, and expose its controls to the end user so they can tweak the sound until they're happy (there are some interesting sound-design opportunities here too).
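Here's a minimal TypeScript sketch of the idea. It is illustrative and is not HISE's AHDSR, whose curve shapes may differ. It loops one stored cycle indefinitely and multiplies it by an exponential decay, which stands in for the envelope's decay stage. The -60 dB decay-time parameter and `renderDecayingLoop` are assumptions of this sketch. It only models the amplitude side; the loss of high end over time would need a filter on top.

```typescript
// Loop one stored cycle and shape it with an exponential decay (a stand-in for an envelope's decay stage).
function renderDecayingLoop(
  cycle: Float32Array,  // one period of the waveform
  numSamples: number,   // how many samples to render
  sampleRate: number,
  decaySeconds: number  // time for the level to fall by 60 dB (hypothetical parameter)
): Float32Array {
  const out = new Float32Array(numSamples);
  // Per-sample multiplier: after decaySeconds * sampleRate steps the gain reaches 0.001 (-60 dB)
  const k = Math.pow(0.001, 1 / (decaySeconds * sampleRate));
  let gain = 1;
  for (let i = 0; i < numSamples; i++) {
    out[i] = cycle[i % cycle.length] * gain; // wrap around the single cycle forever
    gain *= k;
  }
  return out;
}

const sr = 44100;
const cycle = new Float32Array(100); // one period of a 441 Hz sine at 44.1 kHz
for (let j = 0; j < cycle.length; j++) cycle[j] = Math.sin((2 * Math.PI * j) / cycle.length);
const note = renderDecayingLoop(cycle, sr, sr, 1.0); // one second, down 60 dB by the end
```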

Because we've separated the Waveguide and Residue, we can process them individually. If we just lowpassed a regular sample, the noisy part would get filtered as well, which doesn't sound great. We can also add all kinds of fun things to the Waveguide now, like Modulation.
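To make the separation concrete, the TypeScript sketch below filters only the Waveguide layer and adds the Residue back untouched. It is illustrative: a one-pole lowpass stands in for whatever filter module you'd actually use, and `onePoleLowpass` and `mixLayers` are hypothetical names.

```typescript
// One-pole lowpass: y[n] = (1 - a) * x[n] + a * y[n - 1]
function onePoleLowpass(input: Float32Array, cutoffHz: number, sampleRate: number): Float32Array {
  const a = Math.exp((-2 * Math.PI * cutoffHz) / sampleRate); // feedback coefficient
  const out = new Float32Array(input.length);
  let y = 0;
  for (let i = 0; i < input.length; i++) {
    y = (1 - a) * input[i] + a * y;
    out[i] = y;
  }
  return out;
}

// Filter only the Waveguide, then add the Residue back unprocessed (equal lengths assumed).
function mixLayers(waveguide: Float32Array, residue: Float32Array, cutoffHz: number, sampleRate: number): Float32Array {
  const filtered = onePoleLowpass(waveguide, cutoffHz, sampleRate);
  const out = new Float32Array(filtered.length);
  for (let i = 0; i < out.length; i++) {
    out[i] = filtered[i] + residue[i];
  }
  return out;
}

const sr = 44100;
const silentWaveguide = new Float32Array(2048);
const click = new Float32Array(2048);
click[0] = 1; // a one-sample "pick" transient in the Residue

const mixed = mixLayers(silentWaveguide, click, 500, sr); // click passes through intact
const clickFiltered = onePoleLowpass(click, 500, sr);     // what lowpassing everything would do
```

A click in the Residue comes through the mix unchanged. Running it through the same filter would have smeared it to a fraction of its level, which is the artifact you'd get by lowpassing a regular sample.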

Real instruments pitch upwards when struck or plucked at a high Velocity, then settle back to their original pitch over time. Test it yourself: grab a guitar (or whatever) and pluck the string really hard; you'll hear the pitch flex upwards. This can be recreated with yet another AHDSR, this time as a Pitch Modulator. This is where our Synthesizer starts moving closer to Greybox Physical Modelling territory. We can extend this further with a Random Modulator on the Pitch. In fact, NEATBrain uses an AHDSR, an LFO and a Random Modulator for both the Gain and the Pitch.
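The TypeScript sketch below models that velocity-dependent pitch flex and is illustrative only. The note starts sharp by an amount that scales with velocity, then relaxes exponentially back to the root. The exponential shape, `maxCents` and `relaxSeconds` are assumptions of this sketch, not NEATBrain's settings. In HISE, an AHDSR's decay stage would play the same role.

```typescript
// Playback-rate multiplier for a pitch offset (in cents) that scales with velocity
// and decays exponentially back to zero.
function pitchRatioAt(
  timeSeconds: number,  // time since note-on
  velocity: number,     // MIDI velocity, 1..127
  maxCents: number,     // offset at full velocity (hypothetical)
  relaxSeconds: number  // exponential time constant of the return to pitch (hypothetical)
): number {
  const cents = maxCents * (velocity / 127) * Math.exp(-timeSeconds / relaxSeconds);
  return Math.pow(2, cents / 1200); // 1200 cents per octave
}

// A hard pluck vs. a soft one, 10 ms after the attack
const hard = pitchRatioAt(0.01, 127, 20, 0.05);
const soft = pitchRatioAt(0.01, 40, 20, 0.05);
```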

All of these controls are then exposed to the end user to play with.

Upper Register Frequency Damping

Recording a low guitar string and pitching it upwards with Loris can make it quite harsh, because Loris retains all of the harmonic information: every partial is shifted up along with the fundamental. Initially I tried using a Dynamic EQ in Scriptnode to remedy this, but I ended up just sampling some of the higher notes. Because we're just using Samplers, we don't need to think about Wavetable Index positions or anything like that; just map the higher samples normally and voilà, no more harshness.
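Here is one way to see why extra upper samples help. Repitching by n semitones multiplies every partial's frequency by 2^(n/12), the same kind of `targetPitch / rootFrequency` ratio shown in the backend section. A root that has to stretch 24 semitones therefore quadruples its whole spectrum. The TypeScript sketch below shows how adding one upper root shrinks the worst-case stretch. It is illustrative: `zonesForRoots` and `maxStretch` are hypothetical, and splitting each range at the midpoint between roots is an assumption about the mapping.

```typescript
interface Zone {
  root: number;    // MIDI note of the recorded sample
  lowKey: number;  // lowest key this sample covers
  highKey: number; // highest key this sample covers
}

// Give each key to its nearest root, splitting at the midpoint between neighbouring roots.
function zonesForRoots(roots: number[], rangeLow: number, rangeHigh: number): Zone[] {
  const sorted = roots.slice().sort((a, b) => a - b);
  const zones: Zone[] = [];
  for (let i = 0; i < sorted.length; i++) {
    const lowKey = i === 0 ? rangeLow : zones[i - 1].highKey + 1;
    const highKey = i === sorted.length - 1 ? rangeHigh : Math.floor((sorted[i] + sorted[i + 1]) / 2);
    zones.push({ root: sorted[i], lowKey, highKey });
  }
  return zones;
}

// Largest repitch (in semitones) any zone must cover; partials get scaled by 2^(n/12).
function maxStretch(zones: Zone[]): number {
  let worst = 0;
  for (let i = 0; i < zones.length; i++) {
    const z = zones[i];
    worst = Math.max(worst, z.root - z.lowKey, z.highKey - z.root);
  }
  return worst;
}

const lowOnly = zonesForRoots([40, 52, 64], 28, 88);
const withHigh = zonesForRoots([40, 52, 64, 76], 28, 88);
```

With roots at MIDI notes 40, 52 and 64 covering 28 to 88, the top zone has to stretch 24 semitones; adding a root at 76 halves that to 12. The `lowKey`/`highKey` values are the kind of numbers you'd feed into the sampleMap properties set in the backend section.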

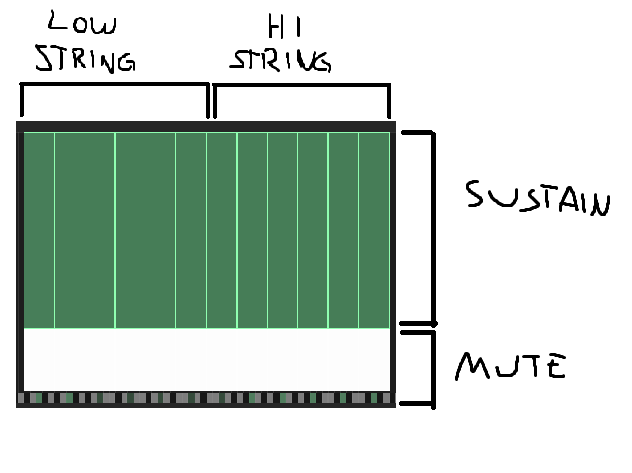

Palm Mutes

Since the first instruments are a guitar and bass, I simply created additional Waveguides from the Palm Muted samples. I tried doing this with a Scriptnode Network and some Filters, but nothing beats the real thing. Because our Residue is an atonal pick attack, we can re-use the same Residues from the sustained notes, further saving on Memory Usage. The Palm Mutes are triggered by low-velocity keypresses, making the instrument simple and intuitive.
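In sampleMap terms, the split could look something like the TypeScript sketch below. It is illustrative: the threshold of 40 and the group names are hypothetical. The two Waveguide groups divide the velocity range, while the Residue sampler covers every velocity, so both articulations reuse the same pick attack.

```typescript
interface VelocityRange {
  group: string;
  lowVel: number;  // VLOW
  highVel: number; // VHIGH
}

// Palm mutes on low velocities, sustains above, one shared Residue across everything.
function velocityLayout(palmMuteTop: number): VelocityRange[] {
  if (palmMuteTop < 1 || palmMuteTop >= 127) {
    throw new Error("threshold must leave room for both layers");
  }
  return [
    { group: "waveguide-palm-mute", lowVel: 1, highVel: palmMuteTop },
    { group: "waveguide-sustain", lowVel: palmMuteTop + 1, highVel: 127 },
    { group: "residue", lowVel: 1, highVel: 127 }, // shared pick attack
  ];
}

const layout = velocityLayout(40);
```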

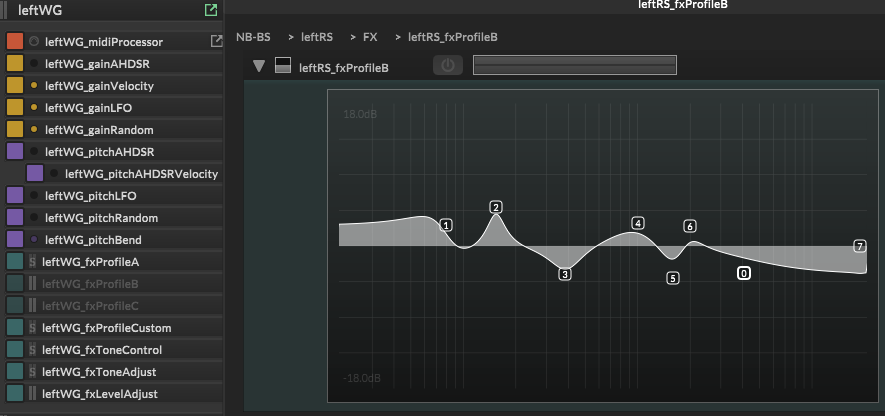

Tone Profiles

Once again, because we've separated the Waveguide and Residue, we can completely change the tone of the instrument without causing weird artifacts. For example, I've set up different body and pickup profiles by using Melda's Freeform Equalizer to tone-match two signals, then recreating the response curve with a regular Parametric EQ. Now the end user can freely change the sound of the instrument to help it fit a mix.
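A "regular Parametric EQ" band is commonly a peaking biquad, so a tone profile boils down to a short list of (frequency, gain, Q) bands in series. The TypeScript sketch below builds one band using the peaking-EQ formulas from Robert Bristow-Johnson's Audio EQ Cookbook, plus a magnitude check. This is an assumption: HISE's Parametric EQ may be implemented differently. The 120 Hz / +4 dB / Q 1.2 "body" band is made up for illustration.

```typescript
// Normalised biquad coefficients (a0 = 1)
interface Biquad { b0: number; b1: number; b2: number; a1: number; a2: number; }

// Peaking EQ from the Audio EQ Cookbook
function peakingEq(sampleRate: number, freqHz: number, gainDb: number, q: number): Biquad {
  const A = Math.pow(10, gainDb / 40);
  const w0 = (2 * Math.PI * freqHz) / sampleRate;
  const alpha = Math.sin(w0) / (2 * q);
  const cosw0 = Math.cos(w0);
  const a0 = 1 + alpha / A;
  return {
    b0: (1 + alpha * A) / a0,
    b1: (-2 * cosw0) / a0,
    b2: (1 - alpha * A) / a0,
    a1: (-2 * cosw0) / a0,
    a2: (1 - alpha / A) / a0,
  };
}

// Magnitude response |H(e^jw)| in dB at a given frequency
function magnitudeDb(c: Biquad, sampleRate: number, freqHz: number): number {
  const w = (2 * Math.PI * freqHz) / sampleRate;
  const numRe = c.b0 + c.b1 * Math.cos(w) + c.b2 * Math.cos(2 * w);
  const numIm = -(c.b1 * Math.sin(w) + c.b2 * Math.sin(2 * w));
  const denRe = 1 + c.a1 * Math.cos(w) + c.a2 * Math.cos(2 * w);
  const denIm = -(c.a1 * Math.sin(w) + c.a2 * Math.sin(2 * w));
  const mag = Math.sqrt((numRe * numRe + numIm * numIm) / (denRe * denRe + denIm * denIm));
  return (20 * Math.log(mag)) / Math.LN10;
}

const bodyBump = peakingEq(44100, 120, 4, 1.2); // hypothetical "body" band: +4 dB at 120 Hz
```

The band hits exactly its set gain at the centre frequency and returns to 0 dB at DC, which is what lets several bands stack into a matched curve.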

FX Keys

Some things just can't be synthesized efficiently. Because our instrument uses so little memory, we can get away with regular sampling for things like Pick Scrapes, Slides, Release Noises and... well, I call them "Skiddley-doos", but I think they're referred to as Gojira Scrapes?

100 Articulations? Rhythmic Loops? Sustained Instruments? A Built-in AI-Powered Riff Generator?

Nope. While some of these things are certainly possible, the goal of NEATBrain from the start was to create a simple, lightweight, great-sounding instrument that covers 80-90% of use-cases. In my experience with NEAT Player, a lot of those extra features, while certainly cool and sometimes useful, are overlooked by most users.

Take built-in FX, for example. It's a lot of extra work to implement an FX Suite in your instruments: you need to add all of the modules, design the GUI for them AND make sure they sound great no matter what settings the end user might choose. All of this adds CPU overhead, makes debugging more annoying (GUI bugs are among the most common), AND only about 5% of your end users will actually use them instead of the plugins they already have.

It's just not worth it (in my opinion).

Articulations and looped phrases are another big one. There are already plenty of Libraries with all kinds of pre-recorded phrases in 7/8 time signatures that need to be distributed on Hard Disks because they're over 100GB. If that's what you need for your specific use-case, great, but NEATBrain is not, and never will be, designed to fill that niche.

The Glorious Backend

Okay, let's talk a little bit about the pipeline. I won't be sharing the entire codebase for obvious reasons, but I can mention some things:

Loris is INSANE. I can record 15 Round-Robin samples of a single note and repitch them without artifacts. The code looks like this:

```javascript
var targetPitch = 440.0;

inline function repitch(obj)
{
    // This function gets passed to the LorisManager using lorisManager.processCustom()
    local ratio = targetPitch / obj.rootFrequency;
    obj.frequency *= ratio; // every partial is scaled by the same ratio
}

lorisManager.processCustom(file, repitch);
```

Automatic SampleMap Generation

Did you know that sampleMaps can be created and edited programmatically? Using something like this, you can set up sampleMaps instantly after resynthesizing the samples with Loris (so even less work!).

```javascript
// where [path] is the folder you've stored your "samples" in
var importedSample = Sampler1.asSampler().importSamples([path], true);

for (s in importedSample)
{
    // I had to use these integers manually, YMMV
    s.set(2, rootNote); // ROOT
    s.set(3, highKey);  // HIGH
    s.set(5, lowVel);   // VLOW
    s.set(6, highVel);  // VHIGH
    // There are options for round-robin groups, mic groups etc.
}

// Make sure you save the sampleMap! (there's probably a way to do it with code)
```

Summary

So there we go: we take a small selection of recorded samples, repitch them and fill any gaps with Loris, truncate (shorten) the samples to take up way less space, automatically create a sampleMap, customize the tone with EQ Matching, and finally play them back alongside the Residue for a pretty faithful reconstruction with the following benefits:

- Tiny Filesize / Memory Usage

- More Round Robins

- More end-user control

- Less work (well, less work sampling stuff)

Worth it imo :)

Built for Rhapsody

Huge thanks to Christoph and David for the no-export solution, Rhapsody. These instruments are super easy to deploy as VST & AU on Windows, macOS and Linux (and soon AAX). No compiling, no codesigning nonsense; it's all handled already.

If you got this far, thanks for reading. I hope this writeup was helpful to those of you that have the same pain-points as me regarding traditional Sample Libraries. There's definitely a time and place for them, but they can also be quite burdensome and restrictive.

-

@iamlamprey Fantastic work, NEATBrain sounds very promising!

-

@iamlamprey Thank you for the info.

This is one of the most innovative products in this forum. Congrats!

-

@iamlamprey sounds really interesting, thank you for sharing your process and a big congratulations!

-

@iamlamprey amazing work really interesting and definitely unique and new! Do you have a sound demo of this?

-

@iamlamprey mate this is just amazing. Congratulations. I had your project in mind since the first time you mentioned it. Now is a real thing!!!!!! Thanks for sharing

-

thanks for the kind words everyone!

@oskarsh said:

Do you have a sound demo of this?

There's a couple already on the website and I plan to add some more:

-

@iamlamprey What AI did you use for the installation video audio?

-

@d-healey

elevenlabs, the first month is $1 and the voice I used was (i think) "Lily"

it took a few generations until I was happy with the cadence

-

@iamlamprey Not that cheap, but Murf AI is good too for this purpose

-

@orange we could also just make our own with the neural node
